When syncing files from SharePoint to Azure Blob Storage using Logic Apps, everything looks smooth — until you hit folder-level deletes.

SharePoint sends event triggers like “Folder Deleted” but doesn’t include which files were inside. That means you can’t easily clean up those files in Blob Storage.

⚠️ The Problem

For example, if a folder /Projects/2025/Q1 is deleted in SharePoint, the Logic App only tells you the leaf folder name — not the list of files it contained. So unless you have a mapping system, those blobs remain orphaned in storage.

💡 The Approach

To solve this, I decided to store SharePoint metadata directly on each blob.

When a file is uploaded via Logic App, I tag it with:

| Metadata Key | Description |
|---|---|
| sp_doc_id | Original SharePoint Document ID |
| sp_path | Full file path in SharePoint |
| sp_site | Site or document library name |
| synced_on | Timestamp of the sync |

This makes Azure Blob act as a metadata-aware mirror of SharePoint.
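As a rough illustration of the tagging step, here is a minimal C# sketch that builds the four metadata entries. The `SyncMetadata` class and its method names are hypothetical, and encoding every value (including the timestamp) as Base64 is an assumption based on how the search function is described later in this post.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper (not from the original post): builds the metadata
// dictionary that would be attached to each synced blob.
public static class SyncMetadata
{
    // Base64-encodes a value so that characters outside plain ASCII
    // (common in SharePoint paths) can be stored as blob metadata.
    public static string Encode(string value) =>
        Convert.ToBase64String(Encoding.UTF8.GetBytes(value ?? string.Empty));

    // Assumption: all four values are encoded the same way.
    public static Dictionary<string, string> Build(
        string docId, string path, string site, DateTimeOffset syncedOn) =>
        new Dictionary<string, string>
        {
            ["sp_doc_id"] = Encode(docId),
            ["sp_path"] = Encode(path),
            ["sp_site"] = Encode(site),
            // "o" is the round-trippable ISO 8601 format.
            ["synced_on"] = Encode(syncedOn.UtcDateTime.ToString("o")),
        };
}
```

If the upload were done from code rather than from a Logic App action, a dictionary like this could be passed to `BlobClient.SetMetadataAsync` in the Azure.Storage.Blobs SDK.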

⚙️ The Blob Metadata Search Function

To make this metadata searchable, I built an Azure Function named BlobMetadataSearch.

It lets you query the blobs in a container using multiple filters combined with logical conditions (and/or). Metadata values are stored in Base64 format to safely handle special characters, and the function automatically decodes them during search.
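The decoding step could look roughly like the sketch below. `MetadataCodec` and its methods are hypothetical names, and falling back to the raw value when a string is not valid Base64 is an assumption, not something the original function is known to do.

```csharp
using System;
using System.Text;

// Hypothetical helper (not from the original post).
public static class MetadataCodec
{
    // Returns the decoded text, or the input unchanged when it is not valid Base64.
    public static string DecodeOrRaw(string stored)
    {
        if (string.IsNullOrEmpty(stored)) return string.Empty;
        try
        {
            return Encoding.UTF8.GetString(Convert.FromBase64String(stored));
        }
        catch (FormatException)
        {
            // Not Base64: treat it as a plain-text value.
            return stored;
        }
    }

    // Case-insensitive "contains" check against the decoded value.
    public static bool ContainsDecoded(string stored, string needle) =>
        DecodeOrRaw(stored).Contains(needle, StringComparison.OrdinalIgnoreCase);
}
```

One caveat with the fallback: a plain-text value that happens to be valid Base64 would be decoded into garbage, so this only works reliably if every value is written encoded.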

Example Request

```json
{
  "container": "sharepointsync",
  "filters": [
    { "key": "sp_path", "value": "Q1", "filterType": "contains", "condition": "and" }
  ]
}
```

🧠 Function Logic (C#)

```csharp
using System.Text.Json;
using Azure.Storage.Blobs;
using Azure.Storage.Blobs.Models;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.Functions.Worker;

// Method of the function class; _blobServiceClient is a BlobServiceClient field.
[Function("BlobMetadataSearch")]
public async Task<IActionResult> RunAsync(
    [HttpTrigger(AuthorizationLevel.Function, "post")] HttpRequest req)
{
    // Read and deserialize the JSON request body.
    var input = JsonSerializer.Deserialize<SearchMetadataRequest>(
        await new StreamReader(req.Body).ReadToEndAsync(),
        new JsonSerializerOptions { PropertyNameCaseInsensitive = true });

    if (string.IsNullOrWhiteSpace(input?.Container))
        return new BadRequestObjectResult("Container is required.");

    var container = _blobServiceClient.GetBlobContainerClient(input.Container);
    var results = new List<BlobSearchResult>();

    // Optional sub-folder, normalized to end with exactly one "/".
    var prefix = string.IsNullOrWhiteSpace(input.SubFolder)
        ? null
        : input.SubFolder.TrimEnd('/') + "/";

    // BlobTraits.Metadata makes the listing include each blob's metadata.
    await foreach (var blobItem in container.GetBlobsAsync(BlobTraits.Metadata, prefix: prefix))
    {
        // Keys are lower-cased for case-insensitive lookup. Values are compared
        // as stored; if they are Base64-encoded, decode them here before filtering.
        var metadata = blobItem.Metadata.ToDictionary(k => k.Key.ToLowerInvariant(), v => v.Value);

        // Stays null when no filters are supplied, so nothing is returned in that case.
        bool? cumulative = null;

        if (input.Filters?.Any() == true)
        {
            foreach (var f in input.Filters)
            {
                var key = f.Key.ToLowerInvariant();
                var filterVal = f.Value ?? string.Empty;

                // A missing key or an unknown filter type counts as "no match".
                var match = metadata.TryGetValue(key, out var stored) &&
                    (f.FilterType?.ToLowerInvariant() switch
                    {
                        "equals" => string.Equals(stored, filterVal, StringComparison.OrdinalIgnoreCase),
                        "contains" => stored.Contains(filterVal, StringComparison.OrdinalIgnoreCase),
                        _ => false
                    });

                // Filters are combined left to right; the default condition is "and".
                cumulative = cumulative is null ? match : (f.Condition?.ToLowerInvariant() switch
                {
                    "or" => cumulative.Value || match,
                    "and" => cumulative.Value && match,
                    _ => cumulative.Value && match
                });
            }
        }

        if (cumulative == true)
            results.Add(new BlobSearchResult
            {
                FileName = Path.GetFileName(blobItem.Name),
                FilePath = blobItem.Name,
                BlobUrl = $"{_blobServiceClient.Uri}{input.Container}/{Uri.EscapeDataString(blobItem.Name)}",
                Metadata = metadata
            });
    }

    return new OkObjectResult(results);
}
```
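The function refers to `SearchMetadataRequest` and `BlobSearchResult` without showing them. Below is a minimal sketch of what those types might look like, inferred only from the properties the function reads and writes, together with the filter loop pulled out into a standalone method so it can be tried in isolation. `MetadataFilter` and `FilterEvaluator` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical shapes, inferred from how the function uses them.
public class MetadataFilter
{
    public string Key { get; set; }
    public string Value { get; set; }
    public string FilterType { get; set; } // "equals" or "contains"
    public string Condition { get; set; }  // "and" or "or"; ignored on the first filter
}

public class SearchMetadataRequest
{
    public string Container { get; set; }
    public string SubFolder { get; set; }
    public List<MetadataFilter> Filters { get; set; }
}

public class BlobSearchResult
{
    public string FileName { get; set; }
    public string FilePath { get; set; }
    public string BlobUrl { get; set; }
    public Dictionary<string, string> Metadata { get; set; }
}

public static class FilterEvaluator
{
    // Same fold as the loop in the function. Expects lower-cased metadata keys.
    public static bool Matches(
        IReadOnlyDictionary<string, string> metadata,
        IEnumerable<MetadataFilter> filters)
    {
        bool? cumulative = null;
        foreach (var f in filters ?? Array.Empty<MetadataFilter>())
        {
            var key = (f.Key ?? string.Empty).ToLowerInvariant();
            var wanted = f.Value ?? string.Empty;
            var match = metadata.TryGetValue(key, out var stored) && stored != null &&
                (f.FilterType?.ToLowerInvariant() switch
                {
                    "equals" => string.Equals(stored, wanted, StringComparison.OrdinalIgnoreCase),
                    "contains" => stored.Contains(wanted, StringComparison.OrdinalIgnoreCase),
                    _ => false
                });

            // Left-to-right fold: anything other than "or" is treated as "and".
            cumulative = cumulative is null
                ? match
                : f.Condition?.ToLowerInvariant() == "or"
                    ? cumulative.Value || match
                    : cumulative.Value && match;
        }
        // No filters at all means no match, as in the function.
        return cumulative == true;
    }
}
```

Because the conditions are folded left to right, there is no operator precedence: a filter list `A`, `or B`, `and C` is evaluated as `(A or B) and C`, not `A or (B and C)`. It is worth ordering filters with that in mind.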

🔄 How It Fits with Logic Apps

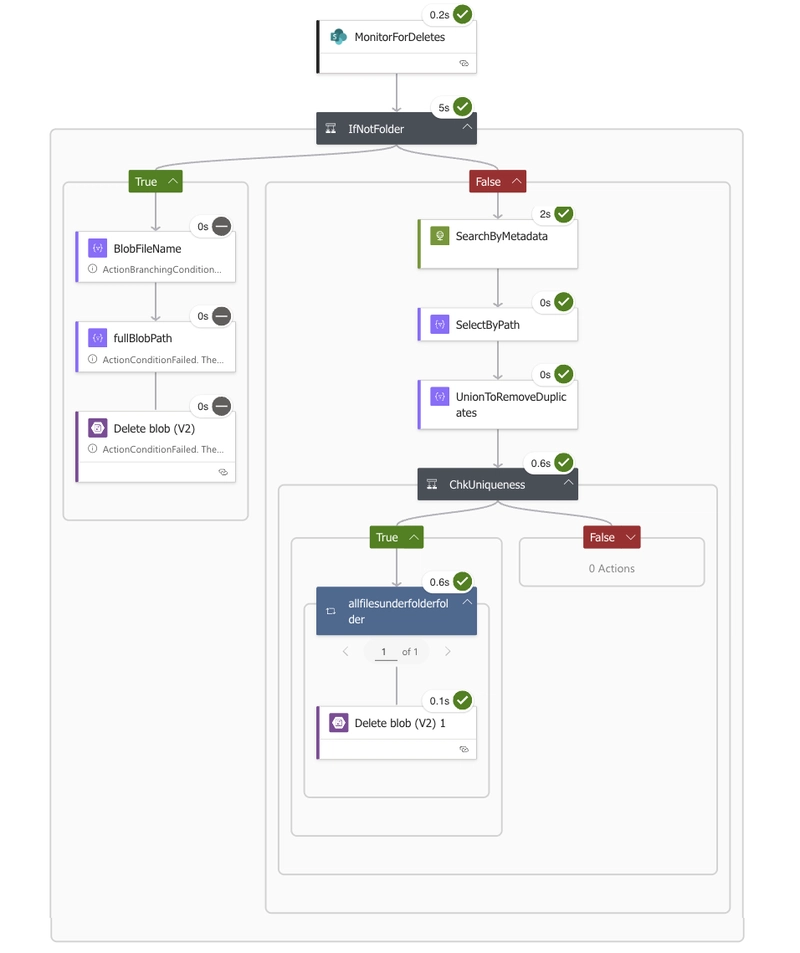

🧭 Simplified Flow

SharePoint Event ──▶ Logic App ──▶ BlobMetadataSearch (Azure Function) ──▶ List of matched blobs ──▶ Delete each blob from Storage

The Logic App triggers on SharePoint events, calls the Azure Function to find related blobs via metadata, and removes them from storage (and optionally Azure AI Search).

In the Logic App, whenever a delete event comes from SharePoint:

- If it’s a file event → delete that blob directly.
- If it’s a folder event →
  - Call the BlobMetadataSearch function via HTTP action.
  - Filter blobs where sp_path contains that folder path.
  - Loop through the returned results and delete them.

This ensures your Blob Storage stays in perfect sync with SharePoint, even when only folder-level events are received.
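For the folder branch, the body sent to the function could be assembled along the lines of this sketch. `FolderDeleteRequest` is a hypothetical name, and the code assumes the full folder path is available from the trigger output. Appending a trailing slash is also an assumption, added so that a delete of `/Projects/2025/Q1` does not also match `/Projects/2025/Q10`.

```csharp
using System.Text.Json;

// Hypothetical helper (not from the original post): builds the JSON body
// for a BlobMetadataSearch call after a folder delete.
public static class FolderDeleteRequest
{
    public static string Build(string container, string folderPath)
    {
        // Normalize to exactly one trailing slash to avoid matching sibling
        // folders that merely share a name prefix.
        var normalized = folderPath.TrimEnd('/') + "/";

        var body = new
        {
            container,
            filters = new[]
            {
                new { key = "sp_path", value = normalized, filterType = "contains", condition = "and" }
            }
        };
        return JsonSerializer.Serialize(body);
    }
}
```

In a Logic App the same body would typically be composed directly in the HTTP action. The C# version is only meant to make the shape of the request and the trailing-slash detail explicit.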

🧩 Benefits

✅ Keeps SharePoint and Blob Storage consistent

✅ Eliminates orphaned or outdated files

✅ Simplifies cleanup logic in Logic Apps

💬 Summary

By combining Logic Apps, SharePoint events, and a custom Blob Metadata Search Function, you can build a robust and maintainable document sync pipeline. Instead of managing complex mappings, just store smart metadata — and let Azure do the searching for you.