Contents:

1. Introduction

2. Problem

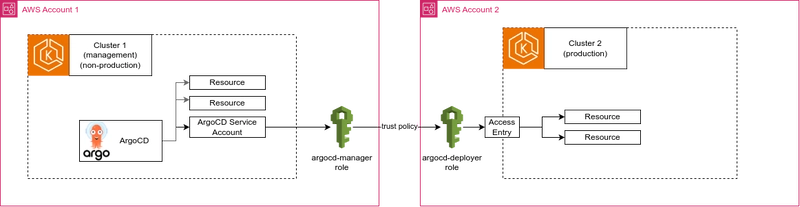

- AWS Multi-Account infrastructure with 2 EKS clusters

- ArgoCD in cluster 1 and manages cluster 2 as well

- Need for a secure access to a second cluster

- Diagram

3. Challenge

- No peering and no communication allowed between two clusters

4. Solution

- IAM OIDC provider configured for the cluster

- Implement access via IAM roles, IRSA and EKS IAM Access Entries

- IAM roles for first account and second account, policies

- Configure ArgoCD SA

- Configure ArgoCD helm values

- Verify access

5. Conclusion

Introduction

In this article, we’ll explore how to set up multi-cluster access for ArgoCD using IAM Roles for Service Accounts (IRSA). More specifically, we’ll show how to securely enable ArgoCD to manage multiple EKS clusters across AWS accounts.

Why this matters: As organizations scale their Kubernetes workloads, GitOps needs to scale with them. ArgoCD + IRSA provides a secure, credential-free way to manage many clusters from a single control plane.

Problem

Adopting a multi-account strategy in AWS – separating Production and Non-Production environments – is a widely recommended approach that strengthens security, simplifies resource management, and enables more granular access control. Our infrastructure followed this pattern, with each environment hosted in its own AWS account and running its own EKS cluster.

Initially, we had a single EKS cluster where ArgoCD was installed and operating in in-cluster mode, deploying and managing workloads within the same cluster. However, to strengthen isolation and follow AWS security best practices, we migrated to a multi-account setup. Each environment runs its own EKS cluster in a separate AWS account — introducing the need for secure cross-cluster, cross-account deployment.

This presented a new challenge: how to securely enable ArgoCD, residing in Cluster 1 (e.g., the managed internal Production cluster), to manage deployments in Cluster 2 (e.g., Production). Cross-cluster, cross-account deployment raised several security and configuration considerations, particularly around authentication, access control, and network reachability.

Our goal was to implement this multi-cluster management securely, ensuring that ArgoCD could interact with the second cluster without compromising the isolation and integrity of either environment.

Solution

This setup requires:

- IRSA enabled on your ArgoCD EKS cluster

- An IAM role (“management role”) for your ArgoCD EKS cluster with an appropriate trust policy and permission policies

- A role for the second cluster being added to ArgoCD that the ArgoCD management role can assume

- An Access Entry within each EKS cluster added to ArgoCD that gives the cluster’s role RBAC permissions to perform actions within the cluster

Step 1. Verify that IRSA is enabled on your main (management) EKS cluster

Retrieve your cluster’s OIDC issuer ID and store it in a variable.

cluster_name=

oidc_id=$(aws eks describe-cluster --name "$cluster_name" --query "cluster.identity.oidc.issuer" --output text | cut -d '/' -f 5)

echo $oidc_id

Determine whether an IAM OIDC provider with your cluster’s issuer ID is already in your account.

aws iam list-open-id-connect-providers | grep "$oidc_id" | cut -d '/' -f4

If output is returned, then you already have an IAM OIDC provider for your cluster and you can skip the next step. If no output is returned, then you must create an IAM OIDC provider for your cluster.
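The check above can also be expressed as a small script, which is handy if you want to run it from automation. This is only a sketch: the issuer URL, account ID, and provider ARN below are made-up sample values, and in practice you would feed in the output of the two `aws` commands shown above.

```python
from urllib.parse import urlparse


def oidc_id_from_issuer(issuer_url: str) -> str:
    """Return the last path segment of an EKS OIDC issuer URL (same as `cut -d '/' -f 5`)."""
    path = urlparse(issuer_url).path  # e.g. "/id/EXAMPLE..."
    return path.rstrip("/").split("/")[-1]


def provider_exists(provider_arns: list[str], oidc_id: str) -> bool:
    """True if any IAM OIDC provider ARN in the account ends with this issuer ID."""
    return any(arn.endswith("/id/" + oidc_id) for arn in provider_arns)


# Sample values (assumptions, not taken from a real account).
issuer = "https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE"
arns = [
    "arn:aws:iam::111122223333:oidc-provider/"
    "oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE"
]

oidc_id = oidc_id_from_issuer(issuer)
print(oidc_id, provider_exists(arns, oidc_id))
```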

Step 2. Create the ArgoCD Management role and ArgoCD Deployer role

The role created for Argo CD (the “management role”) will need to have a trust policy suitable for assumption by certain Argo CD Service Accounts and by itself.

The service accounts that need to assume this role are:

- argocd-application-controller

- argocd-applicationset-controller

- argocd-server

If we create a role named argocd-manager for this purpose, the following is an example trust policy suitable for this need. Ensure that the Argo CD cluster has an IAM OIDC provider configured.
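As a rough illustration of such a trust policy, the sketch below builds the JSON document with one statement that lets the role assume itself and one that lets the three Argo CD service accounts assume it through the cluster's OIDC provider. The account ID, region, issuer ID, and the `argocd` namespace are placeholder assumptions; substitute your own values.

```python
import json


def management_trust_policy(account_id, oidc_provider, namespace, service_accounts,
                            role_name="argocd-manager"):
    """Build a trust policy for the Argo CD management role (IRSA + self-assumption)."""
    role_arn = f"arn:aws:iam::{account_id}:role/{role_name}"
    subjects = [f"system:serviceaccount:{namespace}:{sa}" for sa in service_accounts]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # The management role may assume itself.
                "Sid": "ExplicitSelfRoleAssumption",
                "Effect": "Allow",
                "Principal": {"AWS": "*"},
                "Action": "sts:AssumeRole",
                "Condition": {"ArnLike": {"aws:PrincipalArn": role_arn}},
            },
            {   # The Argo CD service accounts may assume it via the OIDC provider.
                "Sid": "ServiceAccountRoleAssumption",
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_provider}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {"StringEquals": {
                    f"{oidc_provider}:sub": subjects,
                    f"{oidc_provider}:aud": "sts.amazonaws.com",
                }},
            },
        ],
    }


# Placeholder values (assumptions for illustration only).
policy = management_trust_policy(
    account_id="111122223333",
    oidc_provider="oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE",
    namespace="argocd",
    service_accounts=[
        "argocd-application-controller",
        "argocd-applicationset-controller",
        "argocd-server",
    ],
)
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the role's trust relationship or passed to whatever tool you use to manage IAM.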

Get the OIDC provider URL and the cluster certificate authority from the EKS cluster page in the AWS Management Console.

The Argo CD management role (argocd-manager in our example) additionally needs to be allowed to assume a role for each cluster added to Argo CD.

As stated, each EKS cluster added to Argo CD should have its corresponding role. This role should not have any permission policies. Instead, it will be used to authenticate against the EKS cluster’s API. The Argo CD management role assumes this role, and calls the AWS API to get an auth token. That token is used when connecting to the added cluster’s API endpoint.

To let a cluster be added to Argo CD through the argocd-deployer role, set that role’s trust policy so that the Argo CD management role is allowed to assume it. Note that both sides are required: the management role’s permission policy must allow it to assume the argocd-deployer role, and the argocd-deployer role’s trust policy must permit that action.
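A minimal sketch of that trust policy for the argocd-deployer role follows. The two account IDs are placeholders (the management role lives in the first account, the deployer role in the second), and the role name argocd-manager is taken from the example above.

```python
import json


def deployer_trust_policy(management_account_id, management_role_name="argocd-manager"):
    """Trust policy that lets the Argo CD management role assume the deployer role."""
    management_role_arn = (
        f"arn:aws:iam::{management_account_id}:role/{management_role_name}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": management_role_arn},
                "Action": "sts:AssumeRole",
            }
        ],
    }


# Placeholder account ID of the account that hosts the Argo CD cluster.
deployer_trust = deployer_trust_policy("111122223333")
print(json.dumps(deployer_trust, indent=2))
```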

After that, update the permission policy of the argocd-manager role to include the following:
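As an illustration, the permission statement for the argocd-manager role could look like the document built below: a single `sts:AssumeRole` statement listing the role of every cluster Argo CD should manage. The second account's ID is a placeholder assumption.

```python
import json


def manager_permission_policy(cluster_role_arns):
    """Permission policy allowing the management role to assume each cluster role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sts:AssumeRole",
                "Resource": list(cluster_role_arns),
            }
        ],
    }


# Placeholder ARN of the deployer role in the second account.
manager_permissions = manager_permission_policy(
    ["arn:aws:iam::444455556666:role/argocd-deployer"]
)
print(json.dumps(manager_permissions, indent=2))
```

Adding another cluster later means appending one more role ARN to the `Resource` list.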

Step 3. Configure Cluster Credentials for the second cluster and modify argocd-cm

Now that everything has been configured, add these values to your ArgoCD ConfigMap or (as in our case) update the ArgoCD Helm chart values to include this configuration.

Note that the CA data is stored securely in SSM Parameter Store and fetched via the aws_ssm_parameter data source.
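For orientation, here is a sketch of the cluster credentials in the shape of Argo CD's declarative cluster Secret, where `awsAuthConfig` names the EKS cluster and the role to assume, and `tlsClientConfig` carries the CA data. It is not the Helm values from our setup: the exact Helm keys depend on the chart version, and the cluster name, endpoint, account ID, and CA value below are placeholders.

```python
import json


def cluster_secret(name, server, eks_cluster_name, role_arn, ca_data_b64):
    """Build an Argo CD cluster Secret manifest that authenticates via an IAM role."""
    config = {
        "awsAuthConfig": {"clusterName": eks_cluster_name, "roleARN": role_arn},
        "tlsClientConfig": {"insecure": False, "caData": ca_data_b64},
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {
            "name": f"{name}-cluster",
            "namespace": "argocd",  # assumed Argo CD namespace
            "labels": {"argocd.argoproj.io/secret-type": "cluster"},
        },
        "type": "Opaque",
        "stringData": {
            "name": name,
            "server": server,
            "config": json.dumps(config),  # Argo CD expects this field as a JSON string
        },
    }


# Placeholder values for the second cluster.
secret = cluster_secret(
    name="cluster-2",
    server="https://EXAMPLE.gr7.us-east-1.eks.amazonaws.com",
    eks_cluster_name="cluster-2",
    role_arn="arn:aws:iam::444455556666:role/argocd-deployer",
    ca_data_b64="<base64-encoded cluster CA certificate>",
)
print(json.dumps(secret, indent=2))
```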

Step 4. Add the EKS Access Entry for each cluster

To finalize the connection, each EKS cluster added to Argo CD needs an Access Entry that gives the cluster’s role RBAC permissions to perform actions within the cluster. You can manage access entries either manually in the EKS section of the AWS Management Console or via Terraform.

Create an access entry for each role, putting the role ARN in the Principal field, i.e. arn:aws:iam:: for cluster 2, and arn:aws:iam:: for cluster 1.

Be sure to add AmazonEKSClusterAdminPolicy in the next window using the “Add policy” button.
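If you prefer the AWS CLI over the console, the same two actions map to `aws eks create-access-entry` and `aws eks associate-access-policy`. The sketch below only assembles and prints the commands without running them; the cluster name and role ARN are placeholders, and the cluster-wide access scope is an assumption you may want to narrow.

```python
import shlex

ADMIN_POLICY_ARN = "arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy"


def access_entry_commands(cluster_name, principal_arn):
    """Return the two AWS CLI invocations that create and authorize an access entry."""
    create = [
        "aws", "eks", "create-access-entry",
        "--cluster-name", cluster_name,
        "--principal-arn", principal_arn,
    ]
    associate = [
        "aws", "eks", "associate-access-policy",
        "--cluster-name", cluster_name,
        "--principal-arn", principal_arn,
        "--policy-arn", ADMIN_POLICY_ARN,
        "--access-scope", "type=cluster",  # assumption: cluster-wide scope
    ]
    return [create, associate]


# Placeholder cluster name and role ARN for the second cluster.
commands = access_entry_commands(
    "cluster-2", "arn:aws:iam::444455556666:role/argocd-deployer"
)
for cmd in commands:
    print(shlex.join(cmd))
```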

Step 5. Finalizing & verification

After changing the ArgoCD ConfigMap and triggering a Terraform run, make sure that everything has been updated within the ArgoCD Helm deployment. Verify that the ArgoCD Service Accounts have been annotated with the new IAM role ARN.
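One way to check the Service Accounts is to inspect the `eks.amazonaws.com/role-arn` annotation that IRSA relies on. The sketch below works on the parsed output of `kubectl get serviceaccount -n argocd -o json`; the inline sample data and the role ARN are made up for illustration.

```python
IRSA_ANNOTATION = "eks.amazonaws.com/role-arn"


def service_accounts_missing_role(sa_list, expected_arn, names):
    """Return the names of service accounts that lack the expected IRSA annotation."""
    by_name = {item["metadata"]["name"]: item for item in sa_list.get("items", [])}
    missing = []
    for name in names:
        metadata = by_name.get(name, {}).get("metadata", {})
        annotations = metadata.get("annotations") or {}
        if annotations.get(IRSA_ANNOTATION) != expected_arn:
            missing.append(name)
    return missing


# Made-up sample shaped like `kubectl get serviceaccount -n argocd -o json`.
role_arn = "arn:aws:iam::111122223333:role/argocd-manager"
sample = {
    "items": [
        {"metadata": {"name": "argocd-server",
                      "annotations": {IRSA_ANNOTATION: role_arn}}},
        {"metadata": {"name": "argocd-application-controller"}},
    ]
}
required = [
    "argocd-application-controller",
    "argocd-applicationset-controller",
    "argocd-server",
]
missing = service_accounts_missing_role(sample, role_arn, required)
print(missing)
```

An empty list means all three service accounts carry the expected role ARN.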

Once everything has been applied correctly, restart the ArgoCD deployments using:

- kubectl rollout restart deployment argo-cd-argocd-server -n argocd

- kubectl rollout restart deployment argo-cd-argocd-applicationset-controller -n argocd

- kubectl rollout restart deployment argo-cd-argocd-application-controller -n argocd

To verify inter-cluster connectivity, open the ArgoCD UI and navigate to the Clusters panel. There you can check that the cluster credentials have been configured correctly and see the connection status. If something goes wrong, the panel shows a concise error message that helps with troubleshooting.

In the CLI, the argocd cluster list output looks like this:

If the connection status is Successful, you can now deploy your applications’ resources to the second cluster! You can use this cluster in the destination configuration of your applications this way:
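As a sketch of what that destination looks like, the snippet below builds a minimal Argo CD Application manifest whose `spec.destination.server` points at the second cluster. The repository URL, path, namespaces, and API endpoint are hypothetical; the printed JSON can be applied with `kubectl apply -f`, since Kubernetes accepts JSON manifests.

```python
import json


def application(name, repo_url, path, dest_server, dest_namespace,
                project="default", revision="HEAD"):
    """Build a minimal Argo CD Application targeting a registered remote cluster."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},  # assumed Argo CD namespace
        "spec": {
            "project": project,
            "source": {"repoURL": repo_url, "path": path, "targetRevision": revision},
            # The destination server must match the server URL of the registered cluster.
            "destination": {"server": dest_server, "namespace": dest_namespace},
        },
    }


# Hypothetical application deployed to the second cluster.
app = application(
    name="demo-app",
    repo_url="https://github.com/example/demo-app.git",
    path="manifests",
    dest_server="https://EXAMPLE.gr7.us-east-1.eks.amazonaws.com",
    dest_namespace="demo",
)
print(json.dumps(app, indent=2))
```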

This concludes the tutorial! Be sure to check out the GitHub repository, which contains all of the source code shown in the article along with Terraform examples.

Conclusion

Managing multiple Amazon EKS clusters with ArgoCD using IAM Roles for Service Accounts (IRSA) offers a secure, scalable, and cloud-native approach to continuous deployment in AWS environments. By leveraging IRSA, you ensure fine-grained access control without hardcoding AWS credentials, aligning with best practices for cloud security and governance.

This setup enables ArgoCD to authenticate with and deploy to multiple clusters, even across different AWS accounts, while keeping operations centralized and auditable and avoiding static credentials. If you’re building a secure GitOps platform on AWS, this architecture is a proven foundation.

If you have any thoughts, questions or issues, feel free to share them in the comments. Let’s make our clusters secure and easy-to-manage!

Scaling GitOps across AWS accounts? Let’s make it effortless.

Talk to Dedicatted — your cloud, our mission.