When we talk about Artificial Intelligence in healthcare, the first thing that comes to mind is usually accuracy. We want the model to predict correctly, whether it’s diagnosing eye diseases, classifying scans, or detecting early signs of Alzheimer’s.

But here’s the truth I learned from my project: accuracy alone is not enough.

The Challenge I Faced

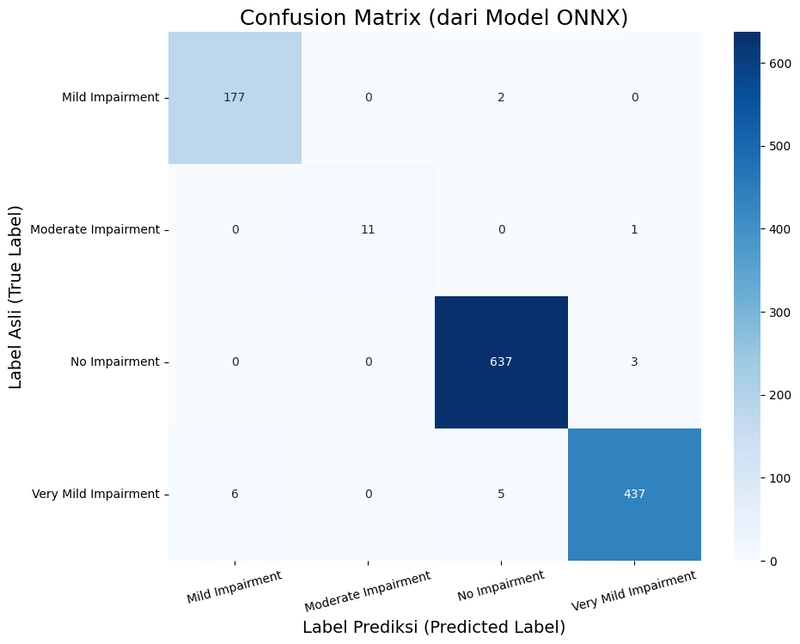

In my Alzheimer’s early detection project, the model was performing well on paper.

Classification report:

| Class | Precision | Recall | F1-score | Support |
|---|---|---|---|---|
| Mild Impairment | 0.97 | 0.99 | 0.98 | 179 |
| Moderate Impairment | 1.00 | 0.92 | 0.96 | 12 |
| No Impairment | 0.99 | 1.00 | 0.99 | 640 |
| Very Mild Impairment | 0.99 | 0.98 | 0.98 | 448 |
| Accuracy | | | 0.99 | 1279 |
| Macro avg | 0.99 | 0.97 | 0.98 | 1279 |
| Weighted avg | 0.99 | 0.99 | 0.99 | 1279 |
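
The gap between the macro and weighted recall in the report comes from class imbalance. Here is a minimal sketch that reproduces both averages from the per-class recall and support values reported above; the variable names are illustrative and not taken from the original notebook.

```python
# Per-class (recall, support), copied from the classification report above.
report = {
    "Mild Impairment": (0.99, 179),
    "Moderate Impairment": (0.92, 12),
    "No Impairment": (1.00, 640),
    "Very Mild Impairment": (0.98, 448),
}

recalls = [recall for recall, _ in report.values()]
supports = [support for _, support in report.values()]
total = sum(supports)

# Macro average: every class counts equally, however few samples it has.
macro_recall = sum(recalls) / len(recalls)

# Weighted average: each class counts in proportion to its support.
weighted_recall = sum(r * s for r, s in zip(recalls, supports)) / total

# Share of the test set that belongs to the rarest class.
rarest_share = min(supports) / total

print(f"macro recall:    {macro_recall:.2f}")
print(f"weighted recall: {weighted_recall:.2f}")
print(f"rarest class share of test set: {rarest_share:.1%}")
```

The Moderate Impairment class has only 12 samples, so its lower recall barely moves the weighted average or the overall accuracy, while the macro average shows it more clearly.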

Matthews correlation coefficient (MCC): 0.9781
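
For readers unfamiliar with MCC, here is a small sketch of the multiclass formula computed from a confusion matrix. The function name and the toy matrix are hypothetical and only for illustration; the 0.9781 above comes from the project's own test set, whose confusion matrix is not shown here.

```python
import math


def multiclass_mcc(confusion):
    """Matthews correlation coefficient from a square confusion matrix.

    confusion[i][j] is the number of samples whose true class is i
    and whose predicted class is j.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n_classes))
    true_counts = [sum(row) for row in confusion]
    pred_counts = [sum(row[k] for row in confusion) for k in range(n_classes)]

    numerator = correct * total - sum(
        t * p for t, p in zip(true_counts, pred_counts)
    )
    denominator = math.sqrt(
        (total**2 - sum(p * p for p in pred_counts))
        * (total**2 - sum(t * t for t in true_counts))
    )
    # Convention: return 0.0 when the denominator is zero
    # (for example, when only one class is ever predicted).
    return numerator / denominator if denominator else 0.0


# Hypothetical toy case: 90 majority samples, 10 minority samples,
# and a model that always predicts the majority class.
always_majority = [[90, 0], [10, 0]]
accuracy = (90 + 0) / 100
print(f"accuracy: {accuracy:.2f}, MCC: {multiclass_mcc(always_majority):.2f}")
```

In this toy case the accuracy is 0.90 while the MCC is 0, which is why MCC is a useful companion metric on imbalanced data.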

The numbers looked impressive, but there was still one big question:

How can we trust what the AI sees?

Doctors won’t just accept a probability score. Patients and their families won’t feel reassured just by a number. They need to know why the model made that decision.

Discovering Explainability with Grad-CAM

That’s where I explored Grad-CAM (Gradient-weighted Class Activation Mapping).

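Don’t worry, it’s not as complicated as it sounds. Grad-CAM takes the gradients of the predicted class score with respect to a convolutional layer’s feature maps and uses them to build a heatmap that highlights the regions of an image the model focuses on when making a prediction.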

In other words, it turns the “black box” into something more transparent and human-readable.
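
The core Grad-CAM computation is short. Below is a minimal NumPy sketch, assuming you have already extracted the feature maps of a chosen layer and the gradients of the target class score with respect to them (for example with framework hooks). The function name and the random stand-in arrays are assumptions for illustration, not the code from my notebook.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Compute a Grad-CAM heatmap.

    activations: array of shape (K, H, W), feature maps of the chosen layer.
    gradients:   array of shape (K, H, W), gradient of the target class
                 score with respect to those feature maps.
    Returns an (H, W) heatmap scaled to [0, 1].
    """
    # Channel weights: average each channel's gradients over space.
    weights = gradients.mean(axis=(1, 2))  # shape (K,)
    # Weighted sum of feature maps, then ReLU to keep positive evidence only.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)
    # Normalize for display; leave an all-zero map unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Random stand-ins for real activations and gradients (8 channels, 7x7 maps).
rng = np.random.default_rng(0)
activations = rng.random((8, 7, 7))
gradients = rng.normal(size=(8, 7, 7))
heatmap = grad_cam(activations, gradients)
print(heatmap.shape)
```

The heatmap has the spatial size of the feature maps, so it is usually upsampled to the input image size before being displayed.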

Before and After Grad-CAM

In my Alzheimer’s project, the difference was clear:

Before

The model predicted “Mild Impairment” with high confidence, but I had no way to explain why.

After Grad-CAM

The heatmap showed exactly which parts of the brain scan the AI considered most important. More importantly, they were the medically relevant regions linked to early Alzheimer’s symptoms.

That small shift made a big difference. Suddenly, the model wasn’t just a silent judge giving out labels. It became a tool that doctors could actually discuss, question, and trust.
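
To produce the kind of picture a doctor can look at, the coarse heatmap is laid over the original scan. Here is a minimal sketch using nearest-neighbour upsampling and a red tint; the function name, the `alpha` parameter, and the requirement that the scan size be a multiple of the heatmap size are simplifying assumptions, not the plotting code from my notebook.

```python
import numpy as np


def overlay_heatmap(scan, heatmap, alpha=0.4):
    """Blend a coarse heatmap onto a grayscale scan.

    scan:    (H, W) array with values in [0, 1].
    heatmap: (h, w) array with values in [0, 1]; H and W must be
             multiples of h and w.
    Returns an (H, W, 3) RGB array in [0, 1], with the heatmap tinted red.
    """
    H, W = scan.shape
    h, w = heatmap.shape
    if H % h or W % w:
        raise ValueError("scan size must be a multiple of heatmap size")
    # Nearest-neighbour upsampling: repeat each heatmap cell over its patch.
    upsampled = np.kron(heatmap, np.ones((H // h, W // w)))
    # Grayscale scan to RGB, plus a pure red layer to blend in.
    rgb = np.repeat(scan[..., None], 3, axis=2)
    red = np.zeros_like(rgb)
    red[..., 0] = 1.0
    # Per-pixel blend: strongest where the heatmap is high,
    # untouched where the heatmap is zero.
    weight = (alpha * upsampled)[..., None]
    return (1 - weight) * rgb + weight * red


# Toy example: a uniform gray 4x4 "scan" and a 2x2 heatmap with one hot cell.
scan = np.full((4, 4), 0.5)
heatmap = np.array([[1.0, 0.0], [0.0, 0.0]])
blended = overlay_heatmap(scan, heatmap)
print(blended.shape)
```

In practice a smoother interpolation and a proper colormap give nicer images, but the idea is the same: the scan stays visible and the highlighted regions stand out.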

What I Learned

This project taught me a valuable lesson:

- Accuracy is powerful, but explainability is what builds trust.

- In sensitive areas like healthcare, trust matters as much as performance.

- Tools like Grad-CAM are not just technical tricks; they are bridges between AI researchers and medical professionals.

Final Thoughts

Working on this project reminded me why I got into AI research in the first place: not just to build models, but to build models that people can trust and use.

Explainable AI is not optional anymore. It’s the key to making AI truly impactful in real life, especially in areas that touch human health.

See the full Alzheimer’s detection notebook here: https://www.kaggle.com/code/hafizabdiel/alzheimer-classification-with-swin-efficient-net

If you’re curious about my other AI projects, you can find them here: http://abdielz.tech/