tl;dr

- Over the weekend, I put Cursor, Windsurf, and Copilot (in VS Code) to the test using GPT-5.

- I tested them in both greenfield (building a project like our Landscape from scratch) and brownfield (working with an existing codebase) scenarios.

- We leaned into a spec-first development approach (more about this here).

- All 3 IDEs got the job done. The real differences show up in workflow ergonomics, UI polish, and how much hand-holding each agent needs.

- My recommendation is to play with these IDEs yourself, and pick your own winner based on your taste and team norms.

- Think of this as a field journal from a dev, not a lab benchmark (test window: Aug 22–24, 2025).

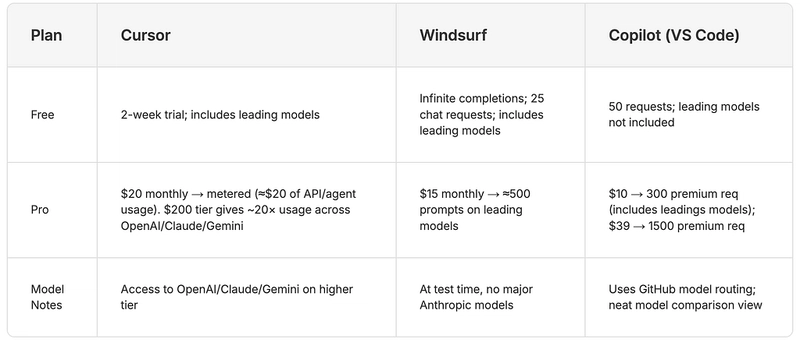

Pricing at a glance

Greenfield exploration: Build a project from scratch with a spec

I prompted each tool with this greenfield-spec.md (describing the architecture and a basic MERN stack scaffold), then asked it to implement the spec, iterate, and adjust tests. In my runs, the specs produced by all three were very similar, and all three delivered working code.

On UI/UX, Cursor felt the most professional in its flow and explanations, but it once refused to auto-build the project from the spec. When it did build, it created a clean structure plus tests and adjusted correctly as I changed the spec.

I also appreciated that Cursor shows the percentage of context consumed (~400k-token total context: 272k input + 128k output). As for inline speed: Cursor ≈ Copilot > Windsurf. Though fast, Copilot’s inline refactoring fell short of Windsurf’s and Cursor’s output.
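To make the context figures concrete, here is a minimal sketch of how a "percent of context consumed" readout could be computed from those numbers. The function name `contextPercentUsed` is hypothetical, and measuring against the combined 400k total is an assumption; this is not how Cursor necessarily calculates its indicator.

```typescript
// Token budgets taken from the figures quoted in the post.
const INPUT_BUDGET = 272_000;
const OUTPUT_BUDGET = 128_000;
const TOTAL_CONTEXT = INPUT_BUDGET + OUTPUT_BUDGET; // 400,000 tokens

// Hypothetical helper: percentage of the context window used so far,
// rounded to one decimal place and capped at 100.
function contextPercentUsed(tokensUsed: number, total: number = TOTAL_CONTEXT): number {
  if (tokensUsed < 0 || total <= 0) {
    throw new RangeError("tokensUsed must be >= 0 and total must be > 0");
  }
  const percent = (tokensUsed / total) * 100;
  return Math.min(100, Math.round(percent * 10) / 10);
}

console.log(contextPercentUsed(100_000)); // 25
```

A readout like this is mostly useful as a cue for when to start a fresh session or trim what you have attached to the chat.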

When running the spec, Windsurf went full send. It created the whole project structure automatically (folders/files) without extra prompting. Windsurf’s chat pane made it easy to distinguish model “thinking”, command runs, and issues.

Copilot (VS Code) mostly showed file contents in chat with path hints but didn’t immediately write an on-disk tree. I did appreciate Copilot’s in-IDE browser preview though, which was delightful for quick checks.

For testing, the tests generated by Windsurf and Cursor passed on the first run in my sample. Copilot authored the strongest tests, with granular mocking boundaries and edge case coverage. Though I had to wrestle a bit to get them passing, the self-contained modules made failures immediately traceable to specific component behaviors.


Brownfield exploration: extend a legacy codebase

Getting started: When running locally, Copilot got the server up first, followed by Windsurf and then Cursor. Notably, Cursor created a new .env.local instead of finding the right one, which slowed me down.

Codebase explanation: When it came to understanding the existing code, Windsurf explained the codebase exceptionally well, and its use of response formatting and highlights made it the best experience of the three. That said, Cursor and Copilot were also able to read and explain legacy code effectively.

New feature (asked all to add a tool detail page + schema comparison across tools): All three successfully built from the brownfield-spec.md and produced sensible tests that ran well. This reinforced an important lesson: the clearer your spec, the more concrete the result.

Subtle PostHog lazy-init bug: Here, Windsurf and Cursor diagnosed it faster, while Copilot missed it in my run. Note that the file contained no comments pointing at the bug (that would have been too easy 🙃).
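The post does not show the bug itself, so the following is only a hypothetical illustration of the general class of problem a lazy-initialization bug can belong to: events fired before the client exists get silently dropped. `AnalyticsClient` and `LazyAnalytics` are made-up stand-ins and are not the PostHog SDK's API.

```typescript
// Hypothetical stand-in for an analytics SDK client (NOT the PostHog API).
interface AnalyticsClient {
  capture(event: string): void;
}

class LazyAnalytics {
  private client: AnalyticsClient | null = null;
  private pending: string[] = [];
  private factory: () => AnalyticsClient;

  constructor(factory: () => AnalyticsClient) {
    this.factory = factory;
  }

  // Buggy variant: before init() runs, the optional call is a silent no-op,
  // so early events disappear without any error.
  captureBuggy(event: string): void {
    this.client?.capture(event);
  }

  // Safer variant: buffer events until the client has been created.
  capture(event: string): void {
    if (this.client === null) {
      this.pending.push(event);
      return;
    }
    this.client.capture(event);
  }

  // Idempotent initializer that flushes buffered events in order.
  init(): void {
    if (this.client !== null) return;
    const client = this.factory();
    this.client = client;
    for (const event of this.pending) client.capture(event);
    this.pending = [];
  }
}
```

Bugs of this shape are hard to spot because nothing throws; the only symptom is missing data, which is why an agent that reads the initialization path end to end has an advantage.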

Scripted request across multiple files: All 3 executed the steps and staged changes properly, though with different approaches. Copilot required more approvals (e.g., 7 terminal prompts vs. Windsurf’s 3 and Cursor’s 1), which is great for caution but slower for flow. Interestingly, Copilot would sometimes say it’s “been working on this problem for a while” and stall, whereas the others tended to keep iterating until done.

UI & DX details that matter

Overall, Windsurf > Cursor > Copilot for terminal/chat integration. Copilot runs commands outside the chat pane, which unfortunately breaks the narrative thread for me. Meanwhile, Cursor’s default look and progress indicators feel the most cohesive, Windsurf communicates what’s happening the best, and Copilot nails browser-in-IDE and markdown rendering.

On context and memory: Windsurf has a particularly strong “just remembers” feel. Cursor gets there with rules/notes but will lose the thread in long sessions, while Copilot keeps things simpler and, as a result, more ephemeral.

Notable touches:

- Windsurf can continue proposing changes in the background while you review staged diffs — a nice touch for maintaining flow.

- Cursor’s multi-file edit flow is strong, though I’d love a nudge when I forget I’m in “Ask” vs. “Agent” mode.

- Copilot’s “Ask” mode sometimes defaults to doing rather than explaining; additionally, starting a new chat ends the current edit session.

- I hit one infinite loop with Copilot (malformed ); a restart fixed it, but it made me wonder: could this have been resolved without my intervention?

Implementation Results, Key Takeaways.

So… who should use what?

All three experiences ride on VS Code: Windsurf and Cursor are forks, and Copilot runs inside VS Code. That means capability deltas may be subtle: same keyboard shortcuts, similar models, similar APIs. The differentiation shows up in micro-interactions, like how the agent plans, how much it explains, how the terminal/chat loop flows, and how well the UI helps you stay oriented. If you’re expecting a jaw-dropping, orders-of-magnitude difference, that wasn’t my experience. In my opinion, the small product details and moments of delight are what decide your daily driver.

That said, I found that each IDE may be the right fit depending on your use case. This is a lightly opinionated take, not gospel:

- Cursor → You care about polish, tight multi-file edits, and a feeling that the agent understands when to execute vs. chat. Great for folks who want structure and speed in their daily flow. This may work best for a senior or full-stack IC at a startup or on a small team.

- Windsurf → You value context retention and a workflow-first UI that narrates what’s happening. Strong pick for larger codebases where “not losing the thread” is key. I can see a staff or principal engineer, or a maintainer working in a large, long-lived repo, leveraging Windsurf.

- Copilot (VS Code) → You want trust and a more human-in-the-loop vibe. Company teams already in the Microsoft/GitHub ecosystem will feel right at home. I think this is a fit for a team lead or IC in a GitHub-standardized org who prefers trusted defaults and governance.

It’s important to note that this exercise comes with several limitations, including (but not limited to) the complexity of the builds, the test coverage, and the size of the codebase. That’s why I recommend experimenting with these tools yourself: you’ll want to choose the one that best matches your (or your team’s) needs.

Finally, the current status quo is ephemeral. Each IDE may build defensibility through how quickly it ships features. Cursor’s in-line edits, for example, were developed in-house, and that was clearly felt throughout this experiment. It will be interesting to watch the pace of feature releases from these three tools. When I talk with engineers, the consensus leans toward Cursor executing more strongly, while Copilot appears slower in comparison. Meanwhile, given the recent tumultuous Windsurf acqui-hire and the accompanying leadership changes, we may see a shift in its release cadence.

Closing thoughts: A missing spec-driven feature

Despite their capabilities, none of these IDEs fully embrace spec-driven development as a first-class citizen (unlike the recent release of Kiro).

While they can generate and work from specs, the workflow remains bolted-on rather than native — you’re still manually adding your specs, losing track of which code implements which requirements, and lacking formal verification that implementations match specifications. Memory and context management, though improving, remains ad hoc across all three; they remember recent edits but struggle to maintain architectural decisions or cross-session constraints.
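As a rough illustration of what "tracking which code implements which requirements" could look like, here is a minimal sketch of a traceability check. The requirement IDs, the file path, and the `untracedRequirements` helper are all hypothetical; the two requirement texts are borrowed from the brownfield feature request described earlier, and none of the three IDEs is claimed to work this way.

```typescript
// A requirement pulled from a spec file (IDs are made up for this sketch).
interface Requirement {
  id: string;
  text: string;
}

// A link recording that a source file implements a given requirement.
interface TraceLink {
  requirementId: string;
  file: string;
}

// Returns the IDs of requirements that no source file claims to implement.
function untracedRequirements(requirements: Requirement[], links: TraceLink[]): string[] {
  const covered = new Set(links.map((link) => link.requirementId));
  return requirements.filter((r) => !covered.has(r.id)).map((r) => r.id);
}

const requirements: Requirement[] = [
  { id: "REQ-1", text: "Tool detail page" },
  { id: "REQ-2", text: "Schema comparison across tools" },
];

// Hypothetical path; only REQ-1 has linked code so far.
const links: TraceLink[] = [
  { requirementId: "REQ-1", file: "src/pages/ToolDetail.tsx" },
];

// Logs the uncovered IDs; here only REQ-2 lacks a link.
console.log(untracedRequirements(requirements, links));
```

A check like this only proves that a link exists, not that the linked code actually satisfies the requirement, which is the harder verification gap described above.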

Test and mock generation quality varies wildly depending on how you prompt, with no systematic approach to coverage or edge cases. The gaps become even more glaring: no audit trails for AI-generated code changes, absent cost attribution per dev or project, and no integration with existing governance workflows.

These tools lack team-wide consistency enforcement — each developer’s AI assistant learns different patterns, creating style drift across the codebase. There’s no way to enforce company-specific architectural patterns, security requirements, or compliance rules at the AI level.

This points to a non-trivial opportunity for next-gen IDEs where specs aren’t just documentation but executable contracts, where AI agents understand and preserve organizational standards, and where generated code comes with provenance and accountability. If you are interested in learning more about the projects built around spec-driven development, you can find them on the Landscape, the guide to the AI development ecosystem.

Originally published at https://ainativedev.io.