Introduction

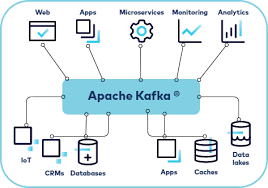

Apache Kafka is an open-source, distributed event streaming platform designed for high-performance data pipelines, streaming analytics, and data integration. Think of it as a high-speed message hub for your data. It lets applications publish, store, and subscribe to streams of records in real time.

Key concepts in Kafka

1. Producer

An application that sends messages to Kafka topics.

2. Consumer

An application that reads messages from Kafka topics.

3. Topic

A category or feed name to which records are sent. Think of this as a channel.

4. Broker

A Kafka server. Multiple brokers form a Kafka cluster.

5. Kafka Cluster

A group of Kafka brokers working together.
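To make these roles concrete, here is a minimal in-memory sketch of how a producer, a topic, a broker, and a consumer relate to each other. It is a toy model, not the Kafka client API: the names `ToyBroker`, `ToyProducer`, `ToyConsumer` and the `orders` topic are all hypothetical, and a real cluster would spread the work across several brokers.

```python
from collections import defaultdict


class ToyBroker:
    """In-memory stand-in for a single broker (hypothetical, not a real Kafka API)."""

    def __init__(self):
        # Each topic is modelled as an append-only list of records.
        self.topics = defaultdict(list)

    def append(self, topic, record):
        """Store a record and return its position (offset) in the topic."""
        self.topics[topic].append(record)
        return len(self.topics[topic]) - 1

    def read(self, topic, offset):
        """Return every record in the topic from the given offset onward."""
        return self.topics[topic][offset:]


class ToyProducer:
    """Sends records to a topic on the broker."""

    def __init__(self, broker):
        self.broker = broker

    def send(self, topic, record):
        return self.broker.append(topic, record)


class ToyConsumer:
    """Reads records from one topic and remembers how far it has read."""

    def __init__(self, broker, topic):
        self.broker = broker
        self.topic = topic
        self.offset = 0  # next position this consumer will read

    def poll(self):
        records = self.broker.read(self.topic, self.offset)
        self.offset += len(records)
        return records


broker = ToyBroker()
producer = ToyProducer(broker)
consumer = ToyConsumer(broker, "orders")

producer.send("orders", "order-1 created")
producer.send("orders", "order-2 created")

first_poll = consumer.poll()   # both records sent so far
second_poll = consumer.poll()  # nothing new since the last poll
print(first_poll, second_poll)
```

Note that reading does not remove anything: the records stay stored in the topic, and the consumer only advances its own position.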

Kafka use case

Imagine an e-commerce platform:

- Producers: checkout service, inventory services, payment gateway.
- Kafka: handles all events.
- Consumers: analytics dashboards, fraud detection systems, and email notifications.
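The flow in this scenario can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the event names, the `amount` field, and the fraud threshold of 1000 are invented for the example, and a plain list stands in for Kafka itself.

```python
# A plain list stands in for Kafka's event log (assumption for illustration).
events = []


def publish(source, event, amount=0):
    """Producer side: append an event to the shared log."""
    events.append({"source": source, "event": event, "amount": amount})


# Producers: checkout service, inventory service, payment gateway.
publish("checkout", "order_placed", 120)
publish("inventory", "stock_reserved")
publish("payment", "payment_captured", 5000)


# Consumers: each one reads the same log independently and keeps what it needs.
def analytics(log):
    """Count all events, as a dashboard might."""
    return len(log)


def fraud_detection(log, limit=1000):
    """Flag unusually large payments; the limit is an arbitrary example value."""
    return [e for e in log if e["event"] == "payment_captured" and e["amount"] > limit]


def email_notifications(log):
    """Build one confirmation message per placed order."""
    return [f"Order confirmation: total {e['amount']}" for e in log if e["event"] == "order_placed"]


event_count = analytics(events)
flagged = fraud_detection(events)
emails = email_notifications(events)
print(event_count, flagged, emails)
```

The point of the sketch is that producers never call the consumers directly. They only publish events, and each consumer decides on its own which events matter to it.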

Conclusion

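Apache Kafka serves as a backbone for real-time data streaming: producers publish events to topics, brokers store them, and consumers read them independently.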