You’ve built an amazing AI voicebot with GPT-4 and Whisper. It works perfectly in testing. Then you plug it into your production VoIP system and:

❌ 3-second delays between responses

❌ Calls drop at 50+ concurrent sessions

❌ Audio quality issues during AI processing

The voicebot isn’t broken. Your VoIP infrastructure is.

Why Legacy Systems Fail with Voicebots

Modern AI voicebots need:

- <200ms latency for natural conversations

- Real-time bidirectional streaming (not traditional IVR)

- Voicebot connectors to bridge VoIP ↔ AI APIs

- Scalable architecture for concurrent AI sessions
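
One way to make the latency requirement concrete is to treat it as a budget split across pipeline stages. Here is a minimal Python sketch. The stage names and millisecond figures are illustrative placeholders, not measurements from any real deployment, and `check_budget` is a hypothetical helper:

```python
from dataclasses import dataclass

# Target from the list above: under 200 ms for natural conversation.
LATENCY_BUDGET_MS = 200


@dataclass(frozen=True)
class Stage:
    name: str
    latency_ms: float


def check_budget(stages, budget_ms=LATENCY_BUDGET_MS):
    """Return (total latency, remaining headroom, slowest stage)."""
    total = sum(s.latency_ms for s in stages)
    slowest = max(stages, key=lambda s: s.latency_ms)
    return total, budget_ms - total, slowest


# Hypothetical per-stage numbers, for illustration only.
pipeline = [
    Stage("network + SIP/RTP transport", 40),
    Stage("speech-to-text", 80),
    Stage("LLM first token", 120),
    Stage("text-to-speech first audio", 60),
]

total, headroom, slowest = check_budget(pipeline)
print(f"total={total} ms, headroom={headroom} ms, slowest={slowest.name}")
```

With these placeholder numbers the total comes to 300 ms, which is 100 ms over budget. Negative headroom like this tells you which stage to attack first.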

Your 10-year-old FreeSWITCH setup? It was configured for basic call routing, not real-time AI.

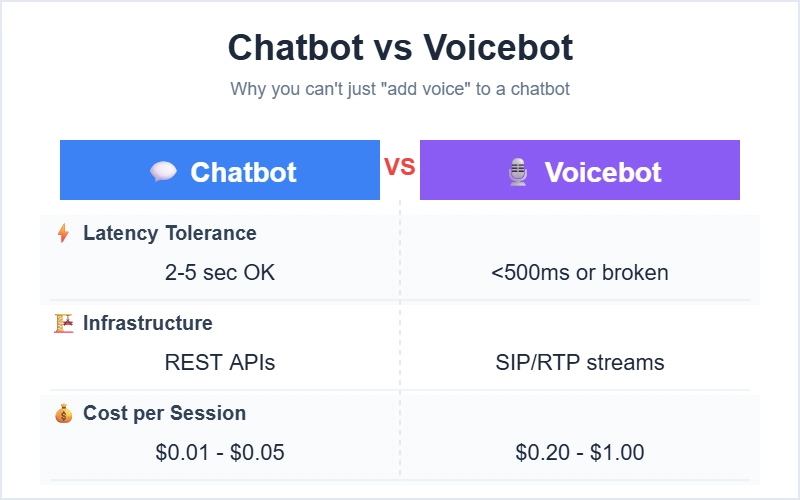

Chatbot ≠ Voicebot

What “Voicebot-Ready” VoIP Looks Like

Core components:

Caller → SIP Gateway → Voicebot Connector → AI APIs

The voicebot connector handles:

- Audio processing

- Session management

- Fallback logic
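
The fallback logic in the connector can be sketched as "try each AI provider in order, with a timeout, and never leave the caller in silence." This is a rough Python sketch under stated assumptions: `ask_with_fallback`, `slow_primary`, and `healthy_secondary` are hypothetical names, and the two provider functions stand in for real AI API clients:

```python
import asyncio


async def ask_with_fallback(providers, prompt, timeout_s=0.3,
                            fallback_reply="Sorry, could you repeat that?"):
    """Try each provider in order; return (provider_name, reply).

    `providers` is a list of (name, async_callable) pairs. A provider that
    times out or raises ConnectionError is skipped. If all of them fail,
    a canned reply is returned so the caller never hears dead air.
    """
    for name, call in providers:
        try:
            reply = await asyncio.wait_for(call(prompt), timeout=timeout_s)
            return name, reply
        except (asyncio.TimeoutError, ConnectionError):
            continue  # move on to the next provider
    return "canned", fallback_reply


# Hypothetical stand-ins for real AI API clients.
async def slow_primary(prompt):
    await asyncio.sleep(1.0)  # simulates a stalled provider
    return "primary: " + prompt


async def healthy_secondary(prompt):
    return "secondary: " + prompt


result = asyncio.run(
    ask_with_fallback(
        [("primary", slow_primary), ("secondary", healthy_secondary)],
        "hello",
        timeout_s=0.05,
    )
)
print(result)
```

The per-provider timeout matters more than the retry itself: a stalled provider that is allowed to hang consumes the whole latency budget before the fallback even starts.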

Modern stack:

- Kamailio for SIP routing (10K+ calls/sec)

- FreeSWITCH for media processing

- Custom connector (Node.js/Python microservice)

- Cloud AI (Azure Speech, OpenAI, Google)

- Redis for session state
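
To illustrate the "Redis for session state" piece, here is a minimal Python sketch of a per-call session store. The `SessionStore` class and the `voicebot:session:` key layout are assumptions made for illustration. The `FakeRedis` class is an in-memory stand-in so the example runs without a server. In a real deployment you would pass a redis-py client created with `decode_responses=True`, which offers the same `hset`, `hgetall`, `expire`, and `delete` methods:

```python
class SessionStore:
    """Keeps per-call state in a Redis hash with a TTL."""

    def __init__(self, client, ttl_s=3600):
        self.client = client
        self.ttl_s = ttl_s

    def _key(self, call_id):
        return f"voicebot:session:{call_id}"  # hypothetical key layout

    def save(self, call_id, **fields):
        key = self._key(call_id)
        # Redis hashes store strings, so values are stringified here.
        self.client.hset(key, mapping={k: str(v) for k, v in fields.items()})
        self.client.expire(key, self.ttl_s)  # abandoned calls expire on their own

    def load(self, call_id):
        return self.client.hgetall(self._key(call_id))

    def end(self, call_id):
        self.client.delete(self._key(call_id))


class FakeRedis:
    """In-memory stand-in so the sketch runs without a Redis server."""

    def __init__(self):
        self.data = {}

    def hset(self, name, mapping):
        self.data.setdefault(name, {}).update(mapping)

    def hgetall(self, name):
        return dict(self.data.get(name, {}))

    def expire(self, name, seconds):
        pass  # TTL is not simulated in this stand-in

    def delete(self, name):
        self.data.pop(name, None)


store = SessionStore(FakeRedis())
store.save("call-42", turn=1, provider="primary")
store.save("call-42", turn=2)  # later turns update the same hash
print(store.load("call-42"))
```

Keeping state outside the connector process is what lets any connector instance pick up any call, which is the precondition for scaling horizontally.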

Results:

✅ 2000+ concurrent voicebot sessions

✅ Sub-300ms end-to-end latency

✅ Horizontal scaling

✅ AI provider failover

Upgrade Signs

Time to modernize if any of these apply:

- You’re planning an AI voicebot integration

- Capacity maxes out below 500 concurrent calls

- The platform has no API support for integrations

- You’re running end-of-life software versions

Quick Migration Path

- Phase 1: Add voicebot connector (10% traffic test)

- Phase 2: Upgrade media layer

- Phase 3: New SIP routing (parallel run)

- Phase 4: Full migration

Timeline: 3-6 months
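
The 10% traffic test in Phase 1 needs a routing decision that is stable per call. A minimal Python sketch, assuming each call carries a unique ID string. The function name `routes_to_voicebot` is hypothetical, and in production the same decision would more likely live in the SIP routing layer:

```python
import hashlib


def routes_to_voicebot(call_id: str, percent: int = 10) -> bool:
    """Deterministically send roughly `percent`% of calls to the new connector."""
    digest = hashlib.sha256(call_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return bucket < percent


# Synthetic call IDs, for illustration only.
sample = [f"call-{i}" for i in range(10_000)]
share = sum(routes_to_voicebot(c) for c in sample) / len(sample)
print(f"{share:.1%} of sample calls routed to the voicebot connector")
```

Hashing the call ID rather than drawing a random number means a retried or re-INVITEd call lands on the same side of the split, and raising `percent` only ever adds calls to the voicebot path.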

If you’re building voicebots in 2025, your VoIP infrastructure isn’t just infrastructure—it’s part of your AI product experience.

Building AI voice solutions? What challenges have you faced? 👇