This is the third article in our series, where we design a simple order solution for a hypothetical company called Simply Order. The company expects high traffic and needs a resilient, scalable, and distributed order system.

In the previous lessons, we built the core services (Order, Payment, and Inventory) and discussed possible ways of implementing distributed transactions across multiple services. Then we designed and implemented our workflow.

In this lesson and the next, we will add data persistence to our application. We’ll start with the Order Service, using PostgreSQL to persist our orders.

Before creating any tables, let’s recall what the Order Service currently looks like:


```java
public UUID createOrder(CreateOrderRequest request) {
    UUID orderId = UUID.randomUUID();

    // Compute the order total and map request items to domain items
    BigDecimal total = BigDecimal.ZERO;
    List<Order.Item> items = new LinkedList<>();
    for (var it : request.items()) {
        total = total.add(it.price().multiply(BigDecimal.valueOf(it.qty())));
        items.add(new Order.Item(it.sku(), it.qty(), it.price()));
    }

    Order order = new Order(orderId, request.customerId(), Order.Status.OPEN, total, items);

    // For now the order is only kept in memory (`orders` is an in-memory map)
    orders.put(orderId, order);

    // Start the orchestrated order saga in Temporal
    OrderWorkflow wf = client.newWorkflowStub(
            OrderWorkflow.class,
            WorkflowOptions.newBuilder()
                    .setTaskQueue("order-task-queue")
                    .setWorkflowId("order-" + orderId) // This is the saga ID
                    .build());

    WorkflowClient.start(wf::placeOrder, OrderWorkflow.Input.from(order, request));

    return orderId;
}
```

The service builds an ‘Order’ object, keeps it in memory, and then starts the orchestrated order transaction as a Temporal workflow.

Next, we will change the order-creation step so that the order is stored in the database instead of in memory.

⚠️ Dual-write inconsistency

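Once the order goes to the database, `createOrder` writes to two independent systems: the database and the Temporal broker. This dual write is not atomic, so one write can succeed while the other fails.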

This can lead to inconsistent states:

- DB OK, broker fails: the order is created in the database (status = OPEN), but no workflow is started, so the other services never learn about it → stale order.

- DB fails, broker OK: a workflow is started, but the order is never persisted (for example, the database commit fails after the workflow was started) → a ghost order exists in the system.
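To make the first failure mode concrete, here is a minimal sketch of a naive dual write. It assumes a hypothetical `WorkflowStarter` interface standing in for the Temporal call and a plain `HashMap` standing in for the PostgreSQL orders table; neither is part of the actual Simply Order code.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Hypothetical stand-in for the Temporal call; it can fail independently of the DB.
interface WorkflowStarter {
    void start(UUID orderId);
}

// Naive dual write: two independent systems, no shared transaction.
class NaiveOrderService {
    final Map<UUID, String> ordersTable = new HashMap<>(); // stands in for PostgreSQL
    private final WorkflowStarter starter;

    NaiveOrderService(WorkflowStarter starter) {
        this.starter = starter;
    }

    UUID createOrder() {
        UUID orderId = UUID.randomUUID();
        ordersTable.put(orderId, "OPEN"); // write #1 succeeds and stays
        starter.start(orderId);           // write #2 can still fail afterwards
        return orderId;
    }
}
```

If the starter throws, the caller sees an error, yet the order row is already in the table: exactly the stale order described above. Swapping the two calls only trades it for the ghost-order case.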

🤔 Using a 2PC (Two-Phase Commit) transaction could solve this, but it’s slow, complex, and impractical for microservices.

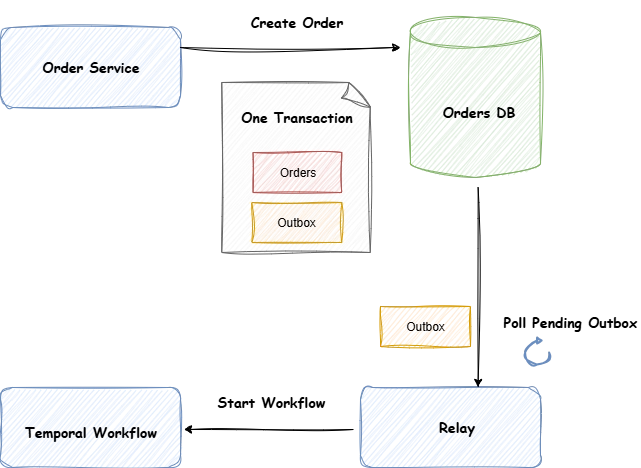

Outbox Pattern

When we face such a problem, the Outbox pattern can be our savior.

It follows a very simple idea:

- When we create the order in the database, we also insert a record for the corresponding order event into an outbox table.

- Both records are committed in one transaction — they either succeed or fail together, ensuring consistency.

- A separate relay process then picks up new outbox records and starts the workflow, independently of the request that created the order.
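The transactional write at the heart of the pattern can be sketched as follows. This is a toy under stated assumptions: a hypothetical `InMemoryDb` whose `inTransaction` method applies all staged writes only if the callback completes stands in for a single PostgreSQL transaction, and `OutboxRecord`, `ORDER_CREATED`, and `PENDING` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.UUID;
import java.util.function.Consumer;

// Hypothetical outbox row: "start the order workflow for this order".
record OutboxRecord(UUID id, UUID orderId, String eventType, String status) {}

// Toy stand-in for PostgreSQL: writes staged in a Tx become visible only on commit.
class InMemoryDb {
    final Map<UUID, String> orders = new HashMap<>();
    final List<OutboxRecord> outbox = new ArrayList<>();

    class Tx {
        private final Map<UUID, String> stagedOrders = new HashMap<>();
        private final List<OutboxRecord> stagedOutbox = new ArrayList<>();

        void insertOrder(UUID id, String status) { stagedOrders.put(id, status); }
        void insertOutbox(OutboxRecord r) { stagedOutbox.add(r); }
    }

    // Runs `work`; if it throws, nothing is applied (rollback).
    // If it returns, both tables are updated together (commit).
    synchronized void inTransaction(Consumer<Tx> work) {
        Tx tx = new Tx();
        work.accept(tx);
        orders.putAll(tx.stagedOrders);
        outbox.addAll(tx.stagedOutbox);
    }
}

class OutboxOrderService {
    private final InMemoryDb db;

    OutboxOrderService(InMemoryDb db) { this.db = db; }

    UUID createOrder() {
        UUID orderId = UUID.randomUUID();
        // Order row and outbox row are written in ONE transaction.
        db.inTransaction(tx -> {
            tx.insertOrder(orderId, "OPEN");
            tx.insertOutbox(new OutboxRecord(UUID.randomUUID(), orderId, "ORDER_CREATED", "PENDING"));
        });
        // No Temporal call here: the relay starts the workflow later.
        return orderId;
    }
}
```

Note that `createOrder` no longer talks to Temporal at all; starting the workflow becomes the relay's job.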

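This guarantees that the workflow for every committed order will eventually be started, even if the broker was temporarily unavailable. The guarantee is at-least-once: after a failure, the relay may try to start the same workflow again.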

Outbox flavors

There are two main ways to implement the Outbox pattern:

Outbox with Polling Relay

Flow

1- The application writes the business record (i.e., Order) and the outbox record in one transaction.

2- A poller/relay (scheduled job) scans the outbox table for new records.

3- The relay starts the workflow and marks the record as SENT.
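Steps 2 and 3 can be sketched as a single polling pass. This is a minimal in-memory illustration under assumptions: `OutboxRow` and its `PENDING`/`SENT` statuses are hypothetical, the list stands in for the outbox table, and `WorkflowStarter` is a hypothetical wrapper around the `WorkflowClient.start(...)` call shown in `createOrder`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.UUID;

// Hypothetical outbox row; status moves from PENDING to SENT.
class OutboxRow {
    final UUID id;
    final UUID orderId;
    String status = "PENDING";

    OutboxRow(UUID id, UUID orderId) {
        this.id = id;
        this.orderId = orderId;
    }
}

// Hypothetical wrapper around the Temporal workflow start.
interface WorkflowStarter {
    void start(UUID orderId) throws Exception;
}

class OutboxRelay {
    private final List<OutboxRow> outbox;
    private final WorkflowStarter starter;

    OutboxRelay(List<OutboxRow> outbox, WorkflowStarter starter) {
        this.outbox = outbox;
        this.starter = starter;
    }

    // One polling pass; a scheduled job would call this periodically.
    // Returns how many rows were sent in this pass.
    int pollOnce() {
        int sent = 0;
        for (OutboxRow row : outbox) {
            if (!row.status.equals("PENDING")) continue; // step 2: only new records
            try {
                starter.start(row.orderId);              // step 3: start the workflow...
                row.status = "SENT";                     // ...then mark the record SENT
                sent++;
            } catch (Exception e) {
                // Leave the row PENDING so the next pass retries it.
            }
        }
        return sent;
    }
}
```

A failed start leaves the row `PENDING`, so the next scheduled pass retries it. If the relay crashes after starting the workflow but before marking the row `SENT`, the next pass starts it again; the deterministic workflow ID from `createOrder` (`"order-" + orderId`) helps here, because Temporal rejects a second start with the same ID while the first execution is still running.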

Pros

- Simple mechanism with no additional infrastructure layer.

Cons

- Polling adds load to the database.

- You own the relay, so you must handle retries, backoff, and failures.

- The database must support transactions.
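Owning the relay means owning its retry policy. One common choice is exponential backoff with a cap; the sketch below uses a hypothetical `Backoff` class with `base` and `max` durations, and it is only one possible policy, not something this series prescribes.

```java
import java.time.Duration;

// Hypothetical retry policy for the relay: exponential backoff with an upper bound.
final class Backoff {
    private final Duration base;
    private final Duration max;

    Backoff(Duration base, Duration max) {
        this.base = base;
        this.max = max;
    }

    // Delay before retry number `attempt` (0-based): base * 2^attempt, capped at max.
    Duration delayFor(int attempt) {
        if (attempt < 0) {
            throw new IllegalArgumentException("attempt must be >= 0");
        }
        long factor = 1L << Math.min(attempt, 30); // bound the exponent to avoid shift overflow
        long millis;
        try {
            millis = Math.multiplyExact(base.toMillis(), factor);
        } catch (ArithmeticException overflow) {
            millis = Long.MAX_VALUE;               // saturate; the cap below applies anyway
        }
        return Duration.ofMillis(Math.min(millis, max.toMillis()));
    }
}
```

With a 1-second base and a 1-minute cap, the delays grow 1s, 2s, 4s, 8s, ... until they level off at one minute. A relay could, for example, keep an attempt counter per outbox row (a hypothetical extra column) and skip rows whose next retry time has not arrived yet.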

Outbox with CDC/Log Tailing

CDC (Change Data Capture): a technique for detecting row-level changes in a database (inserts, updates, deletes) and streaming them to other systems in near real time, without touching application code. It is often implemented by reading the database’s transaction log.

Transaction log: an append-only record of every database change (insert, update, delete), in the exact order the changes occurred, which allows the database to replay, undo, replicate, or stream changes.

Flow

1- The application writes the business record (i.e., the Order) and the outbox record in one database transaction.

2- A CDC tool (e.g., Debezium) tails the database’s transaction log and streams outbox inserts to a streaming platform such as Kafka.

3- A consumer listens to these change events and starts the workflow.
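On the consuming side, step 3 boils down to filtering the change stream for new outbox rows. The sketch assumes a simplified, hypothetical `ChangeEvent` shape (table name, operation, order ID); real CDC tools such as Debezium emit a richer envelope, and the Kafka consumer plumbing is left out. `WorkflowStarter` is a hypothetical wrapper around the Temporal call.

```java
import java.util.UUID;

// Simplified change event (hypothetical shape, not Debezium's actual envelope).
record ChangeEvent(String table, String op, UUID orderId) {}

// Hypothetical wrapper around the Temporal workflow start.
interface WorkflowStarter {
    void start(UUID orderId);
}

class OutboxChangeConsumer {
    private final WorkflowStarter starter;

    OutboxChangeConsumer(WorkflowStarter starter) {
        this.starter = starter;
    }

    // Called for every change read from the stream (e.g., a Kafka topic).
    // Returns true if the event started a workflow.
    boolean onChange(ChangeEvent event) {
        // Only new outbox rows matter; other tables and updates are ignored.
        if (!event.table().equals("outbox") || !event.op().equals("INSERT")) {
            return false;
        }
        starter.start(event.orderId()); // step 3: start the workflow
        return true;
    }
}
```

A CDC pipeline can redeliver events after a failure; the deterministic workflow ID used in `createOrder` (`"order-" + orderId`) lets Temporal reject a duplicate start while the first execution is still running.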

Pros

- No polling overhead on the DB.

- Very low latency and operationally robust once set up.

Cons

- Requires CDC infrastructure (Kafka Connect, Debezium, connectors, monitoring, etc.).

Our Approach

CDC is a great fit when you already have a streaming infrastructure (e.g., Kafka) or when you need near real-time event processing.

Neither applies to Simply Order yet.

So, we’ll implement Outbox with Polling Relay to handle this consistency problem between the database and the Temporal workflow.

Wrap-up

In this lesson, we explored one of the most important reliability patterns in distributed systems — the Outbox pattern.

We started with the dual-write problem and saw why direct DB + broker writes can lead to inconsistent states.

Then, we learned how the Outbox pattern makes the order write and its event record atomic, and guarantees that the event is eventually published, using either a polling relay or CDC/log tailing.

In our Simply Order project, we’ll go with the polling relay implementation, since it’s simpler and requires no additional infrastructure.

➡️ In the next lesson, we’ll dive into the actual implementation:

- Create the outbox table

- Persist events along with orders in one transaction

- Build a simple polling relay to trigger the Temporal workflow reliably

Stay tuned — that’s where we’ll make reliability real. 💪