This is a part of a series that explores practical patterns to protect your system:

- Concurrency and Row Versioning – Part 1

- Concurrency and Transactional Row Versioning – Part 2 ⭐

- Concurrency and API Resource Locks – Part 3

- Concurrency and Queues – Part 4

Why is a transaction needed?

In some cases, you need to modify several resources within a single operation. A transaction ensures that these updates are treated as one atomic unit — either all changes succeed together, or none are applied (they are rolled back).

SQL transaction

Most modern SQL servers support transactions.

BEGIN;

# Step 1 - read

SELECT * FROM users where id = 42

SELECT * FROM subscriptions where user_id = 42

# Step 2 - your logic

# Step 3 - modify resources

UPDATE users

SET name="Albert", version = version + 1

WHERE id = 42 AND version = 7;

UPDATE subscriptions

SET plan = 'pro', version = version + 1

WHERE id = $SUBSCRIPTION_ID AND version = 3;

COMMIT;

The user row modification will be automatically rolled back if the subscription update fails, and vice versa.

Is SQL transaction heavy?

A decade ago, SQL transactions would immediately downgrade the server’s performance. The highest isolation level, SERIALIZABLE, provides consistent checks for concurrent reads and writes but adds locks on rows and tables. Table locks are cumbersome, especially for READ locks, when other concurrent fetch queries should wait until the table is unlocked.

Today, SQL servers represent the art of engineering and have gained huge performance in all aspects.

First, databases improved their algorithms to use a snapshot of data per transaction based on the “Multiversion Concurrency Control (MVCC)” idea introduced in 1981 by Philip A. Bernstein and Nathan Goodman. All queries and modifications run against the database snapshot inside the transaction (requires DB snapshot isolation setting). Concurrent requests do not introduce changed records, and no locks are required.

Second, in 2008, Cahill, Röhm, and Fekete published a revolutionary idea in the article “Serializable isolation for snapshot databases.”. It is possible to build an execution graph of all concurrent transactions. If there is a cycle in the graph, there is a resource conflict, and transactions should be cancelled except for one. The beauty is that there is no need for heavy locks.

Moreover, the algorithm provides complete Serializable Snapshot Isolation (SSI). For example, one transaction calculates the user’s credit balance and then wants to write a fact of a 50 USD item purchase. Meanwhile, the second transaction adds a new ledger record of -1000 USD in the middle, making the balance and new payment unavailable. For decades, this kind of scenario was almost impossible to track in databases. A new record was added to or deleted from the table you recently queried in a transaction.

BEGIN;

# Step 1

SELECT SUM(value) as balance FROM ledger_entries

where user_id = 42;

# Concurrent Step 2 by another transaction

# INSERT INTO ledger_entries VALUES(-1000, 'USD');

# Step 3 - add purchase

# Does `balance` have the right value?

IF balance >= 50 THEN

INSERT INTO ledger_entries VALUES(-50, 'USD');

# INSERT INTO purchased ...

END IF;

COMMIT;

This is no longer the issue with modern SQL servers implementing Cahill, Röhm, and Fekete’s algorithm. With special settings, the database tracks whether the table query result SELECT SUM(value) as balance FROM ledger_entries where user_id = 42 has been changed by another transaction during the execution. It rolls it back if needed without any lock mechanism.

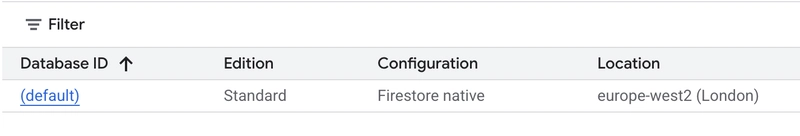

Firestore DB transaction

The SQL algorithm performs efficiently on a single server. However, it does not scale effectively in highly distributed databases like Firestore.

Firestore supports transaction isolation, but only at some level. It cannot handle the new row anomaly example. This is still a fascinating fact because document databases were created without transactions in mind: store unstructured data per document and support distributed storage.

Let us review a Firestore transaction example.

await db.runTransaction(async t => {

// Step 1 - read

const userRef = db.collection('users').doc('42');

const userDoc = await t.get(userRef);

const subscriptionRef = db.collection('subscriptions').doc(userDoc.data.subscriptionId);

const subscriptionDoc = await t.get(subscriptionRef);

const ledgerEntries = await db.collection('ledger').where('status', '==', 'success').get();

// Step 2

// your logic

// Step 3

await t.update(userRef, {

name: 'Albert'

});

await t.update(subscriptionRef, {

plan: 'pro'

});

});

First, Firestore works against a snapshot per transaction, implementing the MVCC principle.

Second, it automatically tracks all fetched document timestamps (versions) against concurrent changes. Similar to what we did in Concurrency and Row Versioning – Part 1 with lastUpdateTime, it just works out of the box, so no need to specify it explicitly. In this example, we initially fetched userDoc, subscriptionRef, and ledgerEntries. Firestore will check if another update has changed them concurrently during the transaction application. This is also true for all fetched ledgerEntries; even though we do not modify them in the transaction. Firetore, on the other hand, as mentioned, cannot track transactionally new inserts or deletes.

There is the main limitation for a Firestore transaction code: all fetch operations should go before modifications. The following is incorrect:

await db.runTransaction(async t => {

// ❌ wrong, modification should go at the end of Firestore transactions

await t.update(subscriptionRef, {

plan: 'pro'

}

// ❌ wrong, read operations should go before modifications

const doc = await db.collection('users').doc('42').get();

});

Does Firestore use locks in transactions?

Firebase has two common scenarios in mind.

The first occurs when users update the Firestore DB directly from devices via mobile and web applications. Users are expected to likely update only their data and rarely have concurrent conflicts. For mobile clients, Firestore uses a lockless optimistic concurrency approach. All fetched row versions are tracked for changes. Locking documents in a high-latency environment would cause too many data contention failures.

Another scenario is when a backend updates the database. In this case, transaction conflicts can occur very often. It is unwise to make a lot of retries with row versioning. The standard mode (default) uses a pessimistic concurrency approach with read document locks.

When a transaction locks a document, it prevents other transactions, batched writes, and regular (non-transactional) writes from modifying that document. Any concurrent write operations must wait in a queue until the transaction releases its lock. If we do not update the same document too frequently, this approach drastically eliminates the number of transaction retries compared to optimistic concurrency.

A transaction is a powerful concept that should be used carefully.

In the following article, we consider a solution for REST APIs to protect third-party service states against concurrent requests.