Docling: The Secret to High-Fidelity RAG Data

🚀 Introduction: The RAG Recipe for Success using OpenSearch and Docling

Retrieval-Augmented Generation (RAG) is transforming how Large Language Models (LLMs) access and utilize private or real-time data. But the quality of your LLM’s answer is only as good as the context it retrieves — and that starts with great document processing.

Many RAG pipelines fail because they treat all documents as simple text, blindly slicing pages into fixed-size chunks. This overlooks crucial structure (tables, headings, figures) that an LLM needs for grounded answers.

Enter Docling and OpenSearch. We’re going to show you how to use Docling to create structurally-aware, high-quality data and use OpenSearch’s hybrid search power to make your RAG pipeline smarter, more accurate, and production-ready.

Docling: The Secret to High-Fidelity RAG Data

Docling is a versatile open-source tool built specifically to parse, structure, and prepare complex documents (like PDFs, reports, and manuals) for RAG. It moves beyond simple text extraction to a rich, structured format.

Why Docling is a RAG Game-Changer:

- Structural Intelligence: Docling detects and preserves the document hierarchy: headings, tables, lists, and figures. This means an LLM gets chunks that are not just text but are annotated with their surrounding context.

- Hierarchical Chunking: Instead of one-size-fits-all, Docling enables hierarchical chunking. It can create chunks at multiple granularities: a full section, a subsection, or even a single table. This is critical for improving retrieval recall.

- Rich Metadata: It extracts and attaches rich metadata to every chunk, such as page numbers, section titles, and document IDs. This metadata is key for filtered search in OpenSearch, allowing your RAG system to be precise.
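To make the idea concrete, here is a minimal sketch in plain Python of heading-aware chunking with metadata. It is not Docling's implementation or API: the `Chunk` class and the `chunk_by_headings` function are hypothetical names, and the input is assumed to be Markdown-like text in which headings start with `#`.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    # Heading path for this chunk, outermost heading first (illustrative metadata).
    headings: list[str] = field(default_factory=list)


def chunk_by_headings(markdown: str) -> list[Chunk]:
    """Split Markdown-like text into one chunk per heading section."""
    chunks: list[Chunk] = []
    path: list[str] = []    # heading path of the section being read
    buffer: list[str] = []  # body lines collected for that section

    def flush() -> None:
        body = "\n".join(buffer).strip()
        if body:
            chunks.append(Chunk(text=body, headings=list(path)))
        buffer.clear()

    for line in markdown.splitlines():
        if line.startswith("#"):
            flush()
            level = len(line) - len(line.lstrip("#"))
            title = line.lstrip("#").strip()
            # Drop headings at the same or a deeper level, then push this one.
            del path[level - 1:]
            path.append(title)
        else:
            buffer.append(line)
    flush()
    return chunks


doc = "# Guide\nIntro text.\n## Setup\nInstall it.\n## Usage\nRun it.\n# FAQ\nNone yet."
for chunk in chunk_by_headings(doc):
    print(" > ".join(chunk.headings), "|", chunk.text)
```

Each chunk carries its heading path, so a retriever can filter or boost on section titles instead of relying on the chunk text alone.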

Docling Features as of 20 October 2025

- 🗂️ Parsing of multiple document formats incl. PDF, DOCX, PPTX, XLSX, HTML, WAV, MP3, VTT, images (PNG, TIFF, JPEG, …), and more

- 📑 Advanced PDF understanding incl. page layout, reading order, table structure, code, formulas, image classification, and more

- 🧬 Unified, expressive DoclingDocument representation format

- ↪️ Various export formats and options, including Markdown, HTML, DocTags and lossless JSON

- 🔒 Local execution capabilities for sensitive data and air-gapped environments

- 🤖 Plug-and-play integrations incl. LangChain, LlamaIndex, Crew AI & Haystack for agentic AI

- 🔍 Extensive OCR support for scanned PDFs and images

- 👓 Support of several Visual Language Models (GraniteDocling)

- 🎙️ Audio support with Automatic Speech Recognition (ASR) models

- 🔌 Connect to any agent using the MCP server

- 💻 Simple and convenient CLI

🔎 OpenSearch: The RAG Powerhouse

OpenSearch is a widely adopted, open-source search and analytics suite that shines as a vector database for RAG. It provides the performance, scalability, and, most importantly, the advanced search features necessary for production-grade AI.

Leveraging OpenSearch for Retrieval:

- Vector Store for Semantic Search (k-NN): OpenSearch efficiently stores the high-dimensional vectors (embeddings) generated from your Docling-processed chunks. Its native k-Nearest Neighbor (k-NN) support ensures lightning-fast semantic similarity search.

- Keyword Search (BM25): Alongside vectors, OpenSearch provides world-class traditional keyword search (BM25). This is still vital for finding specific terms, names, or codes that often get lost in vector space.

- Hybrid Search and RRF: OpenSearch also supports hybrid search. It runs the vector and keyword queries together, then merges the two result lists with a rank-fusion algorithm such as Reciprocal Rank Fusion (RRF) into a single re-ranked list of context documents. This raises the chance of retrieving all the relevant information.
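As an illustration of what rank fusion does, here is a small standalone sketch of Reciprocal Rank Fusion in plain Python. It is not OpenSearch's internal code: the function name and the document IDs are made up for the example, and the rank constant `k = 60` is assumed because it is a commonly used default.

```python
from collections import defaultdict


def reciprocal_rank_fusion(
    rankings: list[list[str]], k: int = 60
) -> list[tuple[str, float]]:
    """Fuse several ranked lists of document IDs into one scored list."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        # Ranks start at 1; each list contributes 1 / (k + rank) to a document.
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


keyword_hits = ["doc-a", "doc-b", "doc-c"]  # hypothetical BM25 ranking
vector_hits = ["doc-c", "doc-a", "doc-d"]   # hypothetical k-NN ranking

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
for doc_id, score in fused:
    print(f"{doc_id}: {score:.5f}")
```

Documents that appear high in both lists (here `doc-a` and `doc-c`) end up ahead of documents that only one retriever found, and no score normalization between BM25 and vector similarity is needed because only ranks are used.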

🧩 The Combined Pipeline: Docling + OpenSearch RAG

This is where the magic happens. The synergy between Docling and OpenSearch creates a robust RAG pipeline:

| Step | Component | Action | Benefit for RAG |
| --- | --- | --- | --- |
| **1. Data Preparation** | **Docling** | Parses documents, performs hierarchical chunking, and enriches chunks with structural metadata. | Creates smaller, context-rich chunks, avoiding the "lost in the middle" problem. |
| **2. Ingestion** | **OpenSearch Ingest Pipeline** | Ingests Docling's structured output, generates text embeddings, and indexes both the text/metadata and the vectors. | A single, scalable system stores both search types for hybrid retrieval. |
| **3. Retrieval** | **OpenSearch Hybrid Query** | Executes a simultaneous keyword and vector search over the index, then uses RRF to combine and re-rank the results. | **Maximum Relevance:** Catches both semantically similar context and exact keyword matches. |
| **4. Generation** | **LLM (via LlamaIndex/LangChain)** | Receives the user query and the highly-relevant, structured context from OpenSearch to generate an answer. | **High Accuracy & Grounding:** Provides a precise, verifiable answer based on the best possible context. |
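For step 3, the request that reaches OpenSearch has roughly the shape sketched below. This is a hand-built illustration of the `hybrid` query DSL, not the exact body that LlamaIndex generates: the helper name `build_hybrid_query` is hypothetical, and the field names `content` and `embedding` are assumed to match the `TEXT_FIELD` and `EMBED_FIELD` constants used later in this article.

```python
import json


def build_hybrid_query(
    query_text: str,
    query_vector: list[float],
    k: int = 5,
    text_field: str = "content",
    embed_field: str = "embedding",
) -> dict:
    """Build a search body that combines a BM25 match and a k-NN clause."""
    return {
        "size": k,
        "query": {
            "hybrid": {
                "queries": [
                    # Keyword (BM25) sub-query on the chunk text.
                    {"match": {text_field: {"query": query_text}}},
                    # Vector sub-query on the stored chunk embeddings.
                    {"knn": {embed_field: {"vector": query_vector, "k": k}}},
                ]
            }
        },
    }


# A toy three-dimensional vector stands in for a real query embedding.
body = build_hybrid_query("Does the project provide a Dockerfile?", [0.1, 0.2, 0.3], k=3)
print(json.dumps(body, indent=2))
```

A body like this would be sent to the index's `_search` endpoint with a search pipeline attached (for example via the `search_pipeline` query parameter), so that the two sub-query result lists are fused by the pipeline's processor.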

Implementation

The implementation below follows the example in the Docling project documentation and reproduces the logic and steps of its dedicated notebook. (A link to the original source is available in the “Links” section below.)

Excerpt from the original notebook:

This is a code recipe that uses OpenSearch, an open-source search and analytics tool, and the LlamaIndex framework to perform RAG over documents parsed by Docling.

In this notebook, we accomplish the following:

📚 Parse documents using Docling’s document conversion capabilities

🧩 Perform hierarchical chunking of the documents using Docling

🔢 Generate text embeddings on document chunks

🤖 Perform RAG using OpenSearch and the LlamaIndex framework

🛠️ Leverage the transformation and structure capabilities of Docling documents for RAG

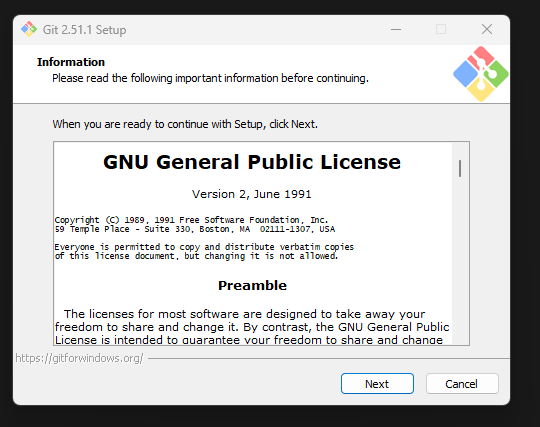

👣 The steps below turn the notebook into a standalone Python application and show the changes I had to make to get it working in my environment.

The environment elements

- Ollama

- Podman

- Embedding model: granite-embedding:latest — 62MB

- LLM: granite4:latest — 2.1GB

The code

- As usual a virtual environment is recommended 🈸

curl -LsSf https://astral.sh/uv/install.sh | sh

uv venv

source .venv/bin/activate

uv pip install notebook ipywidgets docling llama-index-readers-file llama-index-readers-docling llama-index-node-parser-docling llama-index-vector-stores-opensearch llama-index-embeddings-ollama llama-index-llms-ollama

- A container image of OpenSearch is needed and should be pulled. I used Podman Desktop and Podman Engine.

podman run \
  -it \
  --pull always \
  -p 9200:9200 \
  -p 9600:9600 \
  -e "discovery.type=single-node" \
  -e DISABLE_INSTALL_DEMO_CONFIG=true \
  -e DISABLE_SECURITY_PLUGIN=true \
  --name opensearch-node \
  -d opensearchproject/opensearch:2.19.3

- And the Ollama elements 🔩

ollama pull granite-embedding

ollama run granite4

⚠️ Troubleshooting note: to make the code work locally, I first had to download the Docling models. I saved them to “./models” inside my code folder.

- The code adapted from the original Notebook

import os
import logging
import time
from pathlib import Path
import gc
from typing import List

import requests
from llama_index.core import SimpleDirectoryReader, StorageContext, VectorStoreIndex
from llama_index.core.schema import TransformComponent
from llama_index.core.vector_stores.types import VectorStoreQueryMode
from llama_index.embeddings.ollama import OllamaEmbedding
from llama_index.llms.ollama import Ollama
from llama_index.node_parser.docling import DoclingNodeParser
from llama_index.readers.docling import DoclingReader
from llama_index.vector_stores.opensearch import (
    OpensearchVectorClient,
    OpensearchVectorStore,
)
from docling_core.transforms.chunker import HierarchicalChunker
from docling_core.transforms.chunker.hierarchical_chunker import (
    ChunkingDocSerializer,
    ChunkingSerializerProvider,
)
from docling_core.transforms.serializer.markdown import MarkdownTableSerializer
from rich.console import Console

# Point Docling at the locally downloaded models.
LOCAL_MODELS_PATH = "./models"
DOCLING_ARTIFACTS_ENV_VAR = 'DOCLING_SERVE_ARTIFACTS_PATH'
os.environ[DOCLING_ARTIFACTS_ENV_VAR] = LOCAL_MODELS_PATH
print(f"Set environment variable {DOCLING_ARTIFACTS_ENV_VAR} to: {LOCAL_MODELS_PATH}")

# ----------------------------------------------------------------------
logging.getLogger().setLevel(logging.WARNING)
console = Console(width=88)

OPENSEARCH_ENDPOINT = "http://localhost:9200"
EMBED_MODEL_NAME = "granite-embedding:latest"
GEN_MODEL_NAME = "granite4:latest"
INPUT_DIR = Path("./input/pdfs")
OUTPUT_DIR = Path("./output")
TEXT_FIELD = "content"
EMBED_FIELD = "embedding"

class MetadataTransform(TransformComponent):
    """
    Ensures Docling-generated metadata (like large binary hashes) are stringified
    to prevent out-of-range integer errors during indexing in OpenSearch.
    """

    def __call__(self, nodes, **kwargs):
        for node in nodes:
            binary_hash = node.metadata.get("origin", {}).get("binary_hash", None)
            if binary_hash is not None:
                node.metadata["origin"]["binary_hash"] = str(binary_hash)
        return nodes

class MDTableSerializerProvider(ChunkingSerializerProvider):
    """Custom serializer provider to render tables as Markdown."""

    def get_serializer(self, doc):
        return ChunkingDocSerializer(
            doc=doc,
            table_serializer=MarkdownTableSerializer(),
        )


# Transformations applied after chunking, regardless of the chunker used.
CHUNK_TRANSFORMATIONS_BASE: List[TransformComponent] = [MetadataTransform()]

def wait_for_opensearch(endpoint, max_retries=10, delay=5):
    """Waits until OpenSearch is available."""
    print("Waiting for OpenSearch instance to be ready...")
    for i in range(max_retries):
        try:
            response = requests.get(endpoint, timeout=5)
            response.raise_for_status()
            console.print(f"OpenSearch is running: [green]{response.json().get('tagline')}[/green]")
            return
        except requests.exceptions.RequestException:
            # RequestException also covers timeouts, which can happen while the node is starting.
            print(f"Attempt {i+1}/{max_retries}: OpenSearch not ready, waiting {delay}s...")
            time.sleep(delay)
    raise ConnectionError("Failed to connect to OpenSearch after multiple retries.")

def clear_index(client, index_name):
    """Utility to clear an OpenSearch index."""
    try:
        client.clear()
        console.print(f"Cleared existing index [yellow]'{index_name}'[/yellow]")
    except Exception:
        # Best effort: ignore failures here and continue with indexing.
        pass

def run_rag_pipeline_for_file(
    file_path: Path,
    embed_dim: int,
    embed_model: OllamaEmbedding,
    gen_model: Ollama,
    chunk_transformations: List[TransformComponent]
):
    """
    Performs the full RAG workflow (Load, Index, Query) for a single document.
    Includes explicit cleanup for resource management.
    """
    file_name = file_path.name
    OPENSEARCH_INDEX = f"rag-docling-index-{file_path.stem.lower()}"
    output_file_name = OUTPUT_DIR / f"rag_results_{file_path.stem}.md"
    results = [f"# RAG Results for Document: {file_name}\n\n"]

    console.print(f"\n[bold magenta]Processing File:[/bold magenta] {file_name}")
    console.print(f"-> Index Name: [cyan]{OPENSEARCH_INDEX}[/cyan]")

    # Load the PDF through Docling, keeping the lossless JSON export for chunking.
    docling_reader = DoclingReader(export_type=DoclingReader.ExportType.JSON)
    dir_reader = SimpleDirectoryReader(
        input_files=[file_path],
        file_extractor={
            ".pdf": docling_reader,
        },
    )

    documents = []
    try:
        documents = dir_reader.load_data()
    except Exception as e:
        console.print(f"\n[bold red]ERROR during document loading for {file_name}:[/bold red]")
        console.print(f"Error: {e}")
        console.print("This may indicate Docling model files are missing or malformed in the local path.")
        return

    if not documents:
        console.print(f"[bold red]FATAL ERROR:[/bold red] Document loading failed for {file_name}. No documents loaded. Skipping file.")
        return

    console.print(f"Loaded {len(documents)} document(s).")

    client_default = OpensearchVectorClient(
        endpoint=OPENSEARCH_ENDPOINT,
        index=OPENSEARCH_INDEX,
        dim=embed_dim,
        embedding_field=EMBED_FIELD,
        text_field=TEXT_FIELD,
        auto_create_index=True,
    )
    clear_index(client_default, OPENSEARCH_INDEX)

    vector_store_default = OpensearchVectorStore(client_default)
    storage_context_default = StorageContext.from_defaults(vector_store=vector_store_default)

    index_default = VectorStoreIndex.from_documents(
        documents=documents,
        transformations=chunk_transformations,
        # Without the storage context, the index stays in memory instead of going to OpenSearch.
        storage_context=storage_context_default,
        embed_model=embed_model,
    )
    console.print(f"Successfully indexed data into OpenSearch index '{OPENSEARCH_INDEX}'.")

    QUERY_AI_MODELS = "Which are the main AI models described in this document?"
    query_engine_default = index_default.as_query_engine(llm=gen_model)
    res_default = query_engine_default.query(QUERY_AI_MODELS)

    results.append("## 1. RAG Query (Default Docling Chunker)\n")
    results.append(f"### Query: {QUERY_AI_MODELS}\n")
    results.append(f"### Response:\n```markdown\n{res_default.response.strip()}\n```\n\n---\n")

    QUERY_TABLE_PERF = "What are the performance metrics of any reported backend with 16 threads?"
    res_table_perf = query_engine_default.query(QUERY_TABLE_PERF)

    results.append("## 2. RAG Query (Table Query)\n")
    results.append(f"### Query: {QUERY_TABLE_PERF}\n")
    results.append(f"### Response:\n```markdown\n{res_table_perf.response.strip()}\n```\n\n---\n")

    console.print("\n-> Setting up Hybrid Search (RRF) Pipeline...")

    # Register a search pipeline that fuses the sub-query results with RRF.
    RRF_PIPELINE_URL = f"{OPENSEARCH_ENDPOINT}/_search/pipeline/rrf-pipeline"
    headers = {"Content-Type": "application/json"}
    body = {
        "description": "Post processor for hybrid RRF search",
        "phase_results_processors": [
            {"score-ranker-processor": {"combination": {"technique": "rrf"}}}
        ],
    }

    try:
        requests.put(RRF_PIPELINE_URL, json=body, headers=headers).raise_for_status()
    except requests.exceptions.RequestException as e:
        console.print(f"[bold red]WARNING:[/bold red] Failed to create OpenSearch RRF pipeline. Error: {e}")

    OPENSEARCH_INDEX_RRF = f"{OPENSEARCH_INDEX}-rrf"
    client_rrf = OpensearchVectorClient(
        endpoint=OPENSEARCH_ENDPOINT,
        index=OPENSEARCH_INDEX_RRF,
        dim=embed_dim,
        embedding_field=EMBED_FIELD,
        text_field=TEXT_FIELD,
        # IMPORTANT: search_pipeline enables hybrid search mode
        search_pipeline="rrf-pipeline",
        auto_create_index=True,
    )
    clear_index(client_rrf, OPENSEARCH_INDEX_RRF)

    vector_store_rrf = OpensearchVectorStore(client_rrf)
    storage_context_rrf = StorageContext.from_defaults(vector_store=vector_store_rrf)

    index_hybrid = VectorStoreIndex.from_documents(
        documents=documents,
        transformations=chunk_transformations,
        storage_context=storage_context_rrf,
        embed_model=embed_model,
    )
    console.print(f"Successfully indexed data into RRF index '{OPENSEARCH_INDEX_RRF}'.")

    QUERY_DOCKERFILE = "Does the project provide a Dockerfile?"
    query_engine_hybrid = index_hybrid.as_query_engine(
        llm=gen_model,
        vector_store_query_mode=VectorStoreQueryMode.HYBRID  # This is the key for hybrid search
    )
    res_hybrid = query_engine_hybrid.query(QUERY_DOCKERFILE)

    results.append("## 3. Hybrid RRF Query\n")
    results.append(f"### Query: {QUERY_DOCKERFILE}\n")
    results.append(f"### Response:\n```markdown\n{res_hybrid.response.strip()}\n```\n\n---\n")

    with open(output_file_name, "w") as f:
        f.write("\n".join(results))

    console.print(f"\n[bold green]SUCCESS![/bold green] Results for {file_name} written to [cyan]{output_file_name.resolve()}[/cyan].")

    # Release per-file objects explicitly before moving on to the next document.
    try:
        del query_engine_default, index_default, storage_context_default, vector_store_default, client_default
        del query_engine_hybrid, index_hybrid, storage_context_rrf, vector_store_rrf, client_rrf
        del documents, docling_reader, dir_reader
        gc.collect()
    except Exception:
        pass

def main():
    """
    Main entry point to initialize models and iterate over all files in the input directory.
    """
    INPUT_DIR.mkdir(parents=True, exist_ok=True)
    OUTPUT_DIR.mkdir(parents=True, exist_ok=True)

    try:
        embed_model = OllamaEmbedding(model_name=EMBED_MODEL_NAME)
        gen_model = Ollama(
            model=GEN_MODEL_NAME,
            request_timeout=120.0,
            context_window=8000,
            temperature=0.0,
        )
    except Exception as e:
        console.print("[bold red]FATAL ERROR:[/bold red] Failed to initialize Ollama models.")
        console.print(f"Please ensure Ollama is running and models '{EMBED_MODEL_NAME}' and '{GEN_MODEL_NAME}' are pulled.")
        console.print(f"Error: {e}")
        return

    try:
        wait_for_opensearch(OPENSEARCH_ENDPOINT)
    except ConnectionError as e:
        console.print(f"[bold red]FATAL ERROR:[/bold red] {e}")
        return

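    # Embed a short probe string to discover the vector dimension for the index.
    try:
        embed_dim = len(embed_model.get_text_embedding("test query"))
        console.print(f"\n[bold]Embedding Dimension:[/bold] {embed_dim}")
    except Exception as e:
        # The closing tag must match the opening [bold red] tag, or rich raises a markup error.
        console.print(f"[bold red]FATAL ERROR:[/bold red] Could not get embedding dimension. Is '{EMBED_MODEL_NAME}' model working?")
        console.print(f"Error: {e}")
        return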

    SUPPORTED_EXTENSIONS = ['.pdf']
    input_files = [f for f in INPUT_DIR.glob('*') if f.suffix.lower() in SUPPORTED_EXTENSIONS]

    if not input_files:
        console.print(f"\n[bold yellow]WARNING:[/bold yellow] No supported PDF files found in {INPUT_DIR.resolve()}.")
        console.print(f"Create a directory '{INPUT_DIR.resolve()}' and place your PDF files inside.")
        return

    console.print(f"\n[bold]Found {len(input_files)} file(s) to process.[/bold]")
    console.print("Starting RAG pipeline for each document...")

    for file_path in input_files:
        # Set to True to serialize tables as Markdown inside the chunks.
        process_document_with_md_tables = False

        if process_document_with_md_tables:
            node_parser = DoclingNodeParser(
                chunker=HierarchicalChunker(
                    serializer_provider=MDTableSerializerProvider(),
                )
            )
            current_transformations = [node_parser] + CHUNK_TRANSFORMATIONS_BASE
            console.print("\n-> Using Markdown Table Serialization for this file.")
        else:
            node_parser = DoclingNodeParser()
            current_transformations = [node_parser] + CHUNK_TRANSFORMATIONS_BASE
            console.print("\n-> Using Default Docling Chunker for this file.")

        run_rag_pipeline_for_file(
            file_path=file_path,
            embed_dim=embed_dim,
            embed_model=embed_model,
            gen_model=gen_model,
            chunk_transformations=current_transformations
        )

        del node_parser
        gc.collect()

if __name__ == "__main__":
    main()

- Run the script with the python command. For each PDF in ./input/pdfs, the results are written to a Markdown file in ./output (named rag_results_<file stem>.md).

Et voilà ⭐

✅ Conclusion: Build an AI That Hallucinates Less

Moving beyond basic RAG means focusing on the quality of your retrieval. Docling gives your RAG pipeline the high-fidelity, structurally-aware data it deserves, and OpenSearch provides a high-performance, flexible hybrid search platform for retrieving the most relevant context.

Together, they’re the foundation for a scalable, reliable RAG application that provides accurate, grounded, and trustworthy answers.