“In distributed systems, consistency isn’t just a property — it’s a promise.”

Why This Article

Imagine you’re building a small banking application. Users can deposit and withdraw money, check their balances, and expect data accuracy every single time — even if multiple requests hit the system simultaneously. But the moment you deploy it across containers, networks, and replicas, one question starts haunting every architect:

How do we keep data consistent when everything is happening everywhere?

In this tutorial, we’ll explore that question through a hands-on story — from concept to infrastructure — and deploy a Spring Boot + PostgreSQL banking demo across a five-node Kubernetes lab, fully automated with Vagrant. Our goal isn’t to ship production code, but to understand the design thinking behind consistency, locking, and automation.

Most tutorials use Minikube or kind, which are great for learning but usually run a single node, or simulate extra nodes as containers on one host.

What if you could spin up a full Kubernetes cluster — control plane, multiple worker nodes, real networking, storage, and ingress — entirely automated and reproducible?

Such a cluster makes a perfect local lab for experimenting with deployments, storage, and load testing, without relying on cloud services.

What You’ll Learn

- Build a 5-node Kubernetes cluster using Vagrant and VirtualBox
- Automate provisioning with Bash scripts
- Deploy a real Spring Boot + PostgreSQL application
- Load-test the REST endpoint with k6

Build, deploy, and test a real multi-node Kubernetes cluster from scratch — all on your local machine.

Design

The system provides RESTful endpoints for withdrawal and deposit operations, served by a Spring Boot–based API backend. When the API receives a client request, it updates the account balance in a PostgreSQL relational database. To ensure data consistency under concurrent transactions, the system supports both optimistic and pessimistic locking mechanisms.

Both the API backend and the PostgreSQL database are deployed on a Kubernetes cluster comprising five virtual machines:

- One control plane node for cluster management
- One edge node for network routing and ingress
- Two worker nodes hosting the Spring Boot web applications
- One database node running PostgreSQL

This is a visual representation of the cluster setup:

K8S Architecture Overview

```
┌─────────────────────────────────────────────────────────────┐
│ Kubernetes Cluster                                          │
├─────────────────────────────────────────────────────────────┤
│                                                             │
│ Control Plane (k8s-cp-01)                                   │
│ ├─ IP: 192.168.56.10                                        │
│ └─ Role: Master node, API server, scheduler, controller     │
│                                                             │
│ Worker Nodes:                                               │
│ ├─ k8s-node-01 (192.168.56.11) - tier: edge                 │
│ │  └─ Ingress Controller, Local Path Provisioner, MetalLB   │
│ ├─ k8s-node-02 (192.168.56.12) - tier: backend              │
│ │  └─ Spring Boot Application Pods                          │
│ ├─ k8s-node-03 (192.168.56.13) - tier: backend              │
│ │  └─ Spring Boot Application Pods                          │
│ └─ k8s-node-04 (192.168.56.14) - tier: database             │
│    └─ PostgreSQL Database                                   │
│                                                             │
│ LoadBalancer IP Pool: 192.168.56.240-250                    │
└─────────────────────────────────────────────────────────────┘
```

Building the Complete Environment:

The environment is provisioned with Vagrant, which automates creating the virtual machines and setting up the Kubernetes cluster. Once the infrastructure is ready, we deploy the prebuilt, cloud-native Spring Boot web application and a fully configured PostgreSQL database to assemble the complete application environment.

Prerequisites

The following are required on your host machine:

| Requirement | Version / Size | Notes |
|---|---|---|
| VirtualBox | ≥ 7.1.6 | See the install commands below |
| Vagrant | ≥ 2.4.9 | See the install commands below |
| RAM | ≥ 13 GB | 3 GB for the control plane + 2 GB for each of the 4 workers (11 GB), plus headroom for the host |
| CPU | ≥ 4 cores | Recommended; each VM is configured with 2 vCPUs |
| Network | 192.168.56.0/24 | VirtualBox host-only network |

Install VirtualBox and Vagrant with the commands for your platform:

```bash
# Windows (Chocolatey, run in an administrator PowerShell)
choco install virtualbox vagrant

# Ubuntu/Debian
# Note: distribution packages may be older than VirtualBox 7.1.x; if so, install from Oracle's repository instead
sudo apt-get update && sudo apt-get install -y virtualbox

# Get the latest Vagrant from the HashiCorp APT repository
# Install the HashiCorp GPG key
wget -O- https://apt.releases.hashicorp.com/gpg | sudo gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg

# Add the HashiCorp repository
echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] \
https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list

# Refresh the package index so the new repository is picked up, then install
sudo apt-get update
sudo apt-get install -y vagrant
```

Step 1: Vagrant

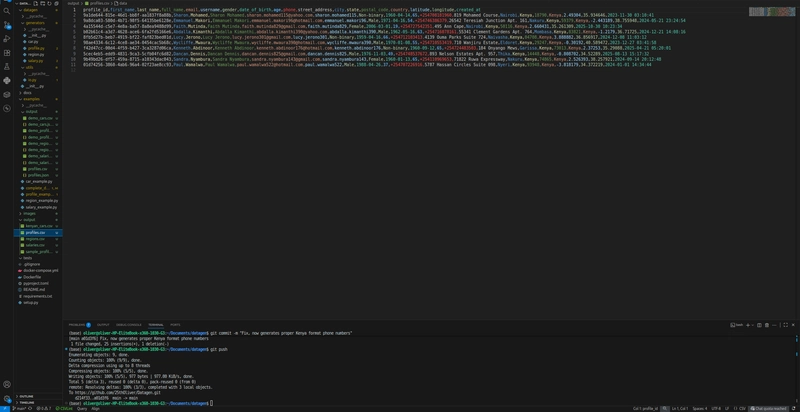

Clone the project

```bash
git clone https://github.com/arata-x/vagrant-k8s-bank-demo.git
```

Project Structure

The outline of the project structure is as follows:

```
.
├── Vagrantfile
└── provision
    ├── deployment
    │   └── standard
    │       ├── app
    │       │   ├── 10-config.yml
    │       │   ├── 20-rbac.yml
    │       │   ├── 30-db-deploy.yml
    │       │   ├── 40-app-deploy.yml
    │       │   ├── 50-services.yml
    │       │   ├── 60-network-policy.yml
    │       │   └── 70-utilities.yml
    │       └── infra
    │           ├── 10-storage-class.yml
    │           └── 20-metallb.yaml
    └── foundation
        ├── 10-common.sh
        ├── 20-node-network.sh
        ├── 30-control-panel.sh
        ├── 40-join-node.sh
        ├── 50-after-vagrant-setup.sh
        └── join-command.sh
```

A Vagrantfile is a configuration file written in Ruby syntax that defines how Vagrant should provision and manage a virtual machine (VM). It’s the heart of any Vagrant project—used to automate the setup of reproducible development environments.

A Vagrantfile typically specifies:

- Base OS image (e.g., ubuntu/jammy64)
- Resources (CPU, memory, disk)
- Networking (port forwarding, private/public networks)
- Provisioning scripts (e.g., install Java, Maven, Docker)
- Shared folders between host and VM

Below is the content of the Vagrantfile:

```ruby
Vagrant.configure("2") do |config|
  config.vm.box = "ubuntu/jammy64"
  config.vm.synced_folder ".", "/vagrant"

  root_path = "provision/foundation/"

  # Common setup for all nodes
  config.vm.provision "shell", path: root_path + "10-common.sh"

  # Node definitions
  nodes = [
    { name: "k8s-cp-01",   ip: "192.168.56.10", script: "30-control-panel.sh", memory: 3072 },
    { name: "k8s-node-01", ip: "192.168.56.11", script: "40-join-node.sh",     memory: 2048 },
    { name: "k8s-node-02", ip: "192.168.56.12", script: "40-join-node.sh",     memory: 2048 },
    { name: "k8s-node-03", ip: "192.168.56.13", script: "40-join-node.sh",     memory: 2048 },
    { name: "k8s-node-04", ip: "192.168.56.14", script: "40-join-node.sh",     memory: 2048 },
  ]

  # Create VMs
  nodes.each do |node|
    config.vm.define node[:name] do |node_vm|
      node_vm.vm.provider "virtualbox" do |vb|
        vb.cpus = 2
        vb.memory = node[:memory]
      end

      node_vm.vm.hostname = node[:name]
      node_vm.vm.network "private_network", ip: node[:ip]
      node_vm.vm.provision "shell", path: root_path + "20-node-network.sh", args: node[:ip]
      node_vm.vm.provision "shell", path: root_path + node[:script]
    end
  end
end
```

1️⃣ Overview

Together with its provisioning scripts, this Vagrantfile:

- Creates 5 Ubuntu 22.04 VMs
- Installs containerd, kubeadm, and kubelet on every node (plus kubectl on the control plane)
- Initializes the Kubernetes control plane
- Joins 4 worker nodes
- Configures Calico CNI networking

2️⃣ Global Configuration

```ruby
Vagrant.configure("2") do |config|
  config.vm.box = "ubuntu/jammy64"
  config.vm.synced_folder ".", "/vagrant"
  # ...
end
```

- Vagrant.configure("2") → uses version 2 of the configuration syntax.
- config.vm.box → every VM uses Ubuntu 22.04 LTS ("jammy64").
- config.vm.synced_folder → shares your project folder on the host with each guest VM at /vagrant.

3️⃣ Common Provisioning

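```ruby
root_path = "provision/foundation/"
config.vm.provision "shell", path: root_path + "10-common.sh"
```

Because this provisioner is declared on the global config object, Vagrant runs it on every VM, before that VM's node-specific provisioners, to install baseline packages and prepare the operating system (see 10-common.sh below).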

4️⃣ Cluster node setup

```ruby
nodes = [
  { name: "k8s-cp-01",   ip: "192.168.56.10", script: "30-control-panel.sh", memory: 3072 },
  { name: "k8s-node-01", ip: "192.168.56.11", script: "40-join-node.sh",     memory: 2048 },
  { name: "k8s-node-02", ip: "192.168.56.12", script: "40-join-node.sh",     memory: 2048 },
  { name: "k8s-node-03", ip: "192.168.56.13", script: "40-join-node.sh",     memory: 2048 },
  { name: "k8s-node-04", ip: "192.168.56.14", script: "40-join-node.sh",     memory: 2048 },
]
```

Defines the five nodes (name, private IP, role-specific script, and memory) that the creation loop iterates over.

5️⃣ Node Creation Loop

```ruby
nodes.each do |node|
  config.vm.define node[:name] do |node_vm|
    node_vm.vm.provider "virtualbox" do |vb|
      vb.cpus = 2
      vb.memory = node[:memory]
    end

    node_vm.vm.hostname = node[:name]
    node_vm.vm.network "private_network", ip: node[:ip]
    node_vm.vm.provision "shell", path: root_path + "20-node-network.sh", args: node[:ip]
    node_vm.vm.provision "shell", path: root_path + node[:script]
  end
end
```

For each node, the loop:

- Defines a named VM.
- Allocates 2 CPUs and the node's memory in VirtualBox.
- Sets the hostname inside the guest.
- Attaches a private (host-only) network on 192.168.56.0/24 with the node's static IP.
- Runs 20-node-network.sh, which pins the kubelet to that IP.
- Runs the role-specific script: 30-control-panel.sh on the control plane, 40-join-node.sh on the workers.

6️⃣ Build the Cluster

Run vagrant up to start provisioning.

```bash
cd k8s
vagrant up
```

🕒 Expected duration: 10–15 minutes.

Verify all VMs are running:

```bash
vagrant status
```

7️⃣ Provisioning Scripts Deep Dive

Let’s take a closer look at the shell scripts used during provisioning.

10-common.sh

The script configures the operating system’s memory settings, installs the required Kubernetes components, and sets up the environment for Kubernetes networking.

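```bash
# Disable swap now and keep it disabled after reboots
sudo swapoff -a
sudo sed -i '/ swap / s/^/#/' /etc/fstab

# Install core dependencies
# (the Kubernetes APT repository setup that precedes this step is not shown here)
sudo apt-get install -y kubelet kubeadm containerd

# Load the kernel modules now and register them to load at boot
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

sudo modprobe overlay
sudo modprobe br_netfilter

# Let iptables see bridged traffic and enable IP forwarding
cat <<'EOF' | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF

sudo sysctl --system
```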

Purpose

1. Disable swap: by default the kubelet refuses to start while swap is enabled, and disabling it keeps memory accounting predictable.
2. Add the Kubernetes repository and install components: configures the official Kubernetes APT repository and installs kubelet, kubeadm, and containerd.
3. Set up kernel modules: loads overlay (used by containerd's overlay filesystem for container images) and br_netfilter (lets iptables process bridged pod traffic), and registers both to load at boot.
4. Set kernel parameters: adjusts sysctl settings to enable IP forwarding and make traffic crossing bridged interfaces visible to iptables.

20-node-network.sh

This script configures the Kubernetes node’s network identity by explicitly assigning its IP address to the kubelet service, ensuring proper communication and cluster registration.

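```bash
# Read the host-only IP that Vagrant assigned to the second interface
NODE_IP=$(ip -4 addr show enp0s8 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')
DROPIN_FILE=/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

# Append --node-ip to KUBELET_KUBECONFIG_ARGS only once, so re-provisioning is idempotent
if ! grep -q -- "--node-ip=$NODE_IP" "$DROPIN_FILE"; then
  sudo sed -i "0,/^Environment=\"KUBELET_KUBECONFIG_ARGS=/s|\"$| --node-ip=$NODE_IP\"|" "$DROPIN_FILE"
fi

# Reload systemd units and restart the kubelet with the new flag
sudo systemctl daemon-reexec
sudo systemctl daemon-reload
sudo systemctl restart kubelet
```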

With Vagrant and VirtualBox, the first network interface (enp0s3) is a NAT adapter for outbound access, and it receives the same address (10.0.2.15) on every VM. The second, user-defined interface (enp0s8) carries the internal cluster traffic. Without an explicit setting, the kubelet can end up advertising the NAT address, which is identical on every node and unreachable from the others, so the node IP has to be configured manually.

After testing, the following approach proved effective: read the IP that Vagrant assigned to enp0s8 and pass it to the kubelet through the --node-ip flag. Once applied, the kubelet starts with the correct node IP, so cluster components communicate reliably and each node registers with the control plane under its host-only address.

30-control-panel.sh

This script sets up the Kubernetes control plane node. It also installs kubectl, the Calico Container Network Interface (CNI) plugin, and k9s, a terminal UI that gives you visibility into the cluster.

```bash
sudo apt-get install -y kubectl

# Initialize the cluster, advertising the API server on the host-only IP
sudo kubeadm init --apiserver-advertise-address=192.168.56.10 --pod-network-cidr=10.224.0.0/16

# Set up kubeconfig for the vagrant user...
mkdir -p /home/vagrant/.kube
cp /etc/kubernetes/admin.conf /home/vagrant/.kube/config
chown vagrant:vagrant /home/vagrant/.kube/config

# ...and for root, the user the Vagrant shell provisioner runs as
mkdir -p ~/.kube
cp /etc/kubernetes/admin.conf ~/.kube/config

# Write the join command into the synced folder so worker nodes can read it
sudo kubeadm token create --print-join-command > /vagrant/provision/foundation/join-command.sh

# Install the Calico network plugin and make it autodetect the host-only interface
AUTO_METHOD="cidr=192.168.56.0/24"
curl -O https://raw.githubusercontent.com/projectcalico/calico/v3.30.3/manifests/calico.yaml
kubectl apply -f calico.yaml
kubectl set env daemonset/calico-node -n kube-system IP_AUTODETECTION_METHOD="$AUTO_METHOD"
sudo systemctl restart kubelet

# Install k9s (-y keeps apt non-interactive during provisioning)
wget https://github.com/derailed/k9s/releases/latest/download/k9s_linux_amd64.deb
sudo apt-get install -y ./k9s_linux_amd64.deb
```

1. Install kubectl and initialize the cluster: installs kubectl, then runs kubeadm init with the API server advertise address (192.168.56.10) and the Pod network CIDR (10.224.0.0/16).
2. Kubeconfig configuration: copies admin.conf for both the vagrant user and the root user, so either can run cluster management commands.
3. Node join command generation: writes the cluster join command to /vagrant/provision/foundation/join-command.sh, where the worker nodes pick it up through the synced folder.
4. Calico network plugin setup: downloads and applies the Calico manifest to enable pod-to-pod networking, and sets Calico's IP autodetection method to the host-only network (cidr=192.168.56.0/24) so it uses that interface rather than the NAT one.
5. Kubernetes management tool installation: installs k9s, a terminal-based Kubernetes cluster management tool.
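The Pod network CIDR must also stay clear of the networks the nodes already use. As a quick sanity check, here is a minimal Java sketch with a hypothetical Cidr helper (not part of the repository) that tests two IPv4 blocks for overlap. It assumes 192.168.0.0/16 as Calico's commonly documented default pool, which would overlap the 192.168.56.0/24 host-only network; the 10.224.0.0/16 range passed to kubeadm does not.

```java
/** Hypothetical helper: an IPv4 CIDR block as an inclusive [first, last] address range. */
final class Cidr {
    final long first; // lowest address in the block, as an unsigned 32-bit value
    final long last;  // highest address in the block

    Cidr(String cidr) {
        String[] parts = cidr.split("/");
        int prefix = Integer.parseInt(parts[1]);
        long size = 1L << (32 - prefix);                            // addresses in the block
        this.first = toLong(parts[0]) & ~(size - 1) & 0xFFFFFFFFL; // clear the host bits
        this.last = first + size - 1;
    }

    /** Converts dotted-quad notation such as "192.168.56.10" to a number. */
    static long toLong(String dotted) {
        long value = 0;
        for (String octet : dotted.split("\\.")) {
            value = (value << 8) | Integer.parseInt(octet);
        }
        return value;
    }

    /** Two ranges overlap when each one starts before the other one ends. */
    boolean overlaps(Cidr other) {
        return first <= other.last && other.first <= last;
    }

    public static void main(String[] args) {
        Cidr hostOnly = new Cidr("192.168.56.0/24");     // VirtualBox host-only network
        Cidr podCidr = new Cidr("10.224.0.0/16");        // --pod-network-cidr passed to kubeadm
        Cidr calicoDefault = new Cidr("192.168.0.0/16"); // assumed Calico default pool
        System.out.println(podCidr.overlaps(hostOnly));       // false
        System.out.println(calicoDefault.overlaps(hostOnly)); // true
    }
}
```

An overlapping pod range would make routes to pods and routes to nodes ambiguous, which is why checking this before running kubeadm init is cheaper than debugging it afterwards.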

Step 2: Post-Init Setup

SSH into the control plane:

```bash
vagrant ssh k8s-cp-01
```

Run the post-initialization script:

```bash
sudo bash /vagrant/provision/foundation/50-after-vagrant-setup.sh
```

50-after-vagrant-setup.sh

This script applies functional labels that define the node roles (edge, backend, database) and deploys essential Kubernetes components with targeted scheduling.

```bash
NODES=(k8s-node-01 k8s-node-02 k8s-node-03 k8s-node-04)

kubectl label node "${NODES[0]}" node-role.kubernetes.io/worker-node="" tier=edge --overwrite
kubectl label node "${NODES[1]}" node-role.kubernetes.io/worker-node="" tier=backend --overwrite
kubectl label node "${NODES[2]}" node-role.kubernetes.io/worker-node="" tier=backend --overwrite
kubectl label node "${NODES[3]}" node-role.kubernetes.io/worker-node="" tier=database --overwrite

# Install Local Path Provisioner for dynamic storage provisioning
kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml

# Install NGINX Ingress Controller
kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/deploy/static/provider/cloud/deploy.yaml

# Install MetalLB
kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.15.2/config/manifests/metallb-native.yaml
```

After labeling, it deploys several core infrastructure components:

- Local Path Provisioner: enables dynamic provisioning of node-local storage
- NGINX Ingress Controller: provides HTTP ingress routing
- MetalLB: assigns external IPs to LoadBalancer Services (its Layer 2 address pool is configured in Step 3)

Verify:

```bash
kubectl get nodes --show-labels
kubectl get pods -A
```

Step 3: Deploy Infrastructure

Apply storage and networking configuration.

Run Deployment

```bash
kubectl apply -f /vagrant/provision/deployment/standard/infra
```

What’s Inside

Configuration Review

10-storage-class.yml

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: vm-storage
provisioner: rancher.io/local-path
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
```

This manifest defines a StorageClass named vm-storage that uses the Rancher Local Path Provisioner to dynamically create node-local PersistentVolumes. It sets volumeBindingMode: WaitForFirstConsumer so volume provisioning is deferred until a pod is scheduled, ensuring the PV is created on the same node as the workload. The reclaimPolicy: Delete cleans up underlying storage when the PersistentVolumeClaim is removed.

20-metallb.yaml

```yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: default-address-pool
  namespace: metallb-system
spec:
  addresses:
    - 192.168.56.240-192.168.56.250
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: default-l2-advert
  namespace: metallb-system
# No ipAddressPools selector: this advertisement applies to all pools
```

In cloud environments, services like AWS or GCP automatically provide load balancers to expose your applications to the outside world. But on bare-metal or virtualized Kubernetes clusters, you don’t get that luxury out of the box — and that’s where MetalLB steps in.

The manifest configures MetalLB to handle external traffic just like a cloud load balancer would. It defines an IPAddressPool that allocates IPs from 192.168.56.240–192.168.56.250, and an L2Advertisement that announces those addresses at Layer 2 so other devices on the network can reach your services directly.

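The result is cloud-like LoadBalancer Services for on-premises or Vagrant-based Kubernetes setups. Keep in mind that in Layer 2 mode a single node answers ARP requests for each service IP, so all traffic for that IP enters through one node (another node takes over if it fails), and kube-proxy then spreads it across the backing pods.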
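A pool like this is only safe if it stays inside the host-only subnet and avoids addresses that are already in use. The Java sketch below uses a hypothetical PoolCheck helper (not part of the project) to validate the pool from 20-metallb.yaml against the 192.168.56.0/24 network and the five node IPs, and to report its size: .240 through .250 inclusive is 11 addresses. It knows nothing about other devices on the network (for example, addresses handed out by a DHCP server), so treat it as a first-pass check only.

```java
import java.util.List;

/** Hypothetical first-pass checks for a MetalLB-style "start-end" address range. */
final class PoolCheck {
    static long toLong(String dotted) {
        long value = 0;
        for (String octet : dotted.split("\\.")) {
            value = (value << 8) | Integer.parseInt(octet);
        }
        return value;
    }

    /** True if ip lies inside cidr, e.g. inSubnet("192.168.56.240", "192.168.56.0/24"). */
    static boolean inSubnet(String ip, String cidr) {
        String[] parts = cidr.split("/");
        long mask = (0xFFFFFFFFL << (32 - Integer.parseInt(parts[1]))) & 0xFFFFFFFFL;
        return (toLong(ip) & mask) == (toLong(parts[0]) & mask);
    }

    /** Validates an inclusive "start-end" range and returns the number of addresses in it. */
    static long validate(String range, String subnet, List<String> nodeIps) {
        String[] ends = range.split("-");
        long start = toLong(ends[0]);
        long end = toLong(ends[1]);
        if (end < start) {
            throw new IllegalArgumentException("range is reversed: " + range);
        }
        if (!inSubnet(ends[0], subnet) || !inSubnet(ends[1], subnet)) {
            throw new IllegalArgumentException("range leaves " + subnet);
        }
        for (String ip : nodeIps) {
            long n = toLong(ip);
            if (n >= start && n <= end) {
                throw new IllegalArgumentException("range contains node IP " + ip);
            }
        }
        return end - start + 1;
    }

    public static void main(String[] args) {
        List<String> nodes = List.of("192.168.56.10", "192.168.56.11", "192.168.56.12",
                "192.168.56.13", "192.168.56.14");
        System.out.println(validate("192.168.56.240-192.168.56.250", "192.168.56.0/24", nodes)); // 11
    }
}
```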

Verify:

```bash
kubectl get storageclass
kubectl get ipaddresspool -n metallb-system
```

Step 4: Deploy the Application

In this step, we bring everything together by deploying a complete multi-tier application stack on Kubernetes. These manifests set up a dedicated namespace, inject configuration data, and apply the RBAC permissions needed for access control. They also provision a PostgreSQL database backed by persistent storage, then deploy a Spring Boot application with multiple replicas for scalability and resilience.

The services are exposed through ClusterIP and NodePort Services and an NGINX Ingress, and NetworkPolicies (enforced by Calico) control which pods may communicate with each other. Optionally, the manifests also install monitoring and maintenance utilities for better visibility and manageability of the application stack.

Run Deployment

```bash
kubectl apply -f /vagrant/provision/deployment/standard/app
```

What’s Inside

- 10-config.yml: Namespaces, ConfigMaps, Secrets
- 20-rbac.yml: RBAC setup
- 30-db-deploy.yml: PostgreSQL with a PVC
- 40-app-deploy.yml: Spring Boot app (2 replicas)
- 50-services.yml: NodePort Service and Ingress
- 60-network-policy.yml: secure traffic rules
- 70-utilities.yml: optional utilities

Configuration Review

10-config.yml

```yaml
apiVersion: v1
kind: Namespace
metadata: { name: demo }
```

Creates a demo namespace for isolating application resources.

20-rbac.yml

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: database-rolebinding
  namespace: demo
subjects:
  - kind: ServiceAccount
    name: database-sa   # must match serviceAccountName in 30-db-deploy.yml
    namespace: demo
roleRef:
  kind: Role
  name: database-role
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: app-rolebinding
  namespace: demo
subjects:
  - kind: ServiceAccount
    name: app-sa
    namespace: demo
roleRef:
  kind: Role
  name: app-role
  apiGroup: rbac.authorization.k8s.io
```

The manifest binds a Role to each of two service accounts, database-sa for PostgreSQL and app-sa for the Spring Boot service, enabling least-privilege access and a clear separation of duties.

30-db-deploy.yml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: db-cm
  namespace: demo
data:
  POSTGRES_USER: postgres
  POSTGRES_DB: appdb
  APP_USER: appuser
---
apiVersion: v1
kind: Secret
metadata:
  name: db-secret
  namespace: demo
type: Opaque
stringData:
  POSTGRES_PASSWORD: strong-password
  APP_PASSWORD: strong-password
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: db-init
  namespace: demo
data:
  00-roles.sql: (skip)
  01-db.sql: (skip)
  02-schema.sql: (skip)
  03-comments.sql: (skip)
  04-table.sql: (skip)
---
apiVersion: v1
kind: Service
metadata:
  name: postgres-headless
  namespace: demo
  labels:
    app: postgres
spec:
  clusterIP: None
  ports:
    - name: pg
      port: 5432
      targetPort: 5432
  selector:
    app: postgres
---
apiVersion: v1
kind: Service
metadata:
  name: postgres
  namespace: demo
  labels:
    app: postgres
spec:
  type: ClusterIP
  ports:
    - name: pg
      port: 5432
      targetPort: 5432
  selector:
    app: postgres
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: postgres
  namespace: demo
spec:
  serviceName: postgres-headless
  replicas: 1
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      serviceAccountName: database-sa
      nodeSelector:
        tier: database
      containers:
        - name: postgres
          image: postgres:18
          ports:
            - containerPort: 5432
              name: pg
          envFrom:
            - secretRef:
                name: db-secret
            - configMapRef:
                name: db-cm
          volumeMounts:
            - name: db-data
              mountPath: /var/lib/postgresql/
            - name: run-socket
              mountPath: /var/run/postgresql
            - name: db-init
              mountPath: /docker-entrypoint-initdb.d
      volumes:
        - name: run-socket
          emptyDir: {}
        - name: db-init
          configMap:
            name: db-init
  volumeClaimTemplates:
    - metadata:
        name: db-data
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: vm-storage
        resources:
          requests:
            storage: 5Gi
```

The manifest provisions a single-replica PostgreSQL StatefulSet on the database tier. It runs under the database-sa service account, loads settings and credentials from the db-cm ConfigMap and the db-secret Secret, mounts the init SQL scripts from the db-init ConfigMap (the postgres image runs them only when the data directory is empty, that is, on first start), and persists data in a PersistentVolumeClaim backed by the vm-storage StorageClass. The database is exposed through two Services: a standard ClusterIP Service, postgres, for in-cluster access on port 5432, and a headless Service, postgres-headless, which the StatefulSet uses as its serviceName to give its pod a stable DNS name.
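The two Services give the database two different DNS names. As a small illustration, the Java sketch below uses a hypothetical ClusterDns helper to build both, assuming the default cluster domain cluster.local, which matches the JDBC URL the application uses in the next manifest. The ClusterIP name resolves to the Service's stable virtual IP; a StatefulSet pod behind the headless Service is addressed as <statefulset>-<ordinal>.<service>.<namespace>.svc.<domain>.

```java
/** Hypothetical helper that assembles in-cluster DNS names for Services and StatefulSet pods. */
final class ClusterDns {
    /** <service>.<namespace>.svc.<domain>: resolves to the Service's cluster IP. */
    static String service(String service, String namespace, String domain) {
        return service + "." + namespace + ".svc." + domain;
    }

    /** <statefulset>-<ordinal>.<headless-service>.<namespace>.svc.<domain>: one specific pod. */
    static String statefulSetPod(String statefulSet, int ordinal, String headlessService,
                                 String namespace, String domain) {
        return statefulSet + "-" + ordinal + "." + service(headlessService, namespace, domain);
    }

    static String jdbcUrl(String host, int port, String database) {
        return "jdbc:postgresql://" + host + ":" + port + "/" + database;
    }

    public static void main(String[] args) {
        String domain = "cluster.local"; // assumed default cluster domain
        System.out.println(jdbcUrl(service("postgres", "demo", domain), 5432, "appdb"));
        // jdbc:postgresql://postgres.demo.svc.cluster.local:5432/appdb
        System.out.println(statefulSetPod("postgres", 0, "postgres-headless", "demo", domain));
        // postgres-0.postgres-headless.demo.svc.cluster.local
    }
}
```

The application connects through the ClusterIP name, so it keeps working if the pod is rescheduled; the per-pod name matters mostly for tools that must reach one specific replica.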

40-app-deploy.yml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: springboot-cm
  namespace: demo
  labels:
    environment: demo
data:
  BPL_JVM_THREAD_COUNT: "100"
  JAVA_TOOL_OPTIONS: "-XX:InitialRAMPercentage=25.0 -XX:MaxRAMPercentage=75.0"
  LOGGING_LEVEL_ROOT: INFO
  SPRING_PROFILES_ACTIVE: prod
  SPRING_DATASOURCE_URL: "jdbc:postgresql://postgres.demo.svc.cluster.local:5432/appdb"
---
apiVersion: v1
kind: Secret
metadata:
  name: springboot-secret
  namespace: demo
  labels:
    environment: demo
type: Opaque
stringData:
  spring.datasource.username: appuser
  spring.datasource.password: strong-password
---
apiVersion: v1
kind: Service
metadata:
  name: api-svc
  namespace: demo
  labels:
    environment: demo
spec:
  selector:
    app: api
  ports:
    - port: 80
      targetPort: 8080
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: bank-account-demo
  namespace: demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: api
  template:
    metadata:
      labels:
        app: api
    spec:
      serviceAccountName: app-sa
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: tier
                    operator: In
                    values:
                      - backend
      topologySpreadConstraints:
        - maxSkew: 1
          topologyKey: kubernetes.io/hostname
          whenUnsatisfiable: DoNotSchedule
          labelSelector:
            matchLabels:
              app: api
      # Init containers run to completion before the application container starts
      initContainers:
        - name: wait-for-database
          image: busybox
          command: ['sh', '-c', 'until nc -z postgres.demo.svc.cluster.local 5432; do echo waiting; sleep 2; done;']
      containers:
        - name: bank-account-demo
          image: docker.io/aratax/bank-account-demo:1.0-native
          ports:
            - containerPort: 8080
          envFrom:
            - configMapRef:
                name: springboot-cm
            - secretRef:
                name: springboot-secret
```

This manifest provisions a two-replica Spring Boot application deployment in the backend tier. It uses the app-sa service account, loads runtime configuration and credentials from the ConfigMap and Secret, and connects to the PostgreSQL database via the internal DNS endpoint postgres.demo.svc.cluster.local:5432. The deployment includes an init container wait-for-database to ensure the database is reachable before application startup. It exposes the application through a ClusterIP Service named api-svc on port 80. The deployment is set up to run only on backend nodes and to spread its pods evenly across different nodes for better reliability and load balance.
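The init container's shell loop is a plain TCP reachability check. For readers who prefer to see the logic in Java, here is a minimal sketch with a hypothetical WaitForPort class (not part of the application) that does what until nc -z host 5432; do sleep 2; done does, except that it gives up after a bounded number of attempts instead of looping forever.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.time.Duration;

/** Hypothetical Java analogue of the wait-for-database init container. */
final class WaitForPort {
    /** True if a TCP connection to host:port succeeds within timeoutMs (like nc -z). */
    static boolean isOpen(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false; // refused, unreachable, unresolvable host, or timed out
        }
    }

    /** Polls until the port opens or maxAttempts is used up; returns whether it opened. */
    static boolean await(String host, int port, int maxAttempts, Duration interval)
            throws InterruptedException {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (isOpen(host, port, 1_000)) {
                return true;
            }
            System.out.println("waiting for " + host + ":" + port + " (attempt " + attempt + ")");
            Thread.sleep(interval.toMillis());
        }
        return false;
    }

    public static void main(String[] args) throws InterruptedException {
        boolean up = await("postgres.demo.svc.cluster.local", 5432, 30, Duration.ofSeconds(2));
        System.exit(up ? 0 : 1); // a non-zero exit lets Kubernetes restart the init container
    }
}
```

Note that an open port only proves PostgreSQL is listening, not that it already accepts queries; the application's own connection retry still matters.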

50-services.yml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: database-nodeport
  namespace: demo
  labels:
    environment: demo
spec:
  type: NodePort
  selector:
    app: postgres
  ports:
    - name: pg
      port: 5432
      targetPort: 5432
      nodePort: 30000
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: webapp-ingress
  namespace: demo
  labels:
    environment: demo
spec:
  ingressClassName: nginx
  rules:
    - host: app.demo.local
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: api-svc
                port:
                  number: 80
```

The manifest makes the application and the database reachable from outside the cluster. It defines a NodePort Service named database-nodeport that exposes PostgreSQL on port 30000 of every node, which is handy for development and debugging. It also creates an Ingress named webapp-ingress that routes HTTP traffic for the host app.demo.local to the internal api-svc Service, which listens on port 80 and forwards to the Spring Boot container on port 8080.

Step 5: Review Application Design

To implement a simple banking system, two tables were designed:

- accounts: stores core account information (owner, currency, balance, etc.).
- ledger_entries: records all debit/credit transactions linked to each account for auditing and reconciliation.

This schema ensures data integrity, supports concurrent balance updates via versioning, and provides immutable transaction history.

Database Table Layout:

```
+-------------------------------------------------------------+
| accounts                                                    |
+-------------------------------------------------------------+
| Column       | Type           | Constraints / Default       |
|--------------|----------------|-----------------------------|
| id           | UUID (PK)      | DEFAULT uuidv7()            |
| owner_name   | TEXT           | NOT NULL                    |
| currency     | CHAR(3)        | NOT NULL                    |
| balance      | NUMERIC(18,2)  | NOT NULL DEFAULT 0          |
| version      | BIGINT         | NOT NULL DEFAULT 0          |
| updated_at   | TIMESTAMPTZ    | NOT NULL DEFAULT NOW()      |
+-------------------------------------------------------------+
| INDEX: idx_accounts_owner (owner_name)                      |
+-------------------------------------------------------------+
               │ 1 account
               │ (fk_ledger_account)
               ▼ many ledger entries
+-------------------------------------------------------------+
| ledger_entries                                              |
+-------------------------------------------------------------+
| Column       | Type           | Constraints / Default       |
|--------------|----------------|-----------------------------|
| id           | UUID (PK)      | DEFAULT uuidv7()            |
| account_id   | UUID (FK)      | REFERENCES accounts(id)     |
| direction    | TEXT           | NOT NULL                    |
| amount       | NUMERIC(18,2)  | NOT NULL CHECK (amount > 0) |
| reason       | TEXT           |                             |
| created_at   | TIMESTAMPTZ    | NOT NULL DEFAULT NOW()      |
+-------------------------------------------------------------+
```
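Because every balance change is also recorded in ledger_entries, the balance column can be audited by replaying the ledger. The Java sketch below is hypothetical: it assumes every change to an account goes through the ledger starting from the column default of 0, and it invents CREDIT and DEBIT as direction values, since the schema only declares direction as TEXT NOT NULL.

```java
import java.math.BigDecimal;
import java.util.List;

/** Hypothetical reconciliation: recompute a balance by replaying ledger entries. */
final class LedgerReconciliation {
    /** Assumed direction values; the schema only says `direction TEXT NOT NULL`. */
    enum Direction { CREDIT, DEBIT }

    record Entry(Direction direction, BigDecimal amount) {
        Entry {
            // mirrors the CHECK (amount > 0) constraint on ledger_entries.amount
            if (amount.signum() <= 0) {
                throw new IllegalArgumentException("amount must be > 0");
            }
        }
    }

    /** Credits add to the balance and debits subtract, starting from the default of 0. */
    static BigDecimal replay(List<Entry> entries) {
        BigDecimal balance = BigDecimal.ZERO;
        for (Entry e : entries) {
            balance = e.direction() == Direction.CREDIT
                    ? balance.add(e.amount())
                    : balance.subtract(e.amount());
        }
        return balance;
    }

    /** compareTo ignores scale, so 200 and 200.00 (NUMERIC(18,2)) count as equal. */
    static boolean reconciles(BigDecimal storedBalance, List<Entry> entries) {
        return storedBalance.compareTo(replay(entries)) == 0;
    }

    public static void main(String[] args) {
        List<Entry> entries = List.of(
                new Entry(Direction.CREDIT, new BigDecimal("500.00")),
                new Entry(Direction.DEBIT, new BigDecimal("300.00")));
        System.out.println(replay(entries));                            // 200.00
        System.out.println(reconciles(new BigDecimal("200"), entries)); // true
    }
}
```

A mismatch between the stored balance and the replayed ledger is exactly the symptom a lost update would leave behind, which is what the locking strategies below are meant to prevent.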

Spring Boot:

The Java application provides a unified transaction endpoint that processes both deposits and withdrawals and lets the client choose the locking strategy (OPTIMISTIC or PESSIMISTIC) per request.

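REST Endpoint

```java
import jakarta.validation.Valid;
import java.util.UUID;
import org.springframework.http.MediaType;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.*;

@RestController
@RequestMapping("/api/accounts")
public class AccountController {

    private final AccountService accountService;

    public AccountController(AccountService accountService) {
        this.accountService = accountService;
    }

    // One endpoint for deposits and withdrawals; the request body selects the locking mode
    @PostMapping(value = "/{id}/transaction", produces = MediaType.APPLICATION_JSON_VALUE)
    public ResponseEntity<TransactionResponse> transaction(
            @PathVariable UUID id,
            @Valid @RequestBody TransactionRequest request) {
        TransactionResponse response = accountService.executeTransaction(id, request);
        return ResponseEntity.ok(response);
    }
}
```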

Example request: POST /api/accounts/3f93c1c2-1c52-4df5-8c6a-9b0c6d7c5c11/transaction

```json
{
  "type": "DEPOSIT",
  "amount": 500,
  "lockingMode": "OPTIMISTIC",
  "reason": "API_DEPOSIT"
}
```

or

```json
{
  "type": "WITHDRAWAL",
  "amount": 300,
  "lockingMode": "PESSIMISTIC",
  "reason": "API_WITHDRAWAL"
}
```

Concurrency Control Strategy in JPA

The Java application uses JPA (the Java Persistence API, now Jakarta Persistence), the standard Java specification for object-relational mapping, with Hibernate as the provider (the entity below uses Hibernate's @UuidGenerator). It talks to PostgreSQL through JPA while maintaining data integrity under concurrent transactions, and it implements the two locking strategies described below to show how JPA handles concurrency in realistic scenarios.

Optimistic Locking Strategy

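The @Version field provides optimistic concurrency control: every update increments the version and includes the previously read version in its WHERE clause. When two transactions modify the same Account, the second commit finds no row with the version it read, its update affects zero rows, and JPA throws an OptimisticLockException (Spring surfaces it as ObjectOptimisticLockingFailureException). This prevents lost updates without holding row locks while the transaction runs. A retry strategy with controlled backoff (for example, up to 5 attempts) can handle these transient conflicts gracefully; each retry must start a new transaction and re-read the account.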
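To make the version check concrete without a database, here is an in-memory Java sketch with hypothetical class names (not the project's code). An AtomicReference holds an immutable (balance, version) snapshot, and compareAndSet succeeds only if the row still holds the exact snapshot that was read, mirroring an UPDATE ... WHERE id = ? AND version = ? that affects either one row or zero. The retry loop assumes up to maxAttempts tries with jittered exponential backoff; in the real service the whole transactional method would be retried instead.

```java
import java.math.BigDecimal;
import java.util.concurrent.ThreadLocalRandom;
import java.util.concurrent.atomic.AtomicReference;

/** Hypothetical in-memory model of @Version optimistic locking with bounded retry. */
final class OptimisticAccount {
    record Snapshot(BigDecimal balance, long version) {}

    /** Plays the role of OptimisticLockException. */
    static final class StaleVersionException extends RuntimeException {}

    private final AtomicReference<Snapshot> row;

    OptimisticAccount(BigDecimal initialBalance) {
        row = new AtomicReference<>(new Snapshot(initialBalance, 0));
    }

    Snapshot read() {
        return row.get();
    }

    /** Writes only if the row still holds the exact snapshot `seen` (same version). */
    Snapshot commit(Snapshot seen, BigDecimal newBalance) {
        Snapshot next = new Snapshot(newBalance, seen.version() + 1);
        if (!row.compareAndSet(seen, next)) {
            throw new StaleVersionException(); // someone else committed first
        }
        return next;
    }

    /** Read-modify-write with up to maxAttempts tries and jittered exponential backoff. */
    Snapshot depositWithRetry(BigDecimal amount, int maxAttempts) throws InterruptedException {
        for (int attempt = 1; ; attempt++) {
            Snapshot seen = read();
            try {
                return commit(seen, seen.balance().add(amount));
            } catch (StaleVersionException conflict) {
                if (attempt >= maxAttempts) {
                    throw conflict; // give up and report the conflict to the caller
                }
                long backoffMs = 5L << Math.min(attempt, 6); // 10, 20, 40 ... capped at 320 ms
                Thread.sleep(backoffMs + ThreadLocalRandom.current().nextLong(5));
            }
        }
    }

    public static void main(String[] args) {
        OptimisticAccount account = new OptimisticAccount(new BigDecimal("10"));
        Snapshot txA = account.read(); // both "transactions" read version 0
        Snapshot txB = account.read();
        account.commit(txA, txA.balance().add(new BigDecimal("24"))); // succeeds: version 1
        try {
            account.commit(txB, txB.balance().add(new BigDecimal("46"))); // still based on version 0
        } catch (StaleVersionException e) {
            System.out.println("stale write rejected; balance = " + account.read().balance()); // 34
        }
    }
}
```

In main, both transactions start from version 0; the first commit moves the row to version 1 with a balance of 34, and the second commit is rejected instead of silently overwriting the first deposit. Calling depositWithRetry instead would re-read version 1 and apply the second deposit on top of it.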

Entity

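```java
import jakarta.persistence.Column;
import jakarta.persistence.Entity;
import jakarta.persistence.Id;
import jakarta.persistence.Table;
import jakarta.persistence.Version;
import java.math.BigDecimal;
import java.time.Instant;
import java.util.UUID;
import lombok.Data;
import org.hibernate.annotations.UuidGenerator;

@Data
@Entity
@Table(name = "accounts", schema = "app")
public class Account {

    @Id
    @UuidGenerator
    private UUID id;

    @Column(name = "owner_name", nullable = false)
    private String ownerName;

    @Column(length = 3, nullable = false)
    private String currency;

    @Column(nullable = false, precision = 18, scale = 2)
    private BigDecimal balance = BigDecimal.ZERO;

    // Incremented by the JPA provider on every update and checked in the UPDATE's WHERE clause
    @Version
    @Column(nullable = false)
    private long version;

    @Column(name = "updated_at", columnDefinition = "timestamptz", nullable = false)
    private Instant updatedAt = Instant.now();
}
```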

Service

```java
@Transactional(isolation = Isolation.READ_COMMITTED, rollbackFor = Exception.class)
@Override
public TransactionResponse execute(UUID id, TransactionType type, BigDecimal amt, String reason) {
    // Plain read: no row lock is taken here
    var account = accountRepo.findById(id).orElseThrow();

    if (TransactionType.DEPOSIT.equals(type)) {
        account.deposit(amt);
    } else {
        account.withdraw(amt);
    }

    // No explicit save for the account: dirty checking flushes it (at the latest at commit)
    // as UPDATE ... WHERE id = ? AND version = ?, which is where a conflict is detected
    var ledgerEntry = ledgerRepo.save(LedgerEntry.of(account, type, amt, reason));
    return TransactionResponse.success(account, ledgerEntry);
}
```
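The Account methods deposit and withdraw called above are not shown in the source. A plausible shape, sketched here as a standalone hypothetical class so it compiles without JPA, validates the amount and refuses to overdraw. An exception like this could be mapped to the HTTP 422 "insufficient funds" response that appears in the k6 log later on.

```java
import java.math.BigDecimal;

/** Hypothetical stand-in for the Account entity's deposit/withdraw methods. */
final class AccountBalance {
    /** Business-rule failure; a controller advice could translate it into HTTP 422. */
    static final class InsufficientFundsException extends RuntimeException {
        InsufficientFundsException(String message) {
            super(message);
        }
    }

    private BigDecimal balance;

    AccountBalance(BigDecimal openingBalance) {
        this.balance = openingBalance;
    }

    BigDecimal balance() {
        return balance;
    }

    void deposit(BigDecimal amount) {
        requirePositive(amount);
        balance = balance.add(amount);
    }

    void withdraw(BigDecimal amount) {
        requirePositive(amount);
        if (balance.compareTo(amount) < 0) {
            throw new InsufficientFundsException(
                    "insufficient funds: balance " + balance + ", requested " + amount);
        }
        balance = balance.subtract(amount);
    }

    // Mirrors the CHECK (amount > 0) constraint on ledger_entries.amount
    private static void requirePositive(BigDecimal amount) {
        if (amount == null || amount.signum() <= 0) {
            throw new IllegalArgumentException("amount must be > 0");
        }
    }
}
```

The balance check runs against the state loaded in the current transaction, so it is only as safe as the locking around it: under optimistic locking a decision made on a stale balance is rejected at commit, and under pessimistic locking the row is locked before the balance is read.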

Pessimistic Locking Strategy

The @Lock(LockModeType.PESSIMISTIC_WRITE) annotation enforces pessimistic locking by issuing a database-level SELECT ... FOR UPDATE query. This explicitly locks the selected Account row until the current transaction completes.

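```java
import jakarta.persistence.LockModeType;
import java.util.Optional;
import java.util.UUID;
import org.springframework.data.jpa.repository.*;
import org.springframework.data.repository.query.Param;

public interface AccountRepository extends JpaRepository<Account, UUID> {

    // Pessimistic row lock (SELECT ... FOR UPDATE); must be called inside a transaction
    @Lock(LockModeType.PESSIMISTIC_WRITE)
    @Query("select a from Account a where a.id = :id")
    Optional<Account> findForUpdate(@Param("id") UUID id);
}
```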
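The same idea can be modeled in memory. In the Java sketch below (hypothetical, not the project's code), a ReentrantLock stands in for the row lock: each writer acquires it before reading the balance and releases it only after writing, just as SELECT ... FOR UPDATE holds the lock until commit or rollback. Writers queue up instead of retrying, so 100 concurrent deposits of 1 from 50 threads always end at exactly 100.

```java
import java.math.BigDecimal;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

/** Hypothetical in-memory model of pessimistic (lock-before-read) account updates. */
final class PessimisticAccount {
    private final ReentrantLock rowLock = new ReentrantLock(); // stands in for the row lock
    private BigDecimal balance;
    private long version;

    PessimisticAccount(BigDecimal initialBalance) {
        this.balance = initialBalance;
    }

    /** Applies a signed amount; returns false instead of overdrawing. */
    boolean apply(BigDecimal delta) {
        rowLock.lock();           // ~ findForUpdate(id): waits while another writer holds the row
        try {
            BigDecimal next = balance.add(delta);
            if (next.signum() < 0) {
                return false;     // business-rule failure; the lock is still released below
            }
            balance = next;
            version++;
            return true;
        } finally {
            rowLock.unlock();     // ~ COMMIT or ROLLBACK releases the row lock
        }
    }

    BigDecimal balance() {
        rowLock.lock();
        try {
            return balance;
        } finally {
            rowLock.unlock();
        }
    }

    long version() {
        rowLock.lock();
        try {
            return version;
        } finally {
            rowLock.unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        PessimisticAccount account = new PessimisticAccount(BigDecimal.ZERO);
        ExecutorService pool = Executors.newFixedThreadPool(50); // 50 concurrent writers
        for (int i = 0; i < 100; i++) {
            pool.execute(() -> account.apply(BigDecimal.ONE));   // 100 deposits of 1
        }
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
        System.out.println(account.balance() + " at version " + account.version()); // 100 at version 100
    }
}
```

The trade-off mirrors the database: there are no conflicts to retry, but every writer to a hot account is serialized, and a slow transaction keeps everyone else waiting for as long as it holds the lock.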

Step 6: Access the App

Get the Ingress IP:

```bash
kubectl get ingress -n demo
```

Example output:

```
NAME             CLASS   HOSTS            ADDRESS          PORTS   AGE
webapp-ingress   nginx   app.demo.local   192.168.56.240   80      21h
```

Then test endpoints from your host:

```bash
curl -H "Host: app.demo.local" http://192.168.56.240/actuator/health
```

Step 7: Testing with k6

In this step, the environment is fully prepared to simulate concurrent bank account transactions and observe how the locking mechanisms behave under load.

You can use the provided k6 test script (test/k6-load-test.js) to run the simulation. For each test run, you choose an account and a locking strategy (OPTIMISTIC or PESSIMISTIC). The script then launches 50 virtual users (VUs) with the shared-iterations executor, which distributes a total of 100 iterations across all VUs. This mimics concurrent access to the same account, so you can verify how data integrity is preserved during simultaneous transactions.
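The shared-iterations executor is easy to misread as 100 iterations per VU. The total is fixed and VUs pull from a shared pool, so faster VUs run more iterations and some may run none. The Java sketch below models only that scheduling rule, with threads standing in for VUs; SharedIterations is a hypothetical class and says nothing about how k6 is actually implemented.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical model of "N iterations shared among M VUs". */
final class SharedIterations {
    /** Runs `iterations` tasks across `vus` workers; returns how many iterations each VU ran. */
    static Map<Integer, Integer> run(int vus, int iterations, Runnable iteration)
            throws InterruptedException {
        AtomicInteger remaining = new AtomicInteger(iterations);
        Map<Integer, Integer> perVu = new ConcurrentHashMap<>();
        ExecutorService pool = Executors.newFixedThreadPool(vus);
        for (int vu = 0; vu < vus; vu++) {
            int id = vu;
            pool.execute(() -> {
                // each VU keeps claiming iterations until the shared budget is exhausted
                while (remaining.getAndDecrement() > 0) {
                    iteration.run();
                    perVu.merge(id, 1, Integer::sum);
                }
            });
        }
        pool.shutdown();
        pool.awaitTermination(2, TimeUnit.MINUTES); // bounded wait, loosely like maxDuration
        return perVu;
    }

    public static void main(String[] args) throws InterruptedException {
        AtomicInteger calls = new AtomicInteger();
        Map<Integer, Integer> perVu = run(50, 100, calls::incrementAndGet);
        System.out.println(calls.get()); // 100: the total is fixed, not per VU
        System.out.println(perVu.size() + " VUs ran at least one iteration");
    }
}
```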

To get started, install k6 on your host system:

```bash
# Debian/Ubuntu
sudo gpg -k
sudo gpg --no-default-keyring --keyring /usr/share/keyrings/k6-archive-keyring.gpg --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys C5AD17C747E3415A3642D57D77C6C491D6AC1D69
echo "deb [signed-by=/usr/share/keyrings/k6-archive-keyring.gpg] https://dl.k6.io/deb stable main" | sudo tee /etc/apt/sources.list.d/k6.list
sudo apt-get update
sudo apt-get install k6

# Windows
choco install k6
```

Run the load test:

```bash
# Run on the host. Quote the values: backticks would make the shell try to execute them.
k6 run -e BASE_URL="http://192.168.56.240" -e ACCOUNT_ID="3f93c1c2-1c52-4df5-8c6a-9b0c6d7c5c11" -e MODE="OPTIMISTIC" test/k6-load-test.js
```

You should see output similar to this:


```text
  execution: local
     script: k6-load-test.js
     output: -

  scenarios: (100.00%) 1 scenario, 50 max VUs, 2m30s max duration (incl. graceful stop):
           * concurrent_load: 100 iterations shared among 50 VUs (maxDuration: 2m0s, gracefulStop: 30s)

INFO[0000] ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ source=console
INFO[0000] ▶ K6 Load Test for AccountController source=console
INFO[0000] ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ source=console
INFO[0000] ▶ Target base URL : http://192.168.56.240:8080 source=console
INFO[0000] ▶ Account ID : 3f93c1c2-1c52-4df5-8c6a-9b0c6d7c5c11 source=console
INFO[0000] ▶ Locking Mode : OPTIMISTIC source=console
INFO[0000] ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ source=console
INFO[0000] source=console
INFO[0000] 📊 Initial Account State: source=console
INFO[0000] Balance: 10 USD | Version: 494 | Owner: Alice source=console
INFO[0000] ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ source=console
INFO[0000] source=console
ERRO[0000] [ERROR 422] WITHDRAWAL 60 failed: Business rule violation - insufficient funds source=console
INFO[0000] [2025-11-02T13:20:44.705Z] TX:a52d4700-e63a-463c-808e-66bf4132ba26 | DEPOSIT 24 USD | Balance: 34 USD (v494) source=console
INFO[0000] [2025-11-02T13:20:44.709Z] TX:8d001c33-c64a-44eb-b39f-93ed854c6e02 | DEPOSIT 46 USD | Balance: 159 USD (v496) source=console
INFO[0000] [2025-11-02T13:20:44.709Z] TX:ba848747-f5ea-4ebf-b1b2-3c501c44cd2b | DEPOSIT 79 USD | Balance: 113 USD (v495) source=console
INFO[0000] [2025-11-02T13:20:44.723Z] TX:87d8874d-af6c-47a5-9901-823f616e8add | DEPOSIT 10 USD | Balance: 169 USD (v497) source=console
... (skipped) ...
INFO[0004] ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━
INFO[0004] 📊 Final Account State: source=console
INFO[0004] Balance: 501 USD | Version: 582 | Owner: Alice
INFO[0004] 📈 Changes: source=console
INFO[0004] Balance Change: +491 USD source=console
INFO[0004] Version Change: +88
INFO[0004] ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ source=console
INFO[0004] ✅ Test completed successfully! source=console

  █ THRESHOLDS

    http_req_duration
    ✓ 'p(95)<2000' p(95)=1.02s

    http_req_failed
    ✗ 'rate<0.1' rate=11.76%

    version_conflicts
    ✓ 'rate<0.3' rate=0.00%

  █ TOTAL RESULTS

    checks_total.......: 300    74.544329/s
    checks_succeeded...: 88.00% 264 out of 300
    checks_failed......: 12.00% 36 out of 300

    ✗ status is 200
      ↳  88% — ✓ 88 / ✗ 12
    ✗ response has account data
      ↳  88% — ✓ 88 / ✗ 12
    ✗ response has transaction data
      ↳  88% — ✓ 88 / ✗ 12

    CUSTOM
    account_balance................: avg=499.056818 min=30 med=506 max=983 p(90)=767.9 p(95)=815.95
    deposits_total.................: 48     11.927093/s
    other_errors...................: 12     2.981773/s
    version_conflicts..............: 0.00%  0 out of 0
    withdraws_total................: 52     12.921017/s

    HTTP
    http_req_duration..............: avg=308.79ms min=10.07ms med=91.88ms max=2.11s p(90)=900.17ms p(95)=1.02s
      { expected_response:true }...: avg=332.89ms min=10.07ms med=99.69ms max=2.11s p(90)=932.24ms p(95)=1.24s
    http_req_failed................: 11.76% 12 out of 102
    http_reqs......................: 102    25.345072/s

    EXECUTION
    iteration_duration.............: avg=1.5s min=578.54ms med=1.48s max=3.79s p(90)=2.12s p(95)=2.51s
    iterations.....................: 100    24.84811/s
    vus............................: 1      min=1  max=50
    vus_max........................: 50     min=50 max=50

    NETWORK
    data_received..................: 94 kB  23 kB/s
    data_sent......................: 28 kB  6.9 kB/s

running (0m04.0s), 00/50 VUs, 100 complete and 0 interrupted iterations
concurrent_load ✓ [======================================] 50 VUs  0m04.0s/2m0s  100/100 shared iters
```
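The summary numbers fit together, and working through them shows what the failed http_req_failed threshold does and does not mean. The sketch below redoes the arithmetic with values copied from the output above. Two readings are assumptions rather than facts the output states: that each iteration sends exactly one HTTP request (so the two extra requests in http_reqs = 102 are the initial and final account reads the script logs), and that the 12 failed requests are the 12 other_errors, that is, business-rule rejections such as the 422 insufficient-funds withdrawal in the log. SummaryCheck is a hypothetical helper, not part of the project.

```java
public class SummaryCheck {

    // Values copied from the k6 summary above.
    static final int ITERATIONS = 100, HTTP_REQS = 102, FAILED_REQS = 12;
    static final int CHECKS_PER_ITERATION = 3;
    static final long VERSION_BEFORE = 494, VERSION_AFTER = 582;

    static double failedRatePercent() {
        return 100.0 * FAILED_REQS / HTTP_REQS;
    }

    // Assumes one request per iteration, so every failed request is a failed iteration.
    static int successfulTransactions() {
        return ITERATIONS - FAILED_REQS;
    }

    static long versionDelta() {
        return VERSION_AFTER - VERSION_BEFORE;
    }

    static int totalChecks() {
        return ITERATIONS * CHECKS_PER_ITERATION;
    }

    public static void main(String[] args) {
        System.out.printf("http_req_failed: %.2f%% (threshold rate<0.1: %s)%n",
                failedRatePercent(), failedRatePercent() < 10 ? "pass" : "fail");
        System.out.println("successful transactions: " + successfulTransactions());
        System.out.println("version delta: " + versionDelta());
        System.out.println("checks: " + totalChecks()
                + ", failed: " + FAILED_REQS * CHECKS_PER_ITERATION);
    }
}
```

Under those assumptions, 12 of 102 requests gives 11.76%, which crosses the rate<0.1 threshold even though version_conflicts recorded nothing: the failures are rejected withdrawals, not lost updates. The script's own "Test completed successfully!" line is printed regardless; k6 marks the crossed threshold with ✗ and exits with a non-zero status. More telling for consistency, the account version advanced by exactly 88 (494 to 582), matching the 88 successful transactions, which is what you would expect if each successful transaction committed exactly one versioned update and none was lost.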

Step 8: Monitoring Kubernetes

Using k9s

k9s is a terminal-based UI for Kubernetes. Instead of typing dozens of kubectl commands, you get a fast, interactive dashboard right inside your terminal — perfect for developers, DevOps engineers, and operators who live in the CLI.

```shell
k9s
```

Useful views:

- :node – view all nodes
- :pod – view all pods
- :deployment – view deployments
- :service – view services
- :ingress – view ingress rules
- :pv – view persistent volumes
- :pvc – view persistent volume claims
- :event – view cluster events

Step 9: Cleanup

Stop all VMs but keep state:

```shell
vagrant halt
```

Destroy everything (full reset):

```shell
vagrant destroy -f
```

Key Takeaways

- Infrastructure as Code made simple: spin up a complete multi-node Kubernetes cluster with a single vagrant up. No cloud required.
- Realistic local lab: simulate a production-like environment with a control plane, workers, networking, storage, and ingress, all from your laptop.
- Application and infrastructure together: deploy a real Spring Boot + PostgreSQL system to see how application logic and cluster behavior interact under load.
- Data consistency in action: experiment hands-on with JPA's optimistic and pessimistic locking strategies to see how concurrency control works in practice.
- Performance validation: use k6 to generate concurrent transactions and check system behavior against measured metrics and thresholds.
- Cluster visibility from the CLI: with k9s, monitor nodes, pods, and resources interactively, no GUI required.
- Reproducibility and cleanup: destroy and rebuild your environment anytime with vagrant destroy -f, ensuring the same starting conditions for every run.

Conclusion

We’ve built more than just a demo — we’ve created a fully automated multi-node Kubernetes lab that runs a real Spring Boot + PostgreSQL banking system with live networking, storage, and load testing. From Vagrant provisioning to JPA locking strategies and k6 concurrency simulations, every layer demonstrates how consistency and automation come together in modern systems.

This setup isn’t about production readiness; it’s about understanding. You now have a reproducible playground for experimenting with concurrent transactions, locking strategies, and cluster operations, all on your own machine. It’s a hands-on way to learn how reliability and scalability emerge when software, data, and infrastructure align.


🧡 “Build it. Break it. Rebuild it — that’s how real engineering insight is forged.”

— ArataX