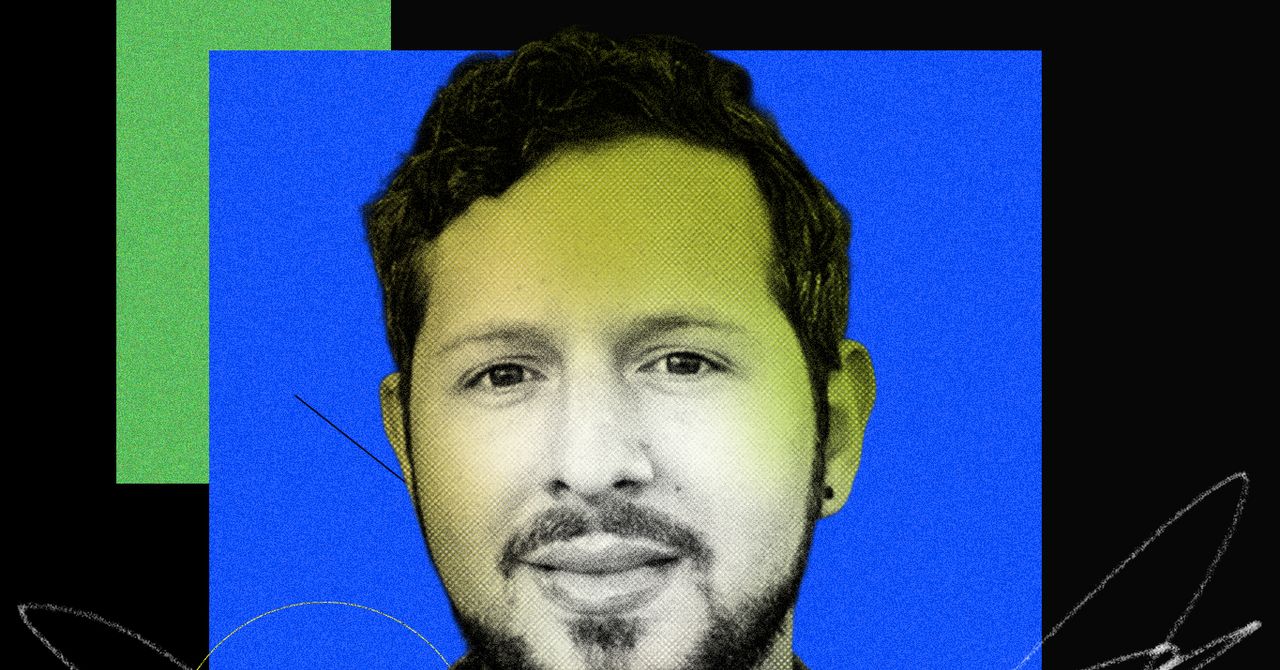

When the history of AI is written, Steven Adler may just end up being its Paul Revere—or at least, one of them—when it comes to safety.

Last month Adler, who spent four years in various safety roles at OpenAI, wrote a piece for The New York Times with a rather alarming title: “I Led Product Safety at OpenAI. Don’t Trust Its Claims About ‘Erotica.’” In it, he laid out the problems OpenAI faced when it came to allowing users to have erotic conversations with chatbots while also protecting them from any impacts those interactions could have on their mental health. “Nobody wanted to be the morality police, but we lacked ways to measure and manage erotic usage carefully,” he wrote. “We decided AI-powered erotica would have to wait.”

Adler wrote his op-ed because OpenAI CEO Sam Altman had recently announced that the company would soon allow “erotica for verified adults.” In response, Adler wrote that he had “major questions” about whether OpenAI had done enough to, in Altman’s words, “mitigate” the mental health concerns around how users interact with the company’s chatbots.

After reading Adler’s piece, I wanted to talk to him. He graciously accepted an offer to come to the WIRED offices in San Francisco, and on this episode of The Big Interview, he talks about what he learned during his four years at OpenAI, the future of AI safety, and the challenge he’s set out for the companies providing chatbots to the world.

This interview has been edited for length and clarity.

KATIE DRUMMOND: Before we get going, I want to clarify two things. One, you are, unfortunately, not the same Steven Adler who played drums in Guns N’ Roses, correct?

STEVEN ADLER: Absolutely correct.

OK, that is not you. And two, you have had a very long career working in technology, and more specifically in artificial intelligence. So, before we get into all of the things, tell us a little bit about your career and your background and what you’ve worked on.

I’ve worked all across the AI industry, particularly focused on safety angles. Most recently, I worked for four years at OpenAI. I worked across, essentially, every dimension of the safety issues you can imagine: How do we make the products better for customers and rule out the risks that are already happening? And looking a bit further down the road, how will we know if AI systems are getting truly extremely dangerous?
