This is a submission for the Google AI Studio Multimodal Challenge.

What I Built

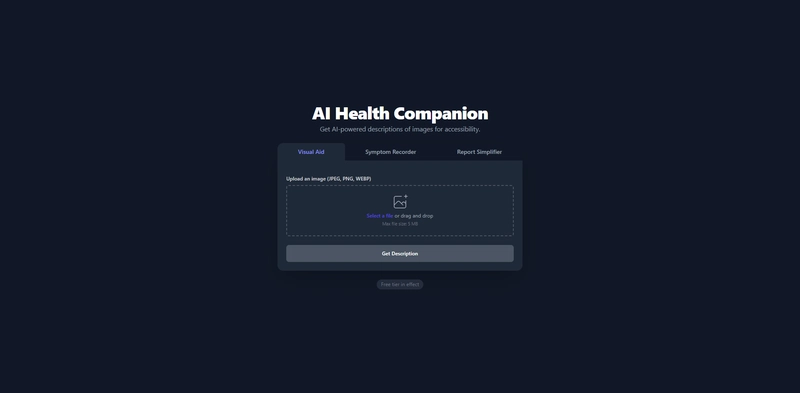

I built AI Health Companion, an accessibility-focused applet designed to support patients and caregivers with three core modes:

- Visual Aid: Users upload an image, and the app describes the scene in plain language, highlighting important objects and potential hazards. Users can also listen to the description as audio, which makes this mode more accessible for people with visual impairments.
- Symptom Recorder: Users record or upload a short audio clip describing their symptoms. The app transcribes the speech and summarizes the key symptoms in simple terms.
- Report Simplifier: Users upload a PDF or image of a lab report, and the app explains the key information in plain language, with a glossary of terms. Users can also listen to the simplified explanation or download it as a PDF for sharing or offline use.

Together, these modes address real problems faced by patients with vision loss, elderly users who communicate better verbally, and anyone struggling with complex medical documents.

Key Features

- Visual Aid with audio playback: Scene description plus text-to-speech output.
- Symptom Recorder: Audio-to-text transcription and symptom summarization.
- Report Simplifier with audio + PDF download: Plain-language explanations that can be listened to or saved as a PDF.
- Strict input limits: 5 MB max for images and 2 MB max for PDFs to stay within the Gemini free tier.
- Deployed directly from Google AI Studio to Cloud Run for a seamless build-and-deploy pipeline.
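The input limits above are easiest to enforce before any request reaches Gemini. The following is a minimal sketch, not the applet's actual code: it assumes the file's MIME type is known, that "MB" means 1024 × 1024 bytes, and that other types (such as audio) are not size-checked, since no limit is stated for them. The function name `check_upload` is hypothetical.

```python
# Hypothetical pre-upload check mirroring the limits stated above:
# 5 MB for images and 2 MB for PDFs. Assumes 1 MB = 1024 * 1024 bytes.
MB = 1024 * 1024
IMAGE_LIMIT = 5 * MB
PDF_LIMIT = 2 * MB


def check_upload(mime_type: str, size_bytes: int) -> None:
    """Raise ValueError if an image or PDF exceeds its size limit."""
    if mime_type.startswith("image/"):
        limit = IMAGE_LIMIT
    elif mime_type == "application/pdf":
        limit = PDF_LIMIT
    else:
        # No limit is stated for other types (e.g. audio), so skip them.
        return
    if size_bytes > limit:
        raise ValueError(
            f"{mime_type} is {size_bytes / MB:.1f} MB; the limit is {limit // MB} MB"
        )
```

For example, `check_upload("image/png", 4 * MB)` passes silently, while `check_upload("application/pdf", 3 * MB)` raises. Running the check on the client avoids spending a request (and free-tier quota) on a file the applet would reject anyway.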

Demo

🔗 Live Applet on Cloud Run: https://ai-health-companion-390658277222.us-west1.run.app/

🖼️ Screenshots:

👁️ Visual Aid

📝 Symptom Recorder

📊 Report Simplifier

How I Used Google AI Studio

- I created the applet in Google AI Studio’s Build mode, where I designed and refined the prompts for each mode.
- I specified structured JSON outputs directly in the system instructions so that responses could be parsed reliably.
- Once the applet was stable, I used AI Studio’s one-click deploy to publish it directly to Google Cloud Run.
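Because the JSON format is requested in the system instructions, the client still has to parse and check what comes back. Here is a minimal sketch for the Symptom Recorder mode; the field names `transcript` and `symptoms` and the function `parse_symptom_response` are hypothetical, since the applet's actual schema is not published here. It also tolerates a Markdown code fence around the JSON, which models sometimes add.

```python
import json

# Hypothetical response shape for the Symptom Recorder mode.
REQUIRED_FIELDS = {"transcript": str, "symptoms": list}


def parse_symptom_response(raw: str) -> dict:
    """Parse the model's JSON text and check the expected fields and types."""
    text = raw.strip()
    # Strip a surrounding ```json ... ``` fence if the model added one.
    if text.startswith("```"):
        text = text.split("\n", 1)[1] if "\n" in text else ""
        text = text.rsplit("```", 1)[0]
    data = json.loads(text)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"missing or invalid field: {field}")
    if not all(isinstance(s, str) for s in data["symptoms"]):
        raise ValueError("symptoms must be a list of strings")
    return data
```

Failing loudly on a malformed response lets the UI show a retry message instead of rendering a half-empty summary.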

Models Used

- Gemini 2.5 Flash is the default model, chosen for its speed and efficiency.
- For more complex reasoning tasks, such as analyzing detailed reports, the applet can optionally use Gemini 2.5 Pro.
- The applet relies on both models’ multimodal capabilities for image understanding, audio transcription, and text simplification.
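The Flash-by-default, Pro-on-request choice can be expressed as a small routing rule. This is a hypothetical illustration: the model IDs `gemini-2.5-flash` and `gemini-2.5-pro` are the public Gemini API names, but the mode keys, the `pro_enabled` flag, and `pick_model` are assumptions, not the applet's actual logic.

```python
# Public Gemini API model IDs; the routing rule itself is hypothetical.
FLASH = "gemini-2.5-flash"
PRO = "gemini-2.5-pro"

# Modes assumed to benefit from deeper reasoning when Pro is enabled.
COMPLEX_MODES = {"report_simplifier"}


def pick_model(mode: str, pro_enabled: bool = False) -> str:
    """Default to Flash; use Pro only for complex modes when it is enabled."""
    if pro_enabled and mode in COMPLEX_MODES:
        return PRO
    return FLASH
```

Keeping Flash as the fallback means every mode still works when Pro is disabled, and only report analysis pays Pro's extra latency.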

Multimodal Features

- Image Understanding (Visual Aid): Gemini processes uploaded images to describe content, list objects, and identify hazards. Added feature: users can listen to the description as speech.
- Audio Understanding (Symptom Recorder): Gemini transcribes patient voice notes and summarizes them into key symptoms.
- Document + Image Understanding (Report Simplifier): Gemini explains lab reports in everyday language with glossary terms. Added features: users can listen to the simplified explanation and download the result as a PDF.
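All three modes share one request pattern: a single inline file (image, audio, or PDF) plus a text prompt. The sketch below builds a request body in the shape accepted by the Gemini REST `generateContent` endpoint (`contents` → `parts` → `inline_data` / `text`). It does not send the request. The prompt wording and the names `PROMPTS` and `build_request` are hypothetical, not the applet's real instructions.

```python
import base64

# Hypothetical per-mode prompts; the applet's real instructions are not published.
PROMPTS = {
    "visual_aid": "Describe this scene in plain language. List important objects and any hazards.",
    "symptom_recorder": "Transcribe this voice note, then summarize the key symptoms in simple terms.",
    "report_simplifier": "Explain this lab report in everyday language and add a glossary of terms.",
}


def build_request(mode: str, file_bytes: bytes, mime_type: str) -> dict:
    """Build a generateContent request body with one inline file and one prompt."""
    return {
        "contents": [
            {
                "role": "user",
                "parts": [
                    {
                        # The file travels as base64 text next to the prompt.
                        "inline_data": {
                            "mime_type": mime_type,
                            "data": base64.b64encode(file_bytes).decode("ascii"),
                        }
                    },
                    {"text": PROMPTS[mode]},
                ],
            }
        ]
    }
```

Only the MIME type and prompt change between modes (for example `image/jpeg` for Visual Aid, `audio/webm` for Symptom Recorder, `application/pdf` for Report Simplifier), which is why one model family can serve all three.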

By combining image, audio, and text processing through Gemini 2.5 Flash/Pro, the applet delivers a practical, real-world healthcare companion experience that is lightweight, privacy-friendly, and accessible.