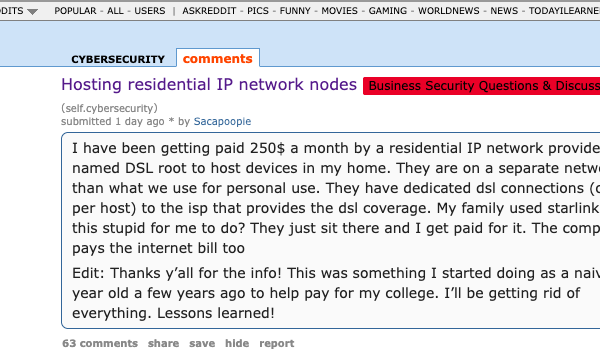

Student-Teacher Distillation: A Complete Guide for Model Compression

Part 1 of our Deep Learning Model Optimization Series

Deploying large, powerful machine learning models in production environments often presents significant challenges. Student-teacher distillation addresses this by compressing the knowledge of a complex model (the teacher) into a smaller, more efficient one (the student) without sacrificing too much accuracy. This comprehensive…