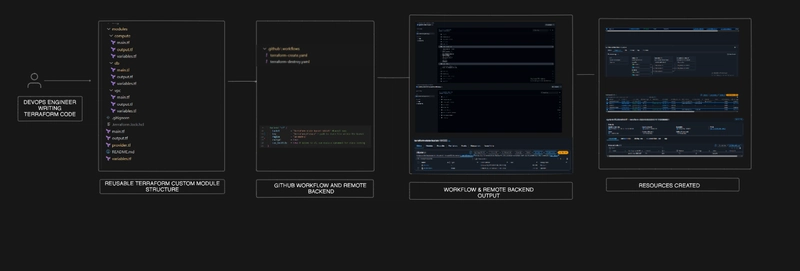

Infrastructure automation is at the heart of modern DevOps. In this project, I moved beyond running `terraform apply` locally and built a fully automated, modular, version-controlled infrastructure workflow using Terraform, AWS, and GitHub Actions.

This project provisions AWS infrastructure through custom Terraform modules, manages Terraform state securely with S3 as a remote backend, leverages S3’s native state locking mechanism, and automates the provisioning and destruction process through GitHub Actions.

This project simulates a production-ready Infrastructure as Code (IaC) workflow that teams can use for scalable, consistent, and automated deployments.

It also prepares the foundation for the next phase: Automated Multi-Environment Deployment with Terraform & CI/CD, which I am currently building.

Prerequisites

- Basic understanding of Terraform (providers, modules, state, variables).

- AWS account with:

  - An S3 bucket for remote backend storage.

  - Programmatic access via an IAM user or role.

- GitHub repository for automation.

- GitHub Actions runner permissions to deploy to AWS.

- Terraform CLI installed locally (S3 native state locking requires Terraform 1.10 or later).

- IDE (VS Code recommended).

Key Terms to Know

- Infrastructure as Code (IaC): The practice of managing and provisioning cloud infrastructure through code instead of manual processes.

- Terraform Module: A reusable, version-controlled set of Terraform configuration files that defines one logical piece of infrastructure, such as a VPC.

- Remote Backend: Centralised storage for Terraform state files (in this case, AWS S3).

- State Locking: A mechanism that prevents concurrent operations from modifying the same state file.

- GitHub Actions: GitHub's CI/CD platform, used here to automate workflows such as `terraform plan` and `terraform apply`.

Project Overview

The project is built to demonstrate how to structure Terraform code into custom modules, manage state remotely, and automate the provisioning/destruction process through CI/CD.

How Modules Communicate (Data Exchange Between Modules)

Terraform does not let one child module reference another child module's resources directly, because each child module is meant to be self-contained and reusable. Instead, modules exchange data through outputs and input variables, with the root module acting as the connector.

A child module declares input variables. When the root module calls that child module, it must supply a value for every variable that does not have a default.

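For example, consider a VPC child module and an EC2 child module. The EC2 module needs a subnet ID, but that subnet is created by the VPC module. The way to pass it across is to:

- Expose the subnet ID as an output in the VPC module.

- Declare an input variable for the subnet ID in the EC2 module.

- In the root module, pass the VPC module's output into the EC2 module's variable.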
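Here is a minimal sketch of that wiring. The module paths (`./modules/vpc`, `./modules/ec2`), the variable and output names, the CIDR ranges and the AMI ID are illustrative assumptions, not the project's actual code. Comments mark which file each block would live in. The EC2 module also shows an optional variable with a default next to required ones.

```hcl
# ---- modules/vpc/variables.tf (hypothetical) ----
variable "vpc_cidr" {
  type = string
}

variable "public_subnet_cidr" {
  type = string
}

# ---- modules/vpc/main.tf ----
resource "aws_vpc" "this" {
  cidr_block = var.vpc_cidr
}

resource "aws_subnet" "public" {
  vpc_id     = aws_vpc.this.id
  cidr_block = var.public_subnet_cidr
}

# ---- modules/vpc/outputs.tf: expose the subnet ID to the caller ----
output "public_subnet_id" {
  value = aws_subnet.public.id
}

# ---- modules/ec2/variables.tf (hypothetical): accept the subnet ID as input ----
variable "subnet_id" {
  type = string
}

variable "ami_id" {
  type = string
}

variable "instance_type" {
  type    = string
  default = "t3.micro" # optional: callers may omit it
}

# ---- modules/ec2/main.tf ----
resource "aws_instance" "this" {
  ami           = var.ami_id
  instance_type = var.instance_type
  subnet_id     = var.subnet_id
}

# ---- main.tf (root module): the connector between the two child modules ----
module "vpc" {
  source             = "./modules/vpc"
  vpc_cidr           = "10.0.0.0/16"
  public_subnet_cidr = "10.0.1.0/24"
}

module "ec2" {
  source    = "./modules/ec2"
  ami_id    = "ami-0123456789abcdef0"       # placeholder AMI ID
  subnet_id = module.vpc.public_subnet_id   # VPC output feeds EC2 input
  # instance_type is omitted, so the module default applies
}
```

Because `module.ec2` references `module.vpc.public_subnet_id`, Terraform infers the dependency on its own and creates the subnet before the instance. No explicit `depends_on` is needed.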

Problems My Project Solved

- Terraform state conflicts or lost state files: I used an S3 remote backend for centralised state management and enabled S3 native state locking. This keeps the lock as a lock file in the state bucket, so no separate DynamoDB table is needed. It simplifies the backend configuration and removes the cost and complexity of an extra table. The lock ensures that only one `terraform plan` or `terraform apply` runs against the state at a time.

- Code duplication: I modularised my Terraform resources into reusable custom modules.

- Manual provisioning of resources: I automated resource provisioning with a GitHub Actions workflow. So the workflow could authenticate to AWS without hardcoded credentials, I stored the credentials as repository secrets.

- Lack of CI/CD integration: I built a GitHub Actions pipeline that initialises Terraform, validates the code, and then runs `terraform plan` and `terraform apply`. I also added a manual trigger to destroy the resources.
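Below is a minimal sketch of the Terraform configuration behind the state-locking and credentials points. It assumes an existing state bucket. The bucket name, state key, region and provider version pin are placeholders, not the project's real values. `use_lockfile` is the S3 backend argument that enables native locking, and no `dynamodb_table` is set. It also assumes the CI job exposes the repository secrets as the standard `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables.

```hcl
# backend.tf / providers.tf (sketch; all names and values are placeholders)
terraform {
  # S3 native state locking (use_lockfile) requires Terraform 1.10 or later
  required_version = ">= 1.10"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # example version pin, adjust as needed
    }
  }

  # Backend blocks cannot reference variables, so values are literals
  backend "s3" {
    bucket       = "example-terraform-state" # existing bucket (placeholder)
    key          = "project/terraform.tfstate"
    region       = "us-east-1"
    encrypt      = true
    use_lockfile = true # lock object <key>.tflock in the same bucket, no DynamoDB table
  }
}

variable "aws_region" {
  type    = string
  default = "us-east-1" # placeholder region
}

# No credentials in code: the AWS provider reads AWS_ACCESS_KEY_ID and
# AWS_SECRET_ACCESS_KEY from the environment, which the CI job can
# populate from repository secrets.
provider "aws" {
  region = var.aws_region
}
```

After adding or changing the backend block, run `terraform init` so Terraform picks up the new backend. Add `-migrate-state` if state already exists locally.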

Challenges and Fixes

- Error: I encountered a permission error while trying to use an S3 bucket as a remote backend.

- Fix: I added an inline policy to the IAM user that follows the principle of least privilege, granting only the permissions the user needs for its task.
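One way to express such a least-privilege policy in Terraform is sketched below. It is based on the permissions the Terraform S3 backend documentation lists for state access and native lock files. The bucket name, state key, policy name and IAM user name are hypothetical placeholders, and the author's actual inline policy may differ.

```hcl
# Sketch: least-privilege S3 backend access for a hypothetical IAM user
locals {
  state_bucket = "example-terraform-state"   # placeholder
  state_key    = "project/terraform.tfstate" # placeholder
}

data "aws_iam_policy_document" "tf_backend" {
  # List the bucket so Terraform can locate the state object
  statement {
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::${local.state_bucket}"]
  }

  # Read and write the state file itself
  statement {
    actions   = ["s3:GetObject", "s3:PutObject"]
    resources = ["arn:aws:s3:::${local.state_bucket}/${local.state_key}"]
  }

  # Create, read, and release the native lock file (<key>.tflock)
  statement {
    actions   = ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"]
    resources = ["arn:aws:s3:::${local.state_bucket}/${local.state_key}.tflock"]
  }
}

# Attach the document as an inline policy on the user
resource "aws_iam_user_policy" "tf_backend" {
  name   = "terraform-s3-backend"   # placeholder policy name
  user   = "example-ci-user"        # placeholder IAM user
  policy = data.aws_iam_policy_document.tf_backend.json
}
```

These statements cover only the backend. The same user still needs permissions for whatever resources the modules create. The policy must also be applied by an identity that already has IAM permissions, or created in the console.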

Lessons Learned

- Modularisation makes scaling Terraform projects easier.

- Remote backends are crucial for team collaboration.

- Outputs and variables are the lifeblood of inter-module communication.

- Automation is not only about speed; it also adds consistency and traceability.

Conclusion

This project laid the foundation for a fully automated Infrastructure as Code workflow, from custom modules to remote state management and CI/CD automation.

In my next article, I will automate multi-environment deployment with Terraform & CI/CD, where environments like Dev, Staging, and Production are automatically provisioned using the same pipeline.

Need Terraform code? Check it here

Did this help you understand how Terraform modules communicate? Drop a comment!