By now, my Cloud Resume journey had already given me:

- ✅ A strong AWS foundation (Chunk 0)

- ✅ A static front-end (Chunk 1)

- ✅ A serverless API visitor counter (Chunk 2)

- ✅ Automated deployments + smoke testing (Chunk 3)

But there was still one major gap: my infrastructure wasn’t yet described in code, and my software supply chain needed extra hardening.

This chunk was all about professional-grade DevOps practices:

- Use Terraform to manage AWS resources as code.

- Secure the supply chain for my build + deployment pipeline.

- Document the system with architecture diagrams.

- Share the final product + GitHub repo for transparency.

🌍 Terraforming the Cloud Resume

ClickOps (manually clicking through the AWS console) works fine while you're learning, but in production it's a recipe for configuration drift and risk.

Terraform solved that.

I converted every major AWS component into modular Terraform code, covering:

- S3 — hosts static assets with public access disabled, versioning enabled, and `force_destroy = false` to prevent accidental wipes.

- CloudFront — handles global delivery via an Origin Access Control (OAC), with ACM-managed TLS certificates and custom error responses.

- DynamoDB — stores visit counts and acts as the lock table for Terraform’s remote state.

- Lambda + API Gateway — backend logic for the visitor counter API.

- IAM roles & policies — fine-grained permissions following the least-privilege principle.

- Route 53 & ACM — DNS management and TLS certificate provisioning for the custom domain.

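Example Terraform snippet for the S3 bucket module (AWS provider v4+ style, where versioning and lifecycle rules are separate resources):

```hcl
resource "aws_s3_bucket" "resume_site" {
  bucket        = "my-cloud-resume-site"
  force_destroy = false # refuse to delete the bucket while it still holds objects
}

resource "aws_s3_bucket_versioning" "resume_site" {
  bucket = aws_s3_bucket.resume_site.id
  versioning_configuration {
    status = "Enabled"
  }
}

# Delete noncurrent object versions 30 days after they are superseded
resource "aws_s3_bucket_lifecycle_configuration" "resume_site" {
  bucket     = aws_s3_bucket.resume_site.id
  depends_on = [aws_s3_bucket_versioning.resume_site]
  rule {
    id     = "expire-old-versions"
    status = "Enabled"
    filter {} # apply the rule to every object
    noncurrent_version_expiration {
      noncurrent_days = 30
    }
  }
}
```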
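The S3 bullet above says public access is disabled, which the bucket snippet doesn't show on its own. Here is a minimal sketch of how that could look, assuming the `aws_s3_bucket.resume_site` resource from the snippet and AWS provider v4 or later; the resource label is illustrative, not taken from the actual repo.

```hcl
# Block every form of public access to the site bucket.
# CloudFront still reaches the objects through OAC and a scoped bucket policy.
resource "aws_s3_bucket_public_access_block" "resume_site" {
  bucket = aws_s3_bucket.resume_site.id

  block_public_acls       = true # reject requests that add public ACLs
  ignore_public_acls      = true # ignore any public ACLs that already exist
  block_public_policy     = true # reject bucket policies that grant public access
  restrict_public_buckets = true # if a public policy exists, only AWS services and the owner get in
}
```

With all four flags on, a bucket policy granting anonymous access is rejected, while a policy limited to one CloudFront distribution through an `AWS:SourceArn` condition is not treated as public, so OAC keeps working.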

With Terraform, my infrastructure is now:

- Repeatable → the entire stack can be recreated in minutes.

- Auditable → every change tracked via Git history.

- Secure → hardening built directly into the configuration.

- Modular → easily reused for staging or new environments.

🧱 Remote state is stored in S3 and locked in DynamoDB to avoid concurrent changes during CI/CD runs, the same pattern used in enterprise environments.
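A rough sketch of that remote-state setup follows. The bucket, key, region, and table names are placeholders I made up; the one fixed detail is that the S3 backend expects the lock table's partition key to be a string attribute named `LockID`.

```hcl
# --- Root module (e.g. backend.tf): state in S3, lock in DynamoDB ---
terraform {
  backend "s3" {
    bucket         = "my-cloud-resume-tfstate" # placeholder
    key            = "prod/terraform.tfstate"  # placeholder
    region         = "us-east-1"               # placeholder
    dynamodb_table = "terraform-locks"         # lock taken before every plan/apply
    encrypt        = true                      # server-side encryption for the state object
  }
}

# --- Bootstrap config, applied once before the backend above is used ---
resource "aws_dynamodb_table" "terraform_locks" {
  name         = "terraform-locks"
  billing_mode = "PAY_PER_REQUEST" # locks are tiny and infrequent

  hash_key = "LockID" # name required by the S3 backend

  attribute {
    name = "LockID"
    type = "S"
  }
}
```

The lock table and state bucket have to exist before `terraform init` can use them, which is why they usually live in a small bootstrap configuration. Newer Terraform releases also offer S3-native locking through the backend's `use_lockfile` argument and have deprecated the DynamoDB option, so it is worth checking the backend docs for the version you run.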

📖 Reference: Terraform Extension — Cloud Resume Challenge

🔐 Securing the Software Supply Chain

A DevOps pipeline is only as secure as its weakest link, so I focused on supply chain hardening this round.

Here’s how I reinforced mine:

- Removed long-lived AWS credentials → replaced with OIDC for GitHub Actions (introduced in Chunk 3).

- Pinned GitHub Actions versions → no `@latest` tags, reducing the risk of malicious updates.

- Checksum verification for dependencies → ensuring the integrity of Hugo themes, Node modules, and Terraform providers.

- IAM permissions scoped tightly → CI/CD roles can deploy only the resources they own.

- Lifecycle + versioning policies → applied to S3 and artifacts for rollback and forensic traceability.
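For the Terraform-provider part of the checksum bullet, one plausible setup is to pin provider versions in `required_providers` and commit the dependency lock file. The version constraints below are assumptions, not the project's actual pins:

```hcl
terraform {
  required_version = ">= 1.5.0" # assumed minimum CLI version

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # any 5.x release, never a surprise major upgrade
    }
  }
}
```

`terraform init` writes the provider's hashes to `.terraform.lock.hcl`; once that file is committed, later runs in CI refuse a provider package whose checksum doesn't match. Running `terraform providers lock -platform=linux_amd64 -platform=darwin_arm64` records hashes for both a Linux runner and an Apple Silicon laptop.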

Every deployment now runs with temporary, scoped credentials and logs all actions through AWS CloudTrail.
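The OIDC piece could be expressed in Terraform roughly like this. It assumes the GitHub OIDC provider from Chunk 3 already exists in the account and that deployments run only from the `main` branch of `tlklein/portfolio-website`; the role name is hypothetical, and the actual deploy permissions would be attached as separate, narrowly scoped policies.

```hcl
# Look up the GitHub OIDC identity provider registered earlier (assumed to exist).
data "aws_iam_openid_connect_provider" "github" {
  url = "https://token.actions.githubusercontent.com"
}

# Trust policy: only workflows from one repo and branch may assume the role.
data "aws_iam_policy_document" "github_trust" {
  statement {
    actions = ["sts:AssumeRoleWithWebIdentity"]

    principals {
      type        = "Federated"
      identifiers = [data.aws_iam_openid_connect_provider.github.arn]
    }

    condition {
      test     = "StringEquals"
      variable = "token.actions.githubusercontent.com:aud"
      values   = ["sts.amazonaws.com"]
    }

    condition {
      test     = "StringEquals"
      variable = "token.actions.githubusercontent.com:sub"
      values   = ["repo:tlklein/portfolio-website:ref:refs/heads/main"] # assumed branch
    }
  }
}

resource "aws_iam_role" "github_deploy" {
  name                 = "github-actions-deploy" # hypothetical name
  assume_role_policy   = data.aws_iam_policy_document.github_trust.json
  max_session_duration = 3600 # temporary credentials last at most one hour
}
```

Pinning the `sub` claim to a single repo and branch is what keeps a workflow in some other repository, or on a feature branch, from assuming the role.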

📖 Reference: Securing Your Software Supply Chain — Cloud Resume Challenge

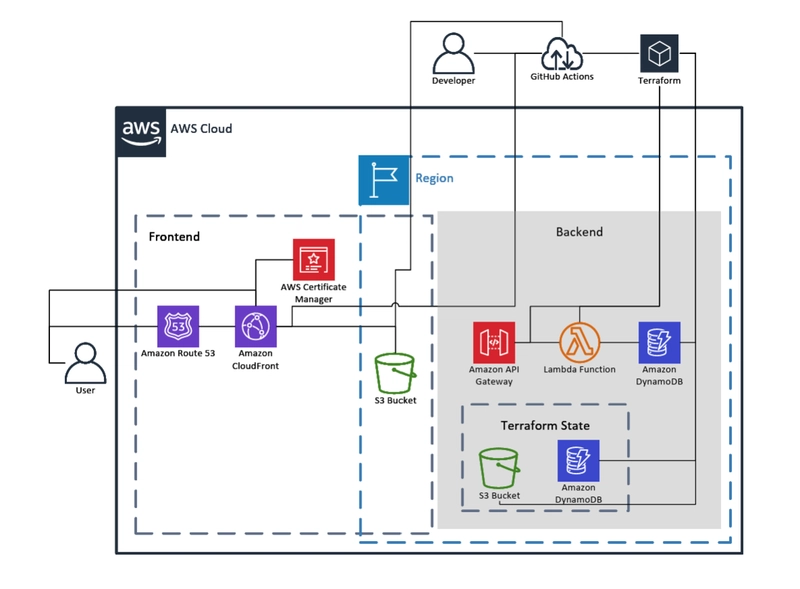

🏗️ Architecture Diagrams

Sometimes a visual story says more than 1,000 lines of Terraform.

These diagrams document how the system works, end to end.

🔹 High-Level Overview

- Frontend: Hugo → S3 → CloudFront

- Backend: API Gateway → Lambda → DynamoDB

- CI/CD: GitHub Actions (OIDC) → Terraform → AWS

- Monitoring: Billing alerts, versioning, and logs baked in

🔹 Front-end

- Static files stored in S3 with encryption + access via CloudFront OAC

- Lifecycle rules manage old versions

- Cache invalidation triggered automatically on deployment
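A sketch of the encryption and OAC wiring described above, assuming the `aws_s3_bucket.resume_site` bucket from the S3 snippet and a distribution named `aws_cloudfront_distribution.resume_site` (hypothetical here) whose S3 origin sets `origin_access_control_id` to the OAC below:

```hcl
# Default encryption at rest for every object in the site bucket.
resource "aws_s3_bucket_server_side_encryption_configuration" "resume_site" {
  bucket = aws_s3_bucket.resume_site.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256" # SSE-S3; SSE-KMS would also need a key policy allowing CloudFront
    }
  }
}

# Origin Access Control: CloudFront signs every request to S3 with SigV4.
resource "aws_cloudfront_origin_access_control" "resume_site" {
  name                              = "resume-site-oac" # hypothetical name
  origin_access_control_origin_type = "s3"
  signing_behavior                  = "always"
  signing_protocol                  = "sigv4"
}

# Bucket policy: only this one distribution may read objects.
data "aws_iam_policy_document" "resume_site" {
  statement {
    actions   = ["s3:GetObject"]
    resources = ["${aws_s3_bucket.resume_site.arn}/*"]

    principals {
      type        = "Service"
      identifiers = ["cloudfront.amazonaws.com"]
    }

    condition {
      test     = "StringEquals"
      variable = "AWS:SourceArn"
      values   = [aws_cloudfront_distribution.resume_site.arn] # hypothetical distribution
    }
  }
}

resource "aws_s3_bucket_policy" "resume_site" {
  bucket = aws_s3_bucket.resume_site.id
  policy = data.aws_iam_policy_document.resume_site.json
}
```

The `AWS:SourceArn` condition ties read access to a single distribution, so even a second CloudFront distribution in the same account can't read the bucket.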

🔹 Back-end

- Lambda handles visitor tracking logic

- DynamoDB stores unique + total hits

- API Gateway exposes secure endpoints with error fallback
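To make the least-privilege idea concrete for the back end, here is a hypothetical table plus an inline policy for the counter Lambda. The table name, the `id` key (for example, one item for the total count and one per unique visitor), and the `aws_iam_role.counter_lambda` role are all assumptions:

```hcl
# Visitor-counter table; on-demand billing suits low, spiky traffic.
resource "aws_dynamodb_table" "visitors" {
  name         = "cloud-resume-visitors" # hypothetical name
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "id"

  attribute {
    name = "id"
    type = "S"
  }
}

# Least privilege: the counter function may only read and update items in this table.
data "aws_iam_policy_document" "counter_lambda" {
  statement {
    actions   = ["dynamodb:GetItem", "dynamodb:UpdateItem"]
    resources = [aws_dynamodb_table.visitors.arn]
  }
}

resource "aws_iam_role_policy" "counter_lambda" {
  name   = "visitor-counter-dynamodb"
  role   = aws_iam_role.counter_lambda.id # hypothetical Lambda execution role
  policy = data.aws_iam_policy_document.counter_lambda.json
}
```

Scoping `resources` to the table ARN means a bug or compromise in the function can't touch the Terraform lock table or anything else in DynamoDB. The function would still need basic CloudWatch Logs permissions, for example via the AWS-managed `AWSLambdaBasicExecutionRole` policy.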

🔹 S3 Lifecycle Management

- Noncurrent object versions automatically transition to cheaper storage classes

- Retention and cleanup policies guard against bloat and accidental deletion
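One way to express those rules is a lifecycle configuration that tiers noncurrent versions before expiring them. The 30/90/7-day values are illustrative, and because a bucket has only one lifecycle configuration, this would replace the simpler expiration rule in the S3 module rather than sit alongside it:

```hcl
resource "aws_s3_bucket_lifecycle_configuration" "resume_site" {
  bucket = aws_s3_bucket.resume_site.id

  rule {
    id     = "tier-then-expire-old-versions"
    status = "Enabled"
    filter {} # apply to every object in the bucket

    # After 30 days as a noncurrent version, move to Standard-IA...
    noncurrent_version_transition {
      noncurrent_days = 30
      storage_class   = "STANDARD_IA"
    }

    # ...and delete it permanently after 90 days.
    noncurrent_version_expiration {
      noncurrent_days = 90
    }

    # Clean up multipart uploads that never finished.
    abort_incomplete_multipart_upload {
      days_after_initiation = 7
    }
  }
}
```

Standard-IA bills each object as at least 128 KB and expects objects to stay for 30 days, so for a site made mostly of small HTML and CSS files the cleanup half of this rule probably matters more than the tiering half.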

🧱 AWS Account Structure & Hardening

Beyond just code, I also aligned my AWS account structure with production-grade practices:

- AWS Organization with `production` and `test` OUs.

- Separate accounts for isolated workloads.

- MFA enforced across all users.

- Root account locked and only used for billing.

- Budget alerts trigger at $0.01 to catch any unintended spend early.
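A minimal sketch of the organization layout and the $0.01 budget in Terraform, run from the management account. The budget name and email address are placeholders, and an organization created earlier in the console would need to be imported rather than created:

```hcl
# Organization root plus the two OUs (management account only).
resource "aws_organizations_organization" "this" {
  feature_set = "ALL"
}

resource "aws_organizations_organizational_unit" "production" {
  name      = "production"
  parent_id = aws_organizations_organization.this.roots[0].id
}

resource "aws_organizations_organizational_unit" "test" {
  name      = "test"
  parent_id = aws_organizations_organization.this.roots[0].id
}

# Monthly cost budget that emails once actual spend goes past $0.01.
resource "aws_budgets_budget" "zero_spend" {
  name         = "zero-spend-alert" # placeholder
  budget_type  = "COST"
  limit_amount = "0.01"
  limit_unit   = "USD"
  time_unit    = "MONTHLY"

  notification {
    comparison_operator        = "GREATER_THAN"
    threshold                  = 100 # percent of the $0.01 limit
    threshold_type             = "PERCENTAGE"
    notification_type          = "ACTUAL"
    subscriber_email_addresses = ["you@example.com"] # placeholder
  }
}
```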

This structure ensures that even as the project scales, security, cost control, and governance remain intact.

🌐 View the Final Product

✨ Live Resume Website: https://www.trinityklein.dev/

📂 GitHub Repository: https://github.com/tlklein/portfolio-website/

Both the code and infrastructure are public, versioned, and reproducible.

🚀 Lessons Learned in Chunk 4

By completing this chunk, I achieved:

✅ Infrastructure as Code with Terraform

✅ Stronger software supply chain security

✅ Visual documentation via architecture diagrams

✅ Multi-account AWS setup for safety + scalability

✅ A fully public and auditable portfolio project

At this stage, the Cloud Resume Challenge evolved from a portfolio exercise into a production-ready personal platform.

📚 Helpful Resources

Resources that helped along the way:

💬 Let’s Connect

That wraps up Chunk 4 of the Cloud Resume Challenge! 🎉

Which IaC tool do you prefer for your cloud builds: Terraform, CDK, or CloudFormation?

Drop your thoughts below; I’d love to compare notes with other builders. 👇



If you’re following this challenge, or just passing by, I’d love to connect!

I’m always happy to help if you need guidance, want to swap ideas, or just chat about tech. 🚀

I’m also open to new opportunities, so if you have any inquiries or collaborations in mind, let me know!