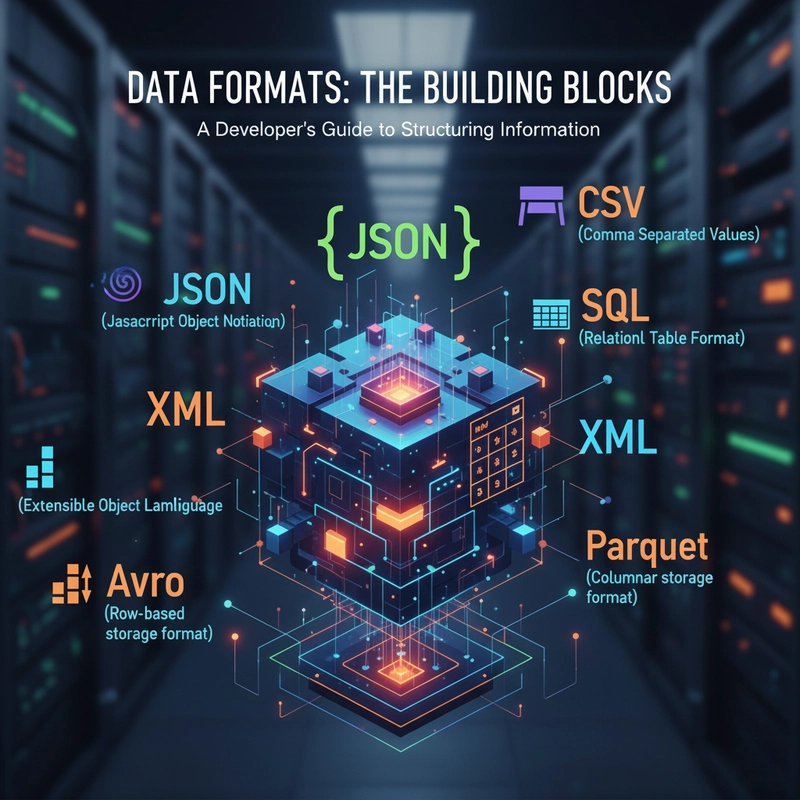

Picking the right data format can make your analytics workflow faster, leaner, and easier to manage. Whether you’re wrangling data in the cloud or locally, understanding how data is structured is key. In this post, we’ll dive into six popular formats: CSV, SQL (Relational Tables), JSON, Parquet, XML, and Avro.

To keep it practical, I’ve created a small dataset of student exam records with four fields: name (string), register_number (integer), subject (string), and marks (integer). The dataset is:

- Alice, 101, Math, 90

- Bob, 102, Science, 85

- Charlie, 103, Math, 95

For each format, I’ll explain what it is, show the dataset in a consistent, readable way (using text or tables, with code blocks only for file-based formats or scripts), and list pros and cons.

1. CSV (Comma-Separated Values)

CSV is the simplest format around. It’s a text file where each row is a line, and values are separated by commas. You’ve probably opened one in Excel or Pandas. It’s super easy to use but doesn’t enforce data types, which can cause issues with complex data.

Dataset in CSV:

name,register_number,subject,marks

Alice,101,Math,90

Bob,102,Science,85

Charlie,103,Math,95

Pros

- Human-readable and easy to edit

- Supported by every tool (Pandas, Spark, Excel)

- Perfect for quick data sharing

Cons

- No schema or type enforcement

- Inefficient for large or nested datasets

2. SQL (Relational Table Format)

SQL organizes data in relational database tables, with columns defining data types and rows as records. It’s not a file format but a structured way to store and query data using SQL commands.

Dataset in SQL:

CREATE TABLE students (

name VARCHAR(255),

register_number INT,

subject VARCHAR(255),

marks INT

);

INSERT INTO students (name, register_number, subject, marks)

VALUES

('Alice', 101, 'Math', 90),

('Bob', 102, 'Science', 85),

('Charlie', 103, 'Math', 95);

| name | register_number | subject | marks |

|---|---|---|---|

| Alice | 101 | Math | 90 |

| Bob | 102 | Science | 85 |

| Charlie | 103 | Math | 95 |

Pros

- Strict data types and schema

- Optimized for querying and joins

- Ideal for structured analytics

Cons

- Requires a database system

- Overkill for simple tasks

3. JSON (JavaScript Object Notation)

JSON is a text-based format using key-value pairs and arrays. It’s lightweight, supports nested data, and is a staple for APIs and NoSQL databases like MongoDB.

Dataset in JSON:

[

{"name": "Alice", "register_number": 101, "subject": "Math", "marks": 90},

{"name": "Bob", "register_number": 102, "subject": "Science", "marks": 85},

{"name": "Charlie", "register_number": 103, "subject": "Math", "marks": 95}

]

Pros

- Flexible for semi-structured or nested data

- Easy to parse in Python, JavaScript, etc.

- Common in APIs and web apps

Cons

- Verbose, increasing file size

- Slower for analytics than binary formats

4. Parquet (Columnar Storage Format)

Parquet is a binary, columnar format optimized for analytics. It stores data by columns, not rows, which boosts compression and query speed in tools like Apache Spark or Pandas.

Dataset in Parquet:

import pandas as pd

import pyarrow as pa

import pyarrow.parquet as pq

data = {

'name': ['Alice', 'Bob', 'Charlie'],

'register_number': [101, 102, 103],

'subject': ['Math', 'Science', 'Math'],

'marks': [90, 85, 95]

}

df = pd.DataFrame(data)

table = pa.Table.from_pandas(df)

pq.write_table(table, 'students.parquet')

Pros

- Efficient for columnar queries and compression

- Great for big data tools (Spark, Dask)

- Supports complex schemas

Cons

- Not human-readable

- Requires specialized libraries

5. XML (Extensible Markup Language)

XML uses tags to create a tree-like structure. It’s customizable, supports hierarchies, and is common in enterprise systems and web services.

Dataset in XML:

Pros

- Supports complex, hierarchical data

- Extensible with custom tags

- Common in enterprise systems

Cons

- Verbose and slow to process

- Less popular than JSON for modern apps

6. Avro (Row-based Storage Format)

Avro is a binary, row-based format for efficient serialization. It embeds a schema with the data, making it self-describing and ideal for schema evolution.

Dataset in Avro:

import fastavro

schema = {

"type": "record",

"name": "Student",

"fields": [

{"name": "name", "type": "string"},

{"name": "register_number", "type": "int"},

{"name": "subject", "type": "string"},

{"name": "marks", "type": "int"}

]

}

records = [

{"name": "Alice", "register_number": 101, "subject": "Math", "marks": 90},

{"name": "Bob", "register_number": 102, "subject": "Science", "marks": 85},

{"name": "Charlie", "register_number": 103, "subject": "Math", "marks": 95}

]

with open('students.avro', 'wb') as out:

fastavro.writer(out, schema, records)

Pros

- Compact and fast for serialization

- Supports schema evolution

- Ideal for streaming (e.g., Kafka)

Cons

- Not human-readable

- Requires specific libraries

Final Thoughts

- CSV: Simple, portable, but lacks schema.

- SQL: Structured and powerful for querying, but tied to databases.

- JSON: Flexible, widely used in APIs.

- Parquet: Best for analytics, efficient storage.

- XML: Verbose, but still used in legacy systems.

- Avro: Schema-driven, compact, common in streaming and big data pipelines.

Which Format Should You Choose?

| Use Case | Recommended Format |

|---|---|

| Quick prototyping | CSV |

| Relational analytics | SQL |

| API integrations | JSON |

| Big Data analytics | Parquet |

| Enterprise systems | XML |

| Data pipelines/streaming | Avro |

Choosing the right format depends on your system and use case.

Pro Tip: In cloud environments, Parquet and Avro are top picks for efficiency, while CSV and JSON are great for prototyping.

What’s your favorite data format? Got a killer use case? Drop it in the comments! 👇