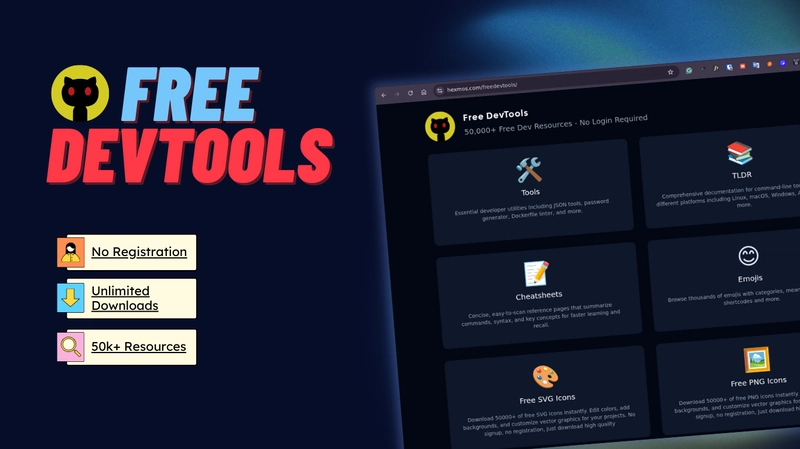

Hello, I’m Maneshwar. I’m currently building FreeDevTools online, *one place for all dev tools, cheat codes, and TLDRs*: a free, open-source hub where developers can quickly find and use tools without the hassle of searching all over the internet.

Before diving into next-sitemap, it’s helpful to recall why sitemaps and robots.txt are valuable:

- A sitemap.xml (or a set of sitemaps) helps search engines (Google, Bing, etc.) discover the URLs on your site and know when pages change (via `<lastmod>`).

- A robots.txt file tells crawlers which paths they should or should not access. It can also point to the sitemap(s).

- For modern frameworks like Next.js, which may have a mix of static pages, dynamic routes, server-side generated pages, etc., manually maintaining sitemap + robots.txt can be error-prone and tedious.

next-sitemap automates this process for Next.js apps, generating sitemaps and robots.txt based on your routes and configuration.

The GitHub README describes it as: “Sitemap generator for next.js. Generate sitemap(s) and robots.txt for all static/pre-rendered/dynamic/server-side pages.”

At a high level, next-sitemap is a utility that:

- Parses your project (static, dynamic, SSR routes) and produces sitemap XML files (splitting if needed, indexing, etc.).

- Optionally creates a robots.txt file that references the sitemap(s) and defines crawl rules (Allow, Disallow).

- Provides hooks/customizations (transform, exclude, additional paths, custom policies).

- Supports server-side dynamic sitemaps in Next.js (via API routes or Next 13 “app directory” routes).

Thus, instead of manually writing and updating your sitemap + robots.txt as your site evolves, you let next-sitemap keep them in sync automatically (or semi-automatically) based on config.

Some key benefits:

- Less manual work / fewer mistakes: You don’t need to hand-craft XML or remember to update robots whenever routes change.

- Consistency: The tool standardizes how your sitemap and robots are built.

- Scalability: It can split large sitemap files, index them, and handle dynamic route generation.

- Flexibility: You can override behavior (exclude some paths, set different priorities, add extra sitemap entries, control crawl policies).

- Next.js integration: Works with both “pages” directory and new “app” directory / Next 13+, and supports server-side generation APIs for dynamic content.

In short: next-sitemap is the bridge between Next.js routes and proper SEO-friendly sitemap & robots files.

Installing and basic setup

Here is a step-by-step for getting started.

1. Install the package

```bash
yarn add next-sitemap
# or
npm install next-sitemap
```

2. Create a config file

At the root of your project, add next-sitemap.config.js (or .ts). A minimal example:

```js
/** @type {import('next-sitemap').IConfig} */
module.exports = {
  siteUrl: process.env.SITE_URL || 'https://example.com',
  generateRobotsTxt: true, // whether to generate robots.txt
  // optionally more options below...
}
```

By default, generateRobotsTxt is false, so if you want a robots.txt, you must enable this.

3. Hook it into your build process

In your package.json:

```json
{
  "scripts": {
    "build": "next build",
    "postbuild": "next-sitemap"
  }
}
```

So after the Next.js build step, the next-sitemap command runs to generate the sitemap(s) and robots.txt.

If you’re using pnpm, due to how postbuild scripts are handled, you might also need a .npmrc file containing:

```ini
enable-pre-post-scripts=true
```

This ensures that the postbuild script runs properly.

4. Output placement & indexing

By default, the generated files go into your public/ folder (or your outDir) so that they are served statically.

next-sitemap v2+ uses an index sitemap by default: instead of one big sitemap.xml listing all URLs, you get a sitemap.xml that references sub-sitemaps such as sitemap-0.xml, sitemap-1.xml, etc. (more than one only when the URLs need to be split).

If you prefer not to use an index sitemap (for simpler / small sites), you can disable index generation:

```js
generateIndexSitemap: false
```
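To make the index idea concrete, here is a minimal TypeScript sketch of what an index sitemap contains. It is not next-sitemap's own code. The `buildSitemapIndex` helper is hypothetical and simply follows the sitemaps.org index format: a `<sitemapindex>` root wrapping one `<sitemap><loc>` per child file.

```typescript
// Hypothetical helper (not part of next-sitemap): builds a sitemap index
// document in the sitemaps.org format from a list of child sitemap URLs.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

function buildSitemapIndex(childSitemaps: string[]): string {
  // One <sitemap> element per child file; URLs are XML-escaped
  const entries = childSitemaps
    .map((loc) => `  <sitemap><loc>${escapeXml(loc)}</loc></sitemap>`)
    .join("\n");
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    entries,
    "</sitemapindex>",
  ].join("\n");
}

// Usage: an index pointing at two split files
const indexXml = buildSitemapIndex([
  "https://example.com/sitemap-0.xml",
  "https://example.com/sitemap-1.xml",
]);
console.log(indexXml);
```

With `generateIndexSitemap: false`, this extra layer goes away and crawlers read the URL list directly.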

Configuration options: customizing lastmod, priority, changefreq, splitting, excludes, etc.

To make your sitemap more useful, next-sitemap offers many configuration options. Here are the common ones and how to use them.

| Option | Purpose | Default | Example / notes |
|---|---|---|---|
| `siteUrl` | Base URL of your site (e.g. `https://yourdomain.com`) | none | Required |
| `changefreq` | Default change frequency for URLs (e.g. `daily`, `weekly`) | `"daily"` | You can also override it per path via the `transform` function |
| `priority` | Default priority for URLs | `0.7` | Can also be overridden per path if needed |
| `sitemapSize` | Max number of URLs in a single sitemap file before splitting | `5000` | Set to e.g. `7000` to allow 7,000 URLs per file ([GitHub][1]) |
| `generateRobotsTxt` | Whether to produce a robots.txt file | `false` | If `true`, robots.txt includes the sitemap(s) and your specified policies |
| `exclude` | List of relative path patterns to omit from the sitemap | `[]` | e.g. `['/secret', '/admin/*']` |
| `alternateRefs` | Alternate URLs for multilingual (hreflang) setups | `[]` | Provide alternate domain/hreflang pairs |
| `transform` | Custom function called for each path to modify or exclude individual entries | none | If `transform(...)` returns `null`, the path is excluded; otherwise return an object that can include `loc`, `changefreq`, `priority`, `lastmod`, `alternateRefs`, etc. ([GitHub][1]) |
| `additionalPaths` | Function returning extra paths to include in the sitemap (beyond detected routes) | none | Useful for pages that route detection misses but that should still be in the sitemap ([GitHub][1]) |
| `robotsTxtOptions` | Customization for robots.txt (`policies`, `additionalSitemaps`, `includeNonIndexSitemaps`) | none | See below for details |
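To picture what a pattern like `'/admin/*'` in `exclude` does, here is a simplified matcher sketch. It is hypothetical and assumes that `*` matches any run of characters (including `/`) and that everything else matches literally. next-sitemap's real matching rules may differ, so check the README before relying on edge cases.

```typescript
// Hypothetical, simplified matcher for exclude-style patterns such as
// '/secret' or '/admin/*'. Assumption: `*` matches any run of characters
// (including '/'); next-sitemap's actual matching may differ.
function patternToRegExp(pattern: string): RegExp {
  const escaped = pattern
    .split("*")
    // escape regex metacharacters in the literal parts
    .map((part) => part.replace(/[.+?^${}()|[\]\\]/g, "\\$&"))
    .join(".*");
  return new RegExp(`^${escaped}$`);
}

function isExcluded(path: string, patterns: string[]): boolean {
  return patterns.some((pattern) => patternToRegExp(pattern).test(path));
}

const exclude = ["/secret", "/admin/*"];
const paths = ["/", "/secret", "/secret/page", "/admin/users", "/blog/hello"];
const kept = paths.filter((p) => !isExcluded(p, exclude));
// kept: ['/', '/secret/page', '/blog/hello']
console.log(kept);
```

Note that a plain `'/secret'` only excludes that exact path. Its children stay in the sitemap unless you also add a wildcard pattern.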

Example full config

Here is an example combining many features:

```js
/** @type {import('next-sitemap').IConfig} */
module.exports = {
  siteUrl: 'https://example.com',
  changefreq: 'weekly',
  priority: 0.8,
  sitemapSize: 7000,
  generateRobotsTxt: true,
  exclude: ['/secret', '/protected/*'],
  alternateRefs: [
    { href: 'https://es.example.com', hreflang: 'es' },
    { href: 'https://fr.example.com', hreflang: 'fr' },
  ],
  transform: async (config, path) => {
    // Exclude admin pages: returning null drops the path from the sitemap
    if (path.startsWith('/admin')) {
      return null
    }

    // For blog posts, boost priority and change frequency
    if (path.startsWith('/blog/')) {
      return {
        loc: path,
        changefreq: 'daily',
        priority: 0.9,
        lastmod: new Date().toISOString(),
        alternateRefs: config.alternateRefs ?? [],
      }
    }

    // Default transformation for every other path
    return {
      loc: path,
      changefreq: config.changefreq,
      priority: config.priority,
      lastmod: config.autoLastmod ? new Date().toISOString() : undefined,
      alternateRefs: config.alternateRefs ?? [],
    }
  },
  additionalPaths: async (config) => {
    // Pages that are not discovered automatically
    return [
      await config.transform(config, '/extra-page'),
      {
        loc: '/promo',
        changefreq: 'monthly',
        priority: 0.6,
        lastmod: new Date().toISOString(),
      },
    ]
  },
  robotsTxtOptions: {
    policies: [
      { userAgent: '*', allow: '/' },
      { userAgent: 'bad-bot', disallow: ['/private', '/user-data'] },
    ],
    additionalSitemaps: ['https://example.com/my-extra-sitemap.xml'],
    includeNonIndexSitemaps: false, // whether to list every sitemap endpoint, not just the index
  },
}
```

That config would produce:

- A sitemap index file (`sitemap.xml`) referencing sub-sitemaps.

- `/blog/*` routes with higher priority and a `daily` changefreq.

- Admin pages excluded entirely.

- A `robots.txt` that includes your custom policies and additional sitemaps.
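Because `transform` is just an async function, you can check its branching outside the build. The sketch below copies the logic from the example config into a standalone function. The `MinimalConfig` and `SitemapEntry` types are simplified stand-ins assumed for this illustration; they are not next-sitemap's real `IConfig` / entry types, and `alternateRefs` is left out for brevity.

```typescript
// Simplified stand-ins for next-sitemap's config and entry types (assumption).
type Changefreq = "always" | "hourly" | "daily" | "weekly" | "monthly" | "yearly" | "never";

interface MinimalConfig {
  changefreq: Changefreq;
  priority: number;
  autoLastmod: boolean;
}

interface SitemapEntry {
  loc: string;
  changefreq: Changefreq;
  priority: number;
  lastmod?: string;
}

// Same branching as the example config: drop /admin, boost /blog/, default otherwise.
async function transform(config: MinimalConfig, path: string): Promise<SitemapEntry | null> {
  if (path.startsWith("/admin")) {
    return null; // null means "leave this path out of the sitemap"
  }
  if (path.startsWith("/blog/")) {
    return { loc: path, changefreq: "daily", priority: 0.9, lastmod: new Date().toISOString() };
  }
  return {
    loc: path,
    changefreq: config.changefreq,
    priority: config.priority,
    lastmod: config.autoLastmod ? new Date().toISOString() : undefined,
  };
}

const config: MinimalConfig = { changefreq: "weekly", priority: 0.8, autoLastmod: true };

async function main(): Promise<(SitemapEntry | null)[]> {
  const results = await Promise.all(
    ["/admin/users", "/blog/first-post", "/about"].map((p) => transform(config, p)),
  );
  console.log(results.map((r) => (r ? `${r.loc} ${r.changefreq} ${r.priority}` : "excluded")));
  return results;
}

const done = main();
```

Keeping the branching in a plain function like this also makes it easy to reuse the same rules in `additionalPaths`.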

Understanding lastmod, priority, changefreq

These three XML elements are often misunderstood. Here’s how they work and how next-sitemap uses them:

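- `lastmod`: when the page was last modified. With `autoLastmod: true` (the default), next-sitemap sets it to the current ISO timestamp for all pages. You can override it per path in `transform` or via `additionalPaths`.

- `priority`: how important a URL is relative to the other URLs on your site, from 0.0 to 1.0 (next-sitemap defaults to 0.7).

- `changefreq`: how often the page is likely to change (e.g. `daily`, `weekly`, `hourly`). It’s a hint, not a binding contract: search engines may ignore it if they see no change.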

In your config, you can set defaults and then override for specific paths.
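Here is a small sketch of how the three elements end up inside a single `<url>` entry. The `renderUrl` helper is hypothetical and is not how next-sitemap builds its XML. The allowed `changefreq` values and the 0.0 to 1.0 priority range come from the sitemaps.org protocol.

```typescript
// Allowed <changefreq> values per the sitemaps.org protocol.
const CHANGEFREQS = ["always", "hourly", "daily", "weekly", "monthly", "yearly", "never"] as const;
type Changefreq = (typeof CHANGEFREQS)[number];

interface UrlEntry {
  loc: string;
  lastmod?: string; // ISO 8601 timestamp
  changefreq?: Changefreq;
  priority?: number; // 0.0 to 1.0
}

const escapeXml = (s: string): string =>
  s.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");

// Hypothetical helper: renders one <url> element and omits fields that are not set.
function renderUrl(entry: UrlEntry): string {
  if (entry.priority !== undefined && (entry.priority < 0 || entry.priority > 1)) {
    throw new RangeError(`priority must be between 0.0 and 1.0, got ${entry.priority}`);
  }
  const parts = [`<loc>${escapeXml(entry.loc)}</loc>`];
  if (entry.lastmod) parts.push(`<lastmod>${entry.lastmod}</lastmod>`);
  if (entry.changefreq) parts.push(`<changefreq>${entry.changefreq}</changefreq>`);
  if (entry.priority !== undefined) parts.push(`<priority>${entry.priority}</priority>`);
  return `<url>${parts.join("")}</url>`;
}

console.log(
  renderUrl({
    loc: "https://example.com/blog/hello",
    lastmod: "2024-01-15T00:00:00.000Z",
    changefreq: "daily",
    priority: 0.9,
  }),
);
```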

Handling large sites: splitting sitemaps & indexing

If your site has many URLs (e.g. 10,000, 100,000), having a single sitemap.xml can be unwieldy. next-sitemap helps via:

- `sitemapSize`: sets the maximum number of URLs per sitemap file. If exceeded, the sitemap is automatically split into multiple files (e.g. `sitemap-0.xml`, `sitemap-1.xml`).

- next-sitemap then creates an index sitemap (`sitemap.xml`) that references all the sub-sitemaps.

- If you don’t want that complexity, you can disable index mode with `generateIndexSitemap: false`.

This keeps each sitemap file manageable and within the sitemap protocol's limits (at most 50,000 URLs and 50 MB uncompressed per file).
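The splitting rule is easy to reason about as plain chunking. The sketch below is a hypothetical illustration, not the library's code. It groups URLs into chunks of at most `sitemapSize` and names them `sitemap-0.xml`, `sitemap-1.xml`, and so on, mirroring the file names next-sitemap produces.

```typescript
// Hypothetical sketch of sitemapSize-based splitting (not next-sitemap's code).
interface SitemapChunk {
  filename: string;
  urls: string[];
}

function splitIntoSitemaps(urls: string[], sitemapSize: number): SitemapChunk[] {
  if (!Number.isInteger(sitemapSize) || sitemapSize <= 0) {
    throw new RangeError("sitemapSize must be a positive integer");
  }
  const chunks: SitemapChunk[] = [];
  // Walk the list in steps of sitemapSize; the last chunk may be smaller
  for (let start = 0; start < urls.length; start += sitemapSize) {
    chunks.push({
      filename: `sitemap-${chunks.length}.xml`,
      urls: urls.slice(start, start + sitemapSize),
    });
  }
  return chunks;
}

// 12,000 URLs with sitemapSize 5000 gives three files: 5000, 5000 and 2000 URLs
const urls = Array.from({ length: 12000 }, (_, i) => `https://example.com/page/${i}`);
const chunks = splitIntoSitemaps(urls, 5000);
console.log(chunks.map((c) => `${c.filename}: ${c.urls.length}`));
```

The index sitemap then only needs the list of chunk file names, which is why it stays small even for very large sites.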

Server-side / dynamic sitemap support (Next.js 13 / app directory)

One challenge: what if your site has many dynamic pages (e.g. blog posts fetched from a CMS) that aren’t known at build time? next-sitemap provides APIs to generate sitemaps at runtime via server routes.

These APIs include:

- `getServerSideSitemapIndex`: generates an index sitemap dynamically (for the app directory in Next.js 13).

- `getServerSideSitemap`: generates a sitemap (a list of `<url>` entries) dynamically.

- Legacy versions for the pages directory are also supported (e.g. `getServerSideSitemapLegacy`).

Example: dynamic index sitemap in app directory

Create a route file like app/server-sitemap-index.xml/route.ts:

```ts
import { getServerSideSitemapIndex } from 'next-sitemap'

export async function GET(request: Request) {
  // fetch your list of sitemap URLs or paths
  const urls = [
    'https://example.com/sitemap-0.xml',
    'https://example.com/sitemap-1.xml',
  ]

  return getServerSideSitemapIndex(urls)
}
```

Then in your next-sitemap.config.js, exclude '/server-sitemap-index.xml' from static generation, and add it to robotsTxtOptions.additionalSitemaps so that the generated robots.txt points to this dynamic index.
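In a real app the list passed to `getServerSideSitemapIndex` is usually computed rather than hard-coded. The sketch below works out how many child sitemaps a dynamic data set needs and lists their URLs. The `childSitemapUrls` helper and the `server-sitemap-<n>.xml` naming are assumptions made for illustration; each child URL would still need its own route handler, which is not shown.

```typescript
// Hypothetical helper: given the number of URLs your data source holds,
// compute how many child sitemaps are needed and list their URLs.
// The `server-sitemap-<n>.xml` naming is an assumption, not a next-sitemap convention.
function childSitemapUrls(siteUrl: string, totalUrls: number, perSitemap: number): string[] {
  if (perSitemap <= 0) throw new RangeError("perSitemap must be positive");
  const count = Math.ceil(totalUrls / perSitemap); // round up so the remainder gets its own file
  const base = siteUrl.replace(/\/+$/, ""); // avoid double slashes
  return Array.from({ length: count }, (_, i) => `${base}/server-sitemap-${i}.xml`);
}

// 12,345 posts at 5,000 URLs per file needs 3 child sitemaps (0, 1, 2)
const indexUrls = childSitemapUrls("https://example.com/", 12345, 5000);
console.log(indexUrls);
```

The resulting array has the same shape as the hard-coded `urls` list in the route above.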

Example: dynamic sitemap generation

Similarly, for an actual sitemap of dynamic URLs:

In app/server-sitemap.xml/route.ts:

```ts
import { getServerSideSitemap } from 'next-sitemap'

export async function GET(request: Request) {
  const fields = [
    {
      loc: 'https://example.com/post/1',
      lastmod: new Date().toISOString(),
      // optionally, changefreq, priority
    },
    {
      loc: 'https://example.com/post/2',
      lastmod: new Date().toISOString(),
    },
    // etc.
  ]

  return getServerSideSitemap(fields)
}
```

Again, exclude '/server-sitemap.xml' in your static config and add it to robotsTxtOptions.additionalSitemaps.

This way, your dynamic content remains discoverable by crawlers even if not known at build time.
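In practice the `fields` array comes from your data source rather than being typed out by hand. The sketch below shows that mapping step, assuming a hypothetical `Post` shape with `slug`, `updatedAt` and `published`. In a real route you would fetch the posts from your CMS and pass the result to `getServerSideSitemap`. The output objects use the same `loc` / `lastmod` fields as the route above.

```typescript
// Hypothetical CMS record; adjust to whatever your data source returns.
interface Post {
  slug: string;
  updatedAt: Date;
  published: boolean;
}

interface SitemapField {
  loc: string;
  lastmod: string;
}

// Turns posts into { loc, lastmod } objects like the ones in the route above.
function postsToSitemapFields(siteUrl: string, posts: Post[]): SitemapField[] {
  const base = siteUrl.replace(/\/+$/, ""); // avoid double slashes
  return posts
    .filter((post) => post.published) // keep drafts out of the sitemap
    .map((post) => ({
      loc: `${base}/post/${encodeURIComponent(post.slug)}`,
      lastmod: post.updatedAt.toISOString(),
    }));
}

// Only the published post produces an entry
const fields = postsToSitemapFields("https://example.com/", [
  { slug: "1", updatedAt: new Date("2024-03-01T12:00:00Z"), published: true },
  { slug: "draft", updatedAt: new Date("2024-03-02T12:00:00Z"), published: false },
]);
console.log(fields);
```

Using the content's real `updatedAt` for `lastmod`, instead of `new Date()` on every request, gives crawlers a more honest change signal.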

robots.txt: policies, inclusion, additional sitemaps

When generateRobotsTxt: true, next-sitemap will generate a robots.txt file for your site (placed under public/). Some details:

- By default, it allows all paths to all user agents (`User-agent: *` / `Allow: /`) unless you override this.

- It includes the `Sitemap: …` line(s), pointing to your index sitemap (or multiple sitemaps) so that crawlers know where to look.

- You can customize it via `robotsTxtOptions`:

  - `policies`: an array of policy objects, e.g. `[ { userAgent: '*', allow: '/' }, { userAgent: 'special-bot', disallow: ['/secret'] } ]`

  - `additionalSitemaps`: if you have other sitemaps not auto-generated by next-sitemap (for example an external sitemap), you can include them here.

  - `includeNonIndexSitemaps`: from version 2.4.x onwards, this toggles whether all sitemap endpoints (not just the index) are listed in robots.txt. Setting it to `false` helps avoid duplicate submissions (index → sub-sitemaps → repeated).

Sample robots.txt output

With a config like:

```js
robotsTxtOptions: {
  policies: [
    { userAgent: '*', allow: '/' },
    { userAgent: 'bad-bot', disallow: ['/private'] },
  ],
  additionalSitemaps: ['https://example.com/my-custom-sitemap.xml'],
}
```

You might get:

```text
# *
User-agent: *
Allow: /

# bad-bot
User-agent: bad-bot
Disallow: /private

# Host
Host: https://example.com

# Sitemaps
Sitemap: https://example.com/sitemap.xml
Sitemap: https://example.com/my-custom-sitemap.xml
```
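To see how the `policies` objects map onto those lines, here is a small renderer sketch. It is not next-sitemap's implementation. `renderRobotsTxt` and its `Policy` type are hypothetical, modelled loosely on `robotsTxtOptions.policies`, and the function only mirrors the layout of the sample output.

```typescript
// Hypothetical policy shape, modelled loosely on robotsTxtOptions.policies.
interface Policy {
  userAgent: string;
  allow?: string | string[];
  disallow?: string | string[];
}

const toArray = (value?: string | string[]): string[] =>
  value === undefined ? [] : Array.isArray(value) ? value : [value];

// Renders one block per policy, then a Host block and a Sitemaps block,
// separated by blank lines, in the same layout as the sample output.
function renderRobotsTxt(siteUrl: string, policies: Policy[], sitemaps: string[]): string {
  const blocks = policies.map((policy) =>
    [
      `# ${policy.userAgent}`,
      `User-agent: ${policy.userAgent}`,
      ...toArray(policy.allow).map((path) => `Allow: ${path}`),
      ...toArray(policy.disallow).map((path) => `Disallow: ${path}`),
    ].join("\n"),
  );
  blocks.push(`# Host\nHost: ${siteUrl}`);
  blocks.push(["# Sitemaps", ...sitemaps.map((url) => `Sitemap: ${url}`)].join("\n"));
  return blocks.join("\n\n") + "\n";
}

const robots = renderRobotsTxt(
  "https://example.com",
  [
    { userAgent: "*", allow: "/" },
    { userAgent: "bad-bot", disallow: ["/private"] },
  ],
  ["https://example.com/sitemap.xml", "https://example.com/my-custom-sitemap.xml"],
);
console.log(robots);
```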

Example end-to-end: sample Next.js / next-sitemap workflow

Putting it all together, here’s a possible workflow:

1. Install `next-sitemap` in your Next.js project.

2. Create `next-sitemap.config.js` with your settings and customize as needed (site URL, policies, exclusions, transforms).

3. Add `"postbuild": "next-sitemap"` to your build scripts so sitemaps and robots.txt are generated after building.

4. If you have dynamic content not known at build time, add server-side routes using `getServerSideSitemap` / `getServerSideSitemapIndex`.

5. Exclude those dynamic sitemap paths from static generation in your config, and include them in `robotsTxtOptions.additionalSitemaps`.

6. Deploy your site. The generated `sitemap.xml`, `sitemap-0.xml`, etc., and `robots.txt` are publicly accessible (e.g. `https://yourdomain.com/robots.txt`).

7. Submit your `sitemap.xml` (or index) to Google Search Console / Bing Webmaster Tools so search engines know where to crawl from.
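Before submitting, it can help to confirm that the deployed robots.txt lists the sitemaps you expect, including any server-side ones from step 5. The sketch below only parses robots.txt text. `extractSitemapUrls` and `missingSitemaps` are hypothetical helpers. In practice you would feed them the body returned by `fetch('https://yourdomain.com/robots.txt')`; Node 18+ ships a global `fetch`.

```typescript
// Hypothetical helper: collect the URLs from `Sitemap:` lines in a robots.txt body.
// The directive name is matched case-insensitively and `#` comments are stripped.
function extractSitemapUrls(robotsTxt: string): string[] {
  return robotsTxt
    .split(/\r?\n/)
    .map((line) => line.replace(/#.*$/, "").trim())
    .filter((line) => /^sitemap\s*:/i.test(line))
    .map((line) => line.slice(line.indexOf(":") + 1).trim()) // value after the directive's colon
    .filter((url) => url.length > 0);
}

// Returns the expected sitemap URLs that robots.txt does not mention.
function missingSitemaps(robotsTxt: string, expected: string[]): string[] {
  const listed = new Set(extractSitemapUrls(robotsTxt));
  return expected.filter((url) => !listed.has(url));
}

const sample = [
  "# *",
  "User-agent: *",
  "Allow: /",
  "",
  "# Sitemaps",
  "Sitemap: https://example.com/sitemap.xml",
].join("\n");

console.log(extractSitemapUrls(sample)); // [ 'https://example.com/sitemap.xml' ]
console.log(missingSitemaps(sample, ["https://example.com/sitemap.xml", "https://example.com/server-sitemap.xml"]));
```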

I’ve been building FreeDevTools.

A collection of UI/UX-focused tools crafted to simplify workflows, save time, and reduce friction in searching tools/materials.

Any feedback or contributors are welcome!

It’s online, open-source, and ready for anyone to use.

👉 Check it out: FreeDevTools

⭐ Star it on GitHub: freedevtools