Introduction

Taking notes during meetings is one of those universal pain points. Most teams either assign someone to jot down minutes, or rely on cloud-based AI note takers like Otter, Fireflies, or Jamie. But these come with trade-offs:

- Your data lives on someone else’s servers.

- Subscription costs scale with team size.

- Integrations can break or require permissions you’d rather not grant.

Meetily was built to solve this problem differently: a self-hosted, open-source desktop app that records, transcribes, and summarizes your meetings completely offline. With the release of v0.0.5, Meetily has taken a big step forward in stability, deployment, and local AI model support.

What Is Meetily?

Meetily is an AI-powered meeting companion that you run locally on your own machine. Unlike SaaS tools that join your calls as bots or plugins, Meetily:

- Captures audio directly from your system output and microphone.

- Works with any platform: Zoom, Teams, Google Meet, Discord, or even in-person recordings.

- No integrations required — no bots, browser extensions, or special permissions.

- Cross-platform: runs natively on Windows and macOS, or in Docker (with Linux support on the way).

In other words, Meetily is a standalone desktop app. Once installed, it just works — regardless of which conferencing software your team prefers.

Whisper for Local Transcription

At the heart of Meetily is Whisper, the speech recognition model from OpenAI. Instead of sending audio to a server for transcription, Meetily runs Whisper locally, turning your audio into accurate text right on your machine.

Benefits of local Whisper:

- Privacy-first: no audio leaves your computer.

- Faster feedback: transcription happens in real time or shortly after your call.

- Cost savings: no API usage fees.

This makes Meetily especially useful for professionals working with sensitive information — consulting, law, healthcare, or research.

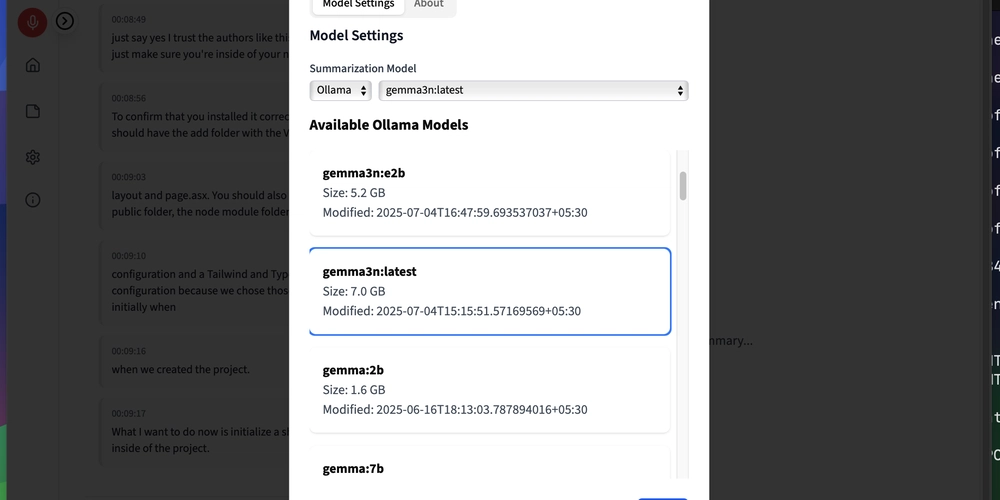

Ollama Summaries: Your Own LLM on Your Machine

Once Whisper has transcribed the conversation, Meetily passes the text to your local Ollama instance for summarization. Ollama acts as a lightweight, local LLM runtime that lets you run advanced models with a simple command.

Supported Models

Meetily works seamlessly with Ollama-supported models, including:

- Gemma 3n — strong summarization capabilities with good performance.

- LLaMA (Meta) — popular general-purpose models.

- Mistral — efficient, lightweight models well-suited for fast local inference.

Because everything runs through Ollama:

- No API keys required (unless you want to connect external providers).

- Everything is local: your transcripts and summaries never leave your machine.

- Customizable: choose the model that fits your hardware and use case.
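The transcript-to-summary hand-off described above can be tried by hand with the Ollama CLI. The sketch below is an illustration, not Meetily's internal pipeline: the function names, the prompt wording, and the transcript file are assumptions, and only `ollama run MODEL PROMPT` is the real CLI call.

```shell
#!/bin/sh
# Hypothetical helpers for summarizing a transcript with a local Ollama model.
# Not Meetily's actual code; the prompt text is an assumption for illustration.

# Print a summarization prompt followed by the contents of the transcript file in $1.
build_summary_prompt() {
  printf 'Summarize this meeting transcript into key points and action items.\n\n'
  cat "$1"
}

# Send the prompt to a local model ($2, defaulting to mistral) via the Ollama CLI.
summarize_transcript() {
  ollama run "${2:-mistral}" "$(build_summary_prompt "$1")"
}
```

For example, `summarize_transcript transcript.txt gemma:latest` would print a summary to the terminal, provided the model has already been pulled.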

What’s New in v0.0.5

This release focuses on making Meetily easier to deploy and faster to use:

- Stable Docker support — run Meetily on x86_64 and ARM64 environments reliably.

- Native installers — Windows and macOS builds (with Homebrew tap support for Mac users).

- Streamlined setup — simplified dependency management and installation.

- Backend optimizations — faster Whisper transcription and smoother Ollama summarization pipelines.

These updates make Meetily friendlier for both developers and end users.

Installation Guide (Aligned with Release Notes)

macOS — Install via Homebrew (Recommended)

brew tap zackriya-solutions/meetily

brew install --cask meetily

- Launch Meetily from Applications or Spotlight.

- On first run, grant microphone and screen recording access.

- Start the backend server if required:

meetily-server --language en --model medium

- Upgrading from v0.0.4? Run:

brew update

brew upgrade --cask meetily

brew upgrade meetily-backend

Data is automatically migrated to /opt/homebrew/var/meetily/meeting_minutes.db.
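Because the migrated data is a single SQLite file, it can be inspected with standard tools. A minimal sketch, assuming the `sqlite3` CLI is installed; the function name is hypothetical, and since the table layout is not documented here, the example only lists tables instead of querying specific columns.

```shell
#!/bin/sh
# Hypothetical helper: list the tables in Meetily's SQLite database without modifying it.
# The default path is the migration target named above; the schema itself is not assumed.
list_meetily_tables() {
  db="${1:-/opt/homebrew/var/meetily/meeting_minutes.db}"
  if [ ! -f "$db" ]; then
    echo "database not found: $db"
    return 1
  fi
  # -readonly opens the file read-only so the inspection cannot alter meeting data.
  sqlite3 -readonly "$db" ".tables"
}
```

Copying the file to another location before an upgrade is a simple way to keep a backup.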

Windows — Installer

Step 1: Install the Frontend Application

Download the installer:

- Go to Latest Releases

- Look for and download the file ending with x64-setup.exe (e.g., meetily-frontend_0.0.5_x64-setup.exe)

Prepare the installer:

- Navigate to your Downloads folder

- Right-click the downloaded .exe file → Properties

- At the bottom, check the Unblock checkbox → Click OK

- This prevents Windows security warnings during installation

Run the installation:

- Double-click the installer to launch it

- If Windows shows a security warning, click More info → Run anyway, or follow any permission dialog prompts

- Follow the installation wizard steps

- The app will be installed and available on your desktop/Start menu

Step 2: Install and Start the Backend

Download the backend:

- From the same releases page, download the backend zip file (e.g., meetily_backend.zip)

- Extract the zip to a folder like C:\meetily_backend\

Prepare backend files:

- Open PowerShell (search for it in Start menu)

- Navigate to your extracted backend folder:

cd C:\meetily_backend

- Start the backend services:

.\start_with_output.ps1

This script will:

- Guide you through Whisper model selection (recommended: base or medium)

- Ask for language preference (default: English)

- Download the selected model automatically

- Start both Whisper server (port 8178) and Meeting app (port 5167)

What happens during startup:

- Model Selection: Choose from tiny (fastest, basic accuracy) to large (slowest, best accuracy)

- Language Setup: Select your preferred language for transcription

- Auto-download: Selected models are downloaded automatically (~150MB to 1.5GB depending on model)

- Service Launch: Both transcription and meeting services start automatically

✅ Success Verification:

- Check that both services are running: the Whisper server on port 8178 and the Meeting app on port 5167

Test the application:

- Launch Meetily from desktop/Start menu

- Grant microphone permissions when prompted

- You should see the main interface ready to record meetings

Docker — Cross-Platform

Docker suits server environments and anyone who prefers containers.

Best for: Developers, testing, or when you want automatic dependency management

Performance Note: 20-30% slower than native installation

Quick Start (5-10 minutes)

# Clone repository

git clone https://github.com/Zackriya-Solutions/meeting-minutes

cd meeting-minutes/backend

# Windows (PowerShell)

.\build-docker.ps1 cpu

.\run-docker.ps1 start -Interactive

# macOS/Linux (Bash)

./build-docker.sh cpu

./run-docker.sh start --interactive

What you get:

- Automatic dependency management

- No need to install Python, CMake, or build tools

- Consistent environment across all platforms

- Interactive model selection

- Both services running: http://localhost:8178 and http://localhost:5167
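A quick way to confirm that both services came up is to probe the two URLs listed above. The sketch below uses `curl`; the function name `check_service` is hypothetical, and it only tests that something answers HTTP at each URL, not that the service behind it is healthy.

```shell
#!/bin/sh
# Hypothetical helper: report whether anything answers HTTP at the given URL.
check_service() {
  name="$1"
  url="$2"
  # Without --fail, curl exits 0 on any HTTP response and non-zero if it cannot connect.
  # --noproxy '*' keeps requests to local services from being routed through a proxy.
  if curl --silent --output /dev/null --max-time 3 --noproxy '*' "$url"; then
    echo "$name: reachable at $url"
  else
    echo "$name: not reachable at $url"
    return 1
  fi
}
```

Running `check_service "Whisper server" http://localhost:8178` and `check_service "Meeting app" http://localhost:5167` should each print a "reachable" line once the containers are up.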

Configuring Ollama

- Install Ollama: https://ollama.com/

- Pull models:

ollama pull gemma:latest

ollama pull llama3.2:latest

ollama pull mistral:latest

- In Meetily → Settings → LLM Provider, set:

- Provider: Ollama (local)

- Base URL: http://localhost:11434

- Model: e.g. gemma:latest
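Before pointing Meetily at Ollama, it can help to confirm that the chosen model is actually installed. A minimal sketch, assuming the tabular output of `ollama list` (a header row followed by one row per model, with the model name in the first column); the function name `model_installed` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `ollama list` output on stdin and succeed if model $1 is listed.
model_installed() {
  # Skip the header row (NR > 1) and compare the first column against the requested name.
  awk -v model="$1" 'NR > 1 && $1 == model { found = 1 } END { exit !found }'
}
```

For example, `ollama list | model_installed gemma:latest && echo "model ready"`. The same model list is also served over HTTP by Ollama at `/api/tags` under the base URL.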

Getting Started

- Launch Meetily and select System Audio + Microphone.

- Start recording → Whisper transcribes locally.

- At the end of the meeting, Ollama summarizes the transcript into action items and key notes.

- Review meeting history, stored locally on your machine.

Community & Support

Meetily is built in the open, and we welcome feedback, contributions, and bug reports.

Conclusion

With Whisper for transcription and Ollama for local LLM summaries, Meetily offers a fast, private, and fully self-hosted alternative to cloud meeting assistants. Version 0.0.5 brings Docker stability, native installers, and smoother pipelines — making it easier than ever to run AI meeting notes entirely on your own hardware.

If you’ve been waiting for a way to keep your meeting data private, flexible, and under your control, now’s the time to give Meetily a try.