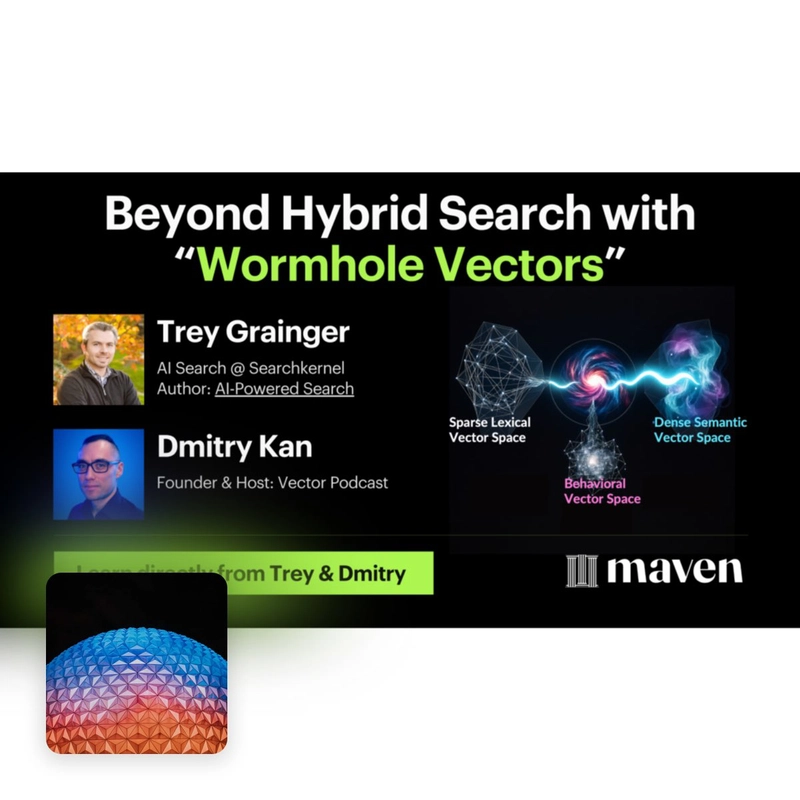

Vector Podcast episode: educational bit

Two weeks ago I had the pleasure of co-hosting a lightning session with Trey Grainger on a novel idea in vector search called “Wormhole vectors”.

The approach stands in contrast to hybrid search.

In hybrid search, you convert the same input query into different representations (for example, keywords and embeddings), run independent queries, and then combine the results.
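The post does not say how the independent result lists are combined. One common choice is Reciprocal Rank Fusion (RRF), shown below as a minimal Python sketch. It assumes each query returns a ranked list of document IDs; the document IDs are hypothetical, and `k=60` is the constant commonly used with RRF.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def reciprocal_rank_fusion(rankings: Sequence[List[str]], k: int = 60) -> List[str]:
    """Fuse several ranked lists of doc IDs with Reciprocal Rank Fusion.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with ranks starting at 1.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results of a lexical query and a dense query for the same input.
lexical_hits = ["doc3", "doc1", "doc7"]
dense_hits = ["doc1", "doc5", "doc3"]
print(reciprocal_rank_fusion([lexical_hits, dense_hits]))
```

Note that each list is produced independently and only the ranks are merged at the end. Wormhole vectors, by contrast, use the results of one space to build the query for the next.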

Wormhole vectors open up a new way to move between vector spaces of different kinds:

- Query in the current vector space

- Find a relevant document set

- Derive a “wormhole” vector to a corresponding region of another vector space

- Traverse to the other vector space by querying it with that wormhole vector

- Repeat as desired across multiple traversals
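The steps above can be sketched as a simple loop. This is a minimal Python sketch, not the implementation from the session: each space is modelled as a hypothetical search function, and each hop as a hypothetical `to_wormhole` function that turns the current result set into a query for the next space. The toy dictionaries and the "most frequent label" wormhole are illustrative stand-ins for real lexical/dense indexes and for embedding pooling.

```python
from collections import Counter
from typing import Callable, Dict, List, Sequence, Tuple

SearchFn = Callable[[str], List[str]]       # query -> doc IDs in one space
WormholeFn = Callable[[List[str]], str]     # doc IDs -> query for next space


def wormhole_traverse(query: str, start_search: SearchFn,
                      hops: Sequence[Tuple[WormholeFn, SearchFn]],
                      top_k: int = 10) -> List[List[str]]:
    """Query one space, then hop through further spaces.

    Each hop is a (to_wormhole, target_search) pair: to_wormhole derives a
    query for the target space from the current results, and target_search
    runs it there. Returns the result list produced at every step.
    """
    results = start_search(query)[:top_k]
    trail = [results]
    for to_wormhole, target_search in hops:
        wormhole_query = to_wormhole(results)
        results = target_search(wormhole_query)[:top_k]
        trail.append(results)
    return trail


def most_common_label(label_of: Dict[str, str]) -> WormholeFn:
    """Hypothetical toy wormhole: summarize results by their most frequent label."""
    def to_wormhole(doc_ids: List[str]) -> str:
        counts = Counter(label_of[d] for d in doc_ids if d in label_of)
        return counts.most_common(1)[0][0] if counts else ""
    return to_wormhole


# Hypothetical toy indexes standing in for a lexical and a dense space.
keyword_index = {"jacket": ["d1", "d2"], "coat": ["d2", "d3"]}
topic_index = {"outerwear": ["d2", "d4", "d1"], "winter": ["d3", "d5"]}
topic_of = {"d1": "outerwear", "d2": "outerwear", "d3": "winter"}
keyword_of = {"d1": "jacket", "d2": "coat", "d4": "parka"}

trail = wormhole_traverse(
    "jacket",
    start_search=lambda q: keyword_index.get(q, []),
    hops=[
        (most_common_label(topic_of), lambda q: topic_index.get(q, [])),   # lexical -> "dense"
        (most_common_label(keyword_of), lambda q: keyword_index.get(q, [])),  # and back
    ],
)
print(trail)
```

Keeping the hops as a list of (derive, search) pairs means the number and order of traversals is just data, which matches the "repeat as desired" step.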

More specifically, if you start from a sparse space, you can take the set of returned documents, pool their embeddings into a single embedding, and use it as a “wormhole” into the dense vector space.
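A minimal Python sketch of this sparse-to-dense hop follows. It assumes mean pooling (the post only says "pool") and uses a brute-force cosine search in place of a real approximate nearest-neighbour index; the 3-dimensional embeddings and document IDs are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def mean_pool(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Average a set of embeddings element-wise into one vector."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def dense_knn(query_vec: Sequence[float], doc_vectors: Dict[str, List[float]],
              k: int = 3) -> List[str]:
    """Brute-force nearest neighbours by cosine similarity."""
    ranked = sorted(doc_vectors, key=lambda d: -cosine(query_vec, doc_vectors[d]))
    return ranked[:k]


# Hypothetical dense embeddings for every document in the collection.
embeddings = {
    "d1": [0.9, 0.1, 0.0],
    "d2": [0.8, 0.3, 0.1],
    "d3": [0.1, 0.9, 0.2],
    "d4": [0.85, 0.2, 0.05],
}
lexical_hits = ["d1", "d2"]  # IDs returned by a (hypothetical) keyword query
wormhole = mean_pool([embeddings[d] for d in lexical_hits])
print(dense_knn(wormhole, embeddings, k=3))
```

Here `d4` never matched the keyword query, yet it is the closest document to the pooled vector, which is the kind of result the hop into the dense space is meant to surface.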

Conversely, if your input is a set of documents (embeddings) returned by a vector search, you can traverse the Semantic Knowledge Graph (SKG) to derive the sparse lexical query that best represents those documents.
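A simplified Python sketch of the dense-to-sparse direction follows. It treats the vector-search hits as a foreground set and scores each of their terms with a z-score of foreground occurrence against the whole corpus (the background), in the spirit of SKG relatedness. Solr exposes SKG scoring through the `relatedness()` aggregation of its JSON Facet API, which normalizes scores differently, so this is not its exact output. The corpus, the hit IDs, and the boosted Lucene-style query format are illustrative assumptions.

```python
import math
from typing import Dict, List, Set, Tuple


def skg_terms(foreground: List[str], docs: Dict[str, Set[str]],
              top_n: int = 3) -> List[Tuple[str, float]]:
    """Rank terms by how over-represented they are in the foreground docs.

    z = (fg_count - n_fg * p_bg) / sqrt(n_fg * p_bg * (1 - p_bg)),
    where fg_count is how many foreground docs contain the term, n_fg is the
    foreground size and p_bg is the fraction of all docs containing the term.
    """
    n_fg = len(foreground)
    vocab = set().union(*(docs[d] for d in foreground))
    scores = {}
    for term in vocab:
        fg_count = sum(term in docs[d] for d in foreground)
        p_bg = sum(term in terms for terms in docs.values()) / len(docs)
        if p_bg >= 1.0:  # a term in every doc carries no signal
            continue
        sd = math.sqrt(n_fg * p_bg * (1 - p_bg))
        scores[term] = (fg_count - n_fg * p_bg) / sd
    # Highest score first; ties broken alphabetically for determinism.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:top_n]


def to_lexical_query(scored_terms: List[Tuple[str, float]]) -> str:
    """Render positively related terms as a boosted Lucene-style query."""
    return " ".join(f"{t}^{s:.2f}" for t, s in scored_terms if s > 0)


# Hypothetical corpus: doc ID -> set of terms in the doc.
docs = {
    "d1": {"wormhole", "vector", "search"},
    "d2": {"wormhole", "vector", "hybrid"},
    "d3": {"keyword", "search"},
    "d4": {"keyword", "search", "hybrid"},
    "d5": {"search", "ranking"},
}
dense_hits = ["d1", "d2"]  # hypothetical IDs returned by a vector search
terms = skg_terms(dense_hits, docs)
print(to_lexical_query(terms))
```

"search" appears in one of the two hits but in most of the corpus, so it gets a negative score and is left out, while "wormhole" and "vector" are boosted because they are concentrated in the hits.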

Recording on YouTube:

What you’ll learn:

- What are “Wormhole Vectors”?

Learn how wormhole vectors work & how to use them to traverse between disparate vector spaces for better hybrid search.

- Building a behavioral vector space from click stream data

Learn to generate behavioral embeddings to be integrated with dense/semantic and sparse/lexical vector queries.

- Traverse lexical, semantic, & behavioral vector spaces

Jump back and forth between multiple dense and sparse vector spaces in the same query.

- Advanced hybrid search techniques (beyond fusion algorithms)

Hybrid search is more than mixing lexical + semantic search. See advanced techniques and where wormhole vectors fit in.
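The post does not describe how the episode builds its behavioral vector space. One simple way to get behavioral vectors from clickstream data is to represent each document by the users who clicked it. The minimal Python sketch below assumes a click log of `(user_id, doc_id)` pairs (hypothetical data) and L2-normalizes the count vectors so that a dot product equals cosine similarity. Production systems commonly compress such co-click signals into dense embeddings, for example with matrix factorization; this is not necessarily the method covered in the session.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def behavioral_vectors(clicks: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, float]]:
    """Turn (user_id, doc_id) click events into sparse, unit-length doc vectors.

    Each document's dimensions are the users who clicked it, weighted by
    how many times they clicked.
    """
    counts: Dict[str, Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    for user, doc in clicks:
        counts[doc][user] += 1.0
    vectors = {}
    for doc, users in counts.items():
        norm = math.sqrt(sum(c * c for c in users.values()))
        vectors[doc] = {u: c / norm for u, c in users.items()}
    return vectors


def similar_docs(doc: str, vectors: Dict[str, Dict[str, float]], k: int = 2) -> List[str]:
    """Rank other documents by cosine similarity (dot product of unit vectors)."""
    q = vectors[doc]

    def sim(other: str) -> float:
        return sum(w * vectors[other].get(u, 0.0) for u, w in q.items())

    others = [d for d in vectors if d != doc]
    return sorted(others, key=lambda d: (-sim(d), d))[:k]


# Hypothetical click log: (user_id, doc_id)
clicks = [
    ("u1", "tv"), ("u1", "soundbar"), ("u2", "tv"), ("u2", "soundbar"),
    ("u2", "hdmi_cable"), ("u3", "blender"), ("u3", "toaster"), ("u1", "tv"),
]
vectors = behavioral_vectors(clicks)
print(similar_docs("tv", vectors))
```

Because these vectors are built from user behavior rather than text, "tv" and "soundbar" end up close even though they share no keywords, which is what makes a behavioral space a useful third destination for wormhole traversals.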

Find this episode on many popular platforms:

Spotify: https://open.spotify.com/episode/3fvfbAGQREqCJeUciyRb3r

Apple Podcasts: https://podcasts.apple.com/fi/podcast/trey-grainger-wormhole-vectors/id1587568733?i=1000735675696