Over the last year, you’ve probably seen a wave of AI tools promising to make code review faster. Platforms like CodeRabbit, CodeAnt, and even GitHub Copilot now step in to provide automated review comments when you open a pull request or merge request.

The workflow is familiar:

- A developer submits a change.
- The team wants to merge it into main/master.
- Traditionally, a senior engineer had to go through each line of code, highlight every issue, and leave detailed comments.

That process works — but it comes with hidden costs. Senior reviewers spend valuable time pointing out mundane issues (styling violations, misplaced braces, redundant loops), while the actual developer waits hours or even days for the first round of feedback.

This is exactly where AI code review shines. By providing a first-pass review, it:

- Gives developers instant feedback without waiting on a human reviewer.
- Lets senior engineers focus on high-level design, not repetitive nitpicks.
- Catches a wide spectrum of issues: bugs, security gaps, styling violations, poor organization, and even weak data structure choices.

The end result?

- Improved velocity — teams move faster without sacrificing quality.
- Reduced outages and regressions — problems are caught before they hit production.
- Happier engineers — both the reviewee and the reviewer benefit from a smoother feedback loop.

In short, AI code review has gone from “nice-to-have” to “essential” in modern software development.

The Hidden Cost of Cloud AI Review Tools

There’s no denying the benefits of AI code review — but most of today’s solutions come with a major trade-off: your source code has to leave your infrastructure.

Services like GitHub Copilot, CodeRabbit, or CodeAnt process your pull requests by sending the code to their cloud. That means the most valuable asset your startup owns, your proprietary source code, is continuously uploaded to servers you don’t control.

For startups, this creates four big risks:

- IP leakage → Your competitive edge is your codebase. Handing it over to an external service introduces the risk of leaks, either accidental or malicious.
- Data compliance headaches → Regulations and audit frameworks like GDPR and SOC 2 demand strict control over where your data lives and who can access it. Relying on third-party servers complicates compliance.
- Vendor lock-in → VC-backed vendors have to chase revenue. Once you’re dependent on their tool, you’re at the mercy of their pricing model.
- Aggressive upselling → Many cloud services start cheap, but costs can balloon quickly. For a small team, that’s not sustainable.

So while cloud-based AI review tools look appealing at first, the hidden costs pile up. And for an early-stage startup trying to protect IP, control costs, and stay nimble — those costs can outweigh the benefits.

👉 This is where self-hosted AI code review becomes a serious alternative.

A Better Way: Secure, Self-Hosted AI Code Review with Ollama

Instead of sending your code to someone else’s cloud, you can run AI reviews on your own infrastructure with Ollama.

Ollama is an open-source tool that makes it simple to run and serve AI models locally. That means all the benefits of AI code review — without giving up control over your codebase.

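Even better, the requirements are minimal: a single modest cloud server, like the DigitalOcean droplet used in the setup guide below, is enough. For startups, the advantages are huge: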

- Keep code private → Your code never leaves your infrastructure.
- Affordable → No per-seat licensing or SaaS markup. Just pay for your own server.
- Flexible → Works seamlessly with GitHub, GitLab, and Bitbucket — whether you use the cloud versions or self-hosted instances.

When paired with LiveReview, Ollama integrates directly into your workflow. Every pull request or merge request automatically triggers a review, giving your team instant, secure, first-pass feedback — without relying on any external vendor.

In other words, you get the speed of AI code review and the peace of mind of self-hosting.

How To: Deploying Secure AI Code Review in Your Workflow

Part 1: Set Up the Ollama API with the mistral:7b Model

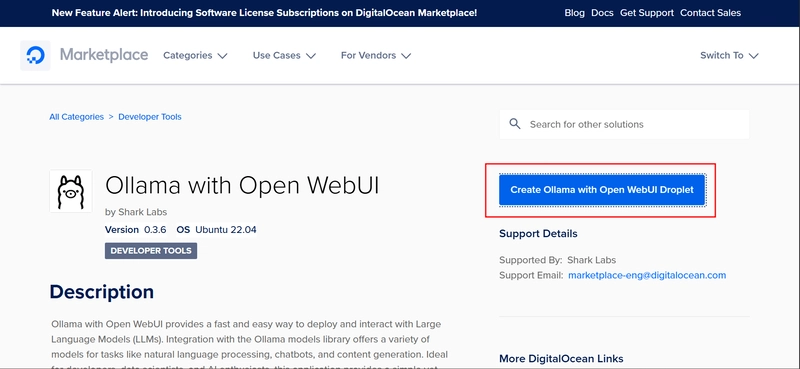

Start from the “Ollama with Open WebUI” 1-Click App on the DigitalOcean Marketplace:

- Pick a region.
- Pick 8 CPUs if possible; if not, pick 4 or 2 CPUs.
- Reserve an IP.
- Get access.
- Open the chat UI.
- Download the mistral:7b model.
- Select the model.
- Test it once.
- Go to Settings.
- Copy your JWT.

Now try something like this:

```bash
curl -X GET "http://<your-droplet-ip>/ollama/api/tags" \
  -H "Authorization: Bearer <your-jwt>"
```

I get something like:

```json
{
  "models": [
    {
      "name": "mistral:7b",
      "model": "mistral:7b",
      "modified_at": "2025-08-15T05:40:32.387804603Z",
      "size": 4372824384,
      "digest": "6577803aa9a036369e481d648a2baebb381ebc6e897f2bb9a766a2aa7bfbc1cf",
      "details": {
        "parent_model": "",
        "format": "gguf",
        "family": "llama",
        "families": ["llama"],
        "parameter_size": "7.2B",
        "quantization_level": "Q4_K_M"
      },
      "urls": [0]
    },
    {
      "name": "tinyllama:latest",
      "model": "tinyllama:latest",
      "modified_at": "2024-08-26T18:30:10.907600469Z",
      "size": 637700138,
      "digest": "2644915ede352ea7bdfaff0bfac0be74c719d5d5202acb63a6fb095b52f394a4",
      "details": {
        "parent_model": "",
        "format": "gguf",
        "family": "llama",
        "families": ["llama"],
        "parameter_size": "1B",
        "quantization_level": "Q4_0"
      },
      "urls": [0]
    }
  ]
}
```
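If you would rather script this check, here is a minimal Python sketch using only the standard library. It assumes the same Open WebUI proxy path (`/ollama/api/tags`) and Bearer JWT as the curl call above; the environment variable names `OLLAMA_BASE_URL` and `OLLAMA_JWT` and the helper names are hypothetical choices for this example, not something Ollama or Open WebUI defines.

```python
import json
import os
import urllib.request


def fetch_tags(base_url: str, token: str) -> dict:
    """GET <base_url>/ollama/api/tags through the Open WebUI proxy, authenticating with the JWT."""
    req = urllib.request.Request(
        f"{base_url.rstrip('/')}/ollama/api/tags",
        headers={"Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def model_names(tags: dict) -> list[str]:
    """Extract the model names from an /api/tags response body."""
    return [m["name"] for m in tags.get("models", [])]


def has_model(tags: dict, wanted: str = "mistral:7b") -> bool:
    """True if the wanted model has already been downloaded on the server."""
    return wanted in model_names(tags)


if __name__ == "__main__":
    # Hypothetical variable names: your droplet URL (e.g. http://<your-droplet-ip>) and the JWT from Settings.
    base, jwt = os.environ.get("OLLAMA_BASE_URL"), os.environ.get("OLLAMA_JWT")
    if base and jwt:
        tags = fetch_tags(base, jwt)
        print(model_names(tags))
        print("mistral:7b available:", has_model(tags))
    else:
        print("Set OLLAMA_BASE_URL and OLLAMA_JWT to query your server.")
```

Against the response shown above, `model_names` would return `["mistral:7b", "tinyllama:latest"]`.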

OK, now try to get a response from the mistral:7b model:

```bash
curl -X POST "http://<your-droplet-ip>/ollama/api/generate" \
  -H "Authorization: Bearer <your-jwt>" \
  -H "Content-Type: application/json" \
  -d '{ "model": "mistral:7b", "prompt": "Explain quantum computing in simple terms.", "stream": false }'
```
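The same request from Python, as a sketch: with `"stream": false`, Ollama returns a single JSON object whose `response` field holds the generated text. The helper names and the `OLLAMA_BASE_URL` / `OLLAMA_JWT` environment variables are hypothetical; the path and headers mirror the curl call.

```python
import json
import os
import urllib.request


def build_generate_request(base_url: str, token: str, prompt: str,
                           model: str = "mistral:7b") -> urllib.request.Request:
    """Build a non-streaming POST to /ollama/api/generate (the Open WebUI proxy path used above)."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/ollama/api/generate",
        data=body,
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def generate(base_url: str, token: str, prompt: str, model: str = "mistral:7b") -> str:
    """Send the request and return the generated text from the 'response' field."""
    # A 7B model on a CPU-only droplet can take a while to answer, hence the generous timeout.
    req = build_generate_request(base_url, token, prompt, model)
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    base, jwt = os.environ.get("OLLAMA_BASE_URL"), os.environ.get("OLLAMA_JWT")
    if base and jwt:
        print(generate(base, jwt, "Explain quantum computing in simple terms."))
    else:
        print("Set OLLAMA_BASE_URL and OLLAMA_JWT (hypothetical names) to try this against your server.")
```

Keeping request construction separate from sending makes it easy to inspect exactly what goes over the wire before pointing it at a real server.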

Part 2: Connect LiveReview and Trigger a Review

Join the LiveReview waitlist; our team will give your team early access within a day.

Once you have access to LiveReview:

Go to “Git Providers” and select your code hosting platform.

Create a personal access token (PAT) for a dedicated user and connect.
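It can be worth confirming that the PAT really authenticates as the dedicated user before connecting it. A minimal sketch for GitHub and GitLab, using their "current user" REST endpoints (`GET /user` on GitHub, `GET /api/v4/user` on GitLab with the token in a `PRIVATE-TOKEN` header). The helper names are hypothetical, `gitlab.example.com` stands in for a self-hosted instance, and Bitbucket is not covered here.

```python
import json
import urllib.request


def whoami_request(provider: str, token: str, base_url: str = "") -> urllib.request.Request:
    """Build a 'who am I' request for a PAT. Hypothetical helper covering GitHub and GitLab only."""
    if provider == "github":
        # GitHub REST API: GET /user returns the authenticated user.
        # For GitHub Enterprise Server, pass base_url="https://<host>/api/v3".
        url = (base_url or "https://api.github.com").rstrip("/") + "/user"
        headers = {"Authorization": f"Bearer {token}", "Accept": "application/vnd.github+json"}
    elif provider == "gitlab":
        # GitLab REST API: GET /api/v4/user; personal access tokens go in the PRIVATE-TOKEN header.
        url = (base_url or "https://gitlab.com").rstrip("/") + "/api/v4/user"
        headers = {"PRIVATE-TOKEN": token}
    else:
        raise ValueError(f"unsupported provider: {provider}")
    return urllib.request.Request(url, headers=headers)


def whoami(provider: str, token: str, base_url: str = "") -> str:
    """Return the account name the PAT belongs to (GitHub 'login', GitLab 'username')."""
    with urllib.request.urlopen(whoami_request(provider, token, base_url), timeout=30) as resp:
        user = json.load(resp)
    return user["login"] if provider == "github" else user["username"]
```

If `whoami("gitlab", token, "https://gitlab.example.com")` returns your own account instead of the dedicated user, the PAT was created under the wrong user.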

Now go to “AI Providers” and pick Ollama.

Fill in the connection details for your Ollama server.

Trigger a new review.

LiveReview then posts a summary and issue comments in your MR thread.
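LiveReview handles fetching the diff, prompting the model, and posting comments for you. Purely to illustrate what a first-pass review against a self-hosted model involves, here is a hypothetical sketch: it wraps a unified diff in a prompt, sends it to the same `/ollama/api/generate` endpoint used in Part 1, and parses issue lines out of the reply. The prompt wording, the `ISSUE:` line format, and all helper names are invented for this example; this is not LiveReview's implementation.

```python
import json
import urllib.request

# Hypothetical prompt: a summary plus one machine-parseable line per issue.
REVIEW_PROMPT = (
    "You are reviewing a merge request. Read the unified diff below. "
    "First write a short summary. Then list each issue on its own line as\n"
    "ISSUE: <file>:<line>: <comment>\n\n"
    "{diff}"
)


def build_review_prompt(diff: str, max_chars: int = 12000) -> str:
    """Embed the diff in the prompt, truncating large diffs so they fit a small model's context."""
    if len(diff) > max_chars:
        diff = diff[:max_chars] + "\n[diff truncated]"
    return REVIEW_PROMPT.format(diff=diff)


def parse_issues(review_text: str) -> list[tuple[str, int, str]]:
    """Collect (file, line, comment) from 'ISSUE: file:line: comment' lines; skip malformed ones."""
    issues = []
    for raw in review_text.splitlines():
        line = raw.strip()
        if not line.startswith("ISSUE:"):
            continue
        parts = line[len("ISSUE:"):].split(":", 2)
        if len(parts) != 3:
            continue
        path, lineno, comment = (p.strip() for p in parts)
        if lineno.isdigit():
            issues.append((path, int(lineno), comment))
    return issues


def review_diff(base_url: str, token: str, diff: str,
                model: str = "mistral:7b") -> tuple[str, list[tuple[str, int, str]]]:
    """Send one non-streaming generate request; return (raw review text, parsed issues)."""
    body = json.dumps({"model": model, "prompt": build_review_prompt(diff), "stream": False})
    req = urllib.request.Request(
        f"{base_url.rstrip('/')}/ollama/api/generate",
        data=body.encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        text = json.load(resp)["response"]
    return text, parse_issues(text)
```

Asking for a strict line format lets the caller drop anything the model did not format correctly instead of posting it as a comment.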

Why This Matters for Startups

For an early-stage startup, every commit counts. You need the speed of modern tools, but you also can’t afford to take risks with your IP or get trapped in unpredictable SaaS pricing. That’s where self-hosted AI code review with Ollama really shines.

- Faster iteration without leaking IP → Your team gets immediate AI-powered feedback on every pull request, without ever sending sensitive code to external servers.
- Predictable infrastructure costs → Instead of paying per seat or per PR to a SaaS vendor, you control costs with your own server.
- Independence from VC-backed SaaS → You’re not at the mercy of aggressive pricing models or feature gates designed to maximize revenue.
- Empowered engineers → Your team gets private, secure AI support that improves velocity, reduces outages, and lets senior devs focus on meaningful work.

In short: this is AI code review for startups — secure, affordable, and tailored for the way small teams actually build software.

What Next?

AI code review is too valuable to ignore. It improves code quality, accelerates reviews, and makes developers more effective. But you don’t have to hand your source code to the cloud to get these benefits.

With Ollama + LiveReview, you can run secure, self-hosted AI code review on your own infrastructure — keeping your code private while enjoying all the advantages of AI-powered development.

👉 Ready to try it out?

- Follow our setup guide to deploy Ollama in minutes.
- Connect it with LiveReview and your GitHub, GitLab, or Bitbucket repos.
- Start reviewing code faster, securely, and on your own terms.

Your team gets the speed of AI. Your code stays yours.