Two weeks ago, I was on a customer call that was supposed to be about margin alerts, a feature we’re building. Standard stuff. They wanted to know when their agent costs spiked.

Then their engineer shared their screen and said something that’s been rattling around in my brain ever since:

“Look at this. Every time our agent handles a refund request, it pulls the customer’s entire purchase history, checks seven different policies, and generates a legal audit trail. But when it suggests a product recommendation? Just a simple embedding search. The refund costs us $3.40. The upsell costs $0.03. We charge the same for both…”

Why we built an agent monetization platform

Originally, we built Paid.ai to solve a simple problem: AI agents are black holes for money. You need to know what things cost before you go bankrupt. Track costs, set limits, optimize margins. Basic unit economics.

But this customer had figured out something else entirely. By instrumenting their costs, they’d accidentally built a business intelligence layer. The cost data was just a proxy for something much more interesting: operational complexity.

High-cost operations = high-value interactions.

Low-cost operations = commodity features.

Their most expensive customers were their most loyal. Their cheapest customers were tourists who didn’t stick around.

This matters more than we thought

Paid sits at a unique point in the stack. Because of our agentic signals architecture, we see every token, every API call, every tool invocation. We built this to track costs, but what we’re actually tracking is the entire lifecycle of value creation.

When your agent handles a customer request, we see:

- How many steps it took

- Which tools it used

- How many retries and fallbacks

- The total resource consumption
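
To make that list concrete, here is a minimal sketch of rolling raw per-step signals up into one summary per agent request. The `Event` record, its field names, and `summarize` are hypothetical and illustrative only; this is not Paid’s actual schema or API.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Event:
    # Hypothetical signal record; field names are illustrative, not Paid's schema.
    request_id: str
    step: str              # e.g. "llm_call" or "tool_call"
    tool: Optional[str]    # tool name for tool calls, None otherwise
    is_retry: bool         # True if this step retried a failed attempt
    cost_usd: float        # resource cost attributed to this step


@dataclass
class RequestSummary:
    steps: int = 0
    tools: set = field(default_factory=set)
    retries: int = 0
    total_cost_usd: float = 0.0


def summarize(events: list) -> dict:
    """Roll per-step events up into one RequestSummary per request_id."""
    summaries = defaultdict(RequestSummary)
    for e in events:
        s = summaries[e.request_id]
        s.steps += 1                  # how many steps it took
        if e.tool is not None:
            s.tools.add(e.tool)       # which tools it used
        if e.is_retry:
            s.retries += 1            # how many retries and fallbacks
        s.total_cost_usd += e.cost_usd  # total resource consumption
    return dict(summaries)
```

Sorting these summaries by `total_cost_usd` or `steps` is one simple way to separate the refund-style workflows from the embedding-search ones.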

But that’s just infrastructure.

What we’re really seeing is:

- Problem complexity

- Solution sophistication

- Value being created

- Trust being built (or destroyed)

We thought we were building a billing system for AI agents. Turns out we’re building the business intelligence layer for agents.

The patterns emerged

After that call, I started looking at our own data differently. Started asking different questions.

The patterns are everywhere once you look. I went back through the ~250 calls I had recorded (with Claude’s help; Projects are a LIFESAVER).

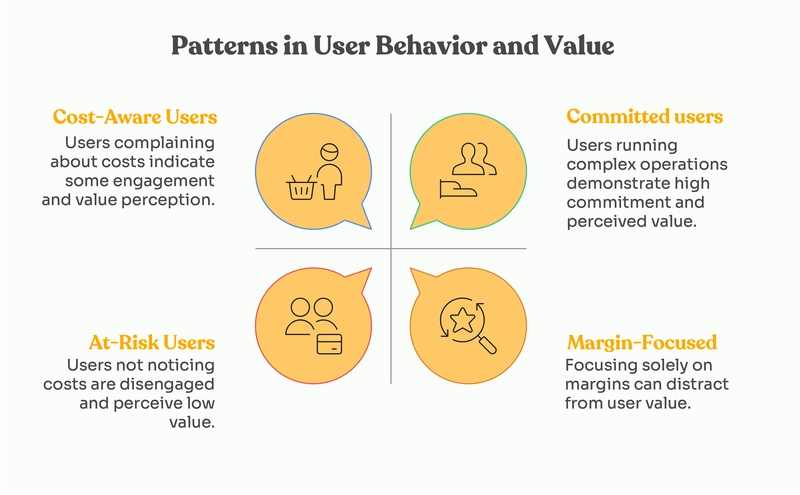

Pattern 1: Complexity correlates with commitment

Users whose agents run complex, multi-step operations stick around.

Sure, the operations are complex, but that complexity means they’ve integrated deeply into real workflows.

They’re not testing. They’re depending.

Pattern 2: Cost spikes are often a sign of PMF

When customers complain about costs, that’s good. It means they’re getting value.

When they don’t use enough to even notice costs? They’re already gone, they just haven’t canceled yet.

Pattern 3: The margin story can be a distraction

True, everyone’s freaking out about negative margins. So are we.

But margins only matter if you’re pricing on costs.

If you price on value, and you can see the value in the operations data, margins become a solved problem.
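
The refund-vs-upsell example from the opening call makes this concrete. In this minimal sketch, the $3.40 and $0.03 costs come from the customer’s quote; the $1.00 flat price and the per-operation “value-based” prices are made-up assumptions for illustration only, not real pricing data.

```python
# Per-operation costs quoted by the customer on the call.
COST_USD = {"refund": 3.40, "upsell": 0.03}

# ASSUMPTION: a hypothetical flat price charged for every operation.
FLAT_PRICE = 1.00
# ASSUMPTION: hypothetical value-based prices, one per operation.
VALUE_PRICES = {"refund": 8.00, "upsell": 0.10}


def margin(price: float, cost: float) -> float:
    """Gross margin as a fraction of price: (price - cost) / price."""
    return (price - cost) / price


def margins(prices: dict) -> dict:
    """Gross margin for each operation under the given price list."""
    return {op: margin(prices[op], cost) for op, cost in COST_USD.items()}


flat = margins({op: FLAT_PRICE for op in COST_USD})
value_based = margins(VALUE_PRICES)

for op in COST_USD:
    print(f"{op}: flat {flat[op]:+.0%}, value-based {value_based[op]:+.0%}")
```

Under the flat price, the refund runs at a steeply negative margin while the upsell looks great; pricing each operation on the value it delivers turns both positive.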

We’re sitting on a goldmine

This is the part where I’m supposed to be humble, but fuck it.

We’re sitting on something special.

Every other tool in the agent stack sees only its slice:

- Model providers see tokens in and out

- Orchestration frameworks see flow control

- Observability tools see errors and latency

We see the money. And money is the ultimate truth-teller. When someone routes a request through Paid, we don’t just track what it costs. We see:

- What they were willing to pay for

- What complexity they were willing to tolerate

- What value justified that cost

Yes, we built a cost tracker.

But costs are tracked through operational signals.

And operations are just business logic made visible.

Did you know that you have this data too?

Every AI company is sitting on this data, but many don’t know it or can’t access it.

They’re so focused on the AI race – better models, lower latency, more features – that they’re missing the business intelligence goldmine in their own logs.

Your agent’s runtime is telling you:

- Which features actually matter

- Which customers will upgrade

- Which use cases have product-market fit

- What your business actually does (vs what you think it does)

But most teams are just looking at costs and trying to make them go down.

That’s like having a goldmine and only caring about your electricity bill.

The agent economy needs more intelligence

We started Paid.ai because the agentic economy needed basic financial infrastructure. Track costs, manage margins, don’t go broke.

But what we discovered is that the cost layer is actually the intelligence layer.

Every dollar spent is a decision made.

Every API call is a value proposition.

Every operation is a business process made visible.

We built a cost tracker. It turned into a crystal ball.

And that customer who started this whole revelation? They’ve cut costs by 60% while increasing prices by 3x. Not because they optimized their infrastructure. Because they finally understood what their customers actually valued.

Their customers aren’t paying for AI. They’re paying for outcomes. The operations data shows you exactly which outcomes matter.

That’s the real insight: In the agentic economy, your costs aren’t your problem. They’re your roadmap.