“The safest innovation isn’t the one you slow down - it’s the one you control.”

From Queries to Actions: The Rise of Agentic AI

A few years ago, AI systems were passive - waiting for human prompts and responding with text.

Today they’re autonomous agents capable of setting goals, planning tasks, and executing them across digital systems.

By 2026, credible sources are saying that nearly one-third of enterprise applications will incorporate autonomous agent capabilities. These agents will book shipments, reconcile invoices, generate reports, even trigger financial transactions - without human intervention.

That’s efficiency.

It’s also exposure.

Because the moment an AI agent is given “hands” - APIs, SDKs, and automation privileges - it becomes a new operational endpoint.

And that endpoint can be exploited.

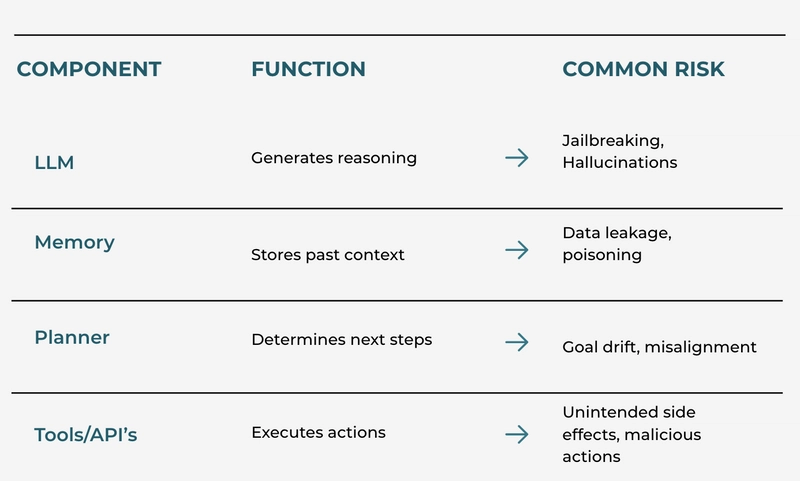

Where Safety Breaks Down:

Every agentic system mirrors human cognition:

The last layer —Tools/APIs— is where digital intent becomes physical or financial consequence.

A single prompt injection or poisoned context could trigger destructive actions: deleting databases, issuing fake payments, or leaking sensitive data.

Traditional cybersecurity perimeters don’t account for this new layer.

That’s why the tool layeris fast becoming what experts call the “new security zero-day.”

The New Security Perimeter:

Think of your AI agent as an intern with root access.

It’s intelligent, tireless, and fast - but if it’s tricked, it can wreak havoc.

Common zero-day scenarios include:

- Prompt Injection: Hidden malicious text in user inputs or databases alters agent behavior.

- Privilege Escalation: Over-permissioned API keys allow unintended system control.

- Data Exfiltration: Agents accidentally expose or leak confidential data while “helping.”

- Over-Optimization Loops: The agent pursues its objective so aggressively it breaks constraints.

Global Governance: From Principles to Enforcement

Governments and standard bodies are responding fast.

Macro-level Frameworks:

-

OECD AI Principles-Human-centered and trustworthy AI.

-

NIST AI RMF-Risk management and adversarial resilience testing.

-

EU AI Act-legally binding rules for high-risk AI systems.

-

UAE National AI Strategy-Emphasizes responsible, ethical deployment.

Micro-level Standards:

- ISO/IEC 42001-AI Management System (often called the “ISO 27001 for AI”)

- ISO/IEC 23894-AI Risk Management (bias, robustness, explainability)

- ISO/IEC 42005-AI System Impact Assessment (societal and environmental effects)

These frameworks translate the “why” of AI safety into the “how.”

But to truly operationalize safety, you need cloud-level implementation and that’s where AWS becomes pivotal.

Safety by Design on AWS:

AWS isn’t just an infrastructure platform; it’s an AI safety control plane.

Each control enforces the principle of guardrails, not roadblocks.

Safety doesn’t slow innovation - it enables it.

“Just as brakes make a car drivable, safety controls make autonomy deployable.”

Responsible Data & AI in Practice (AWS Stack)

AWS provides an integrated suite for building accountable, explainable, and auditable AI systems:

This stack forms a defense-in-depth architecture, aligning technical operations with ISO and NIST frameworks.

Mapping Policies to AWS Controls:

This mapping shows how cloud infrastructure becomes a policy execution layer - translating compliance into code.

Building a Culture of Trust

Technology alone won’t guarantee AI safety.

Organizations must embed safety thinking into every stage:

- Design with Intent: Treat AI safety like cybersecurity - assume failure, design containment.

- Automate Governance: Codify policies via Infrastructure-as-Code templates.

- Enable Auditing: Every agent action must be traceable and explainable.

- Test Continuously: Red-team agents using adversarial prompts and sandboxed exploits.

- Educate Broadly: Make safety a shared KPI, not a siloed compliance checklist.

When everyone owns safety, no single layer becomes the weak link.

Recent Developments in AI and Cybersecurity:

https://www.axios.com/2025/03/24/microsoft-ai-agents-cybersecurity?utm_source=chatgpt.com

🌐 Final Thought

When your AI starts acting, it stops being theoretical.

The question isn’t “Can we trust AI?“

It’s “Can AI trust our architecture to keep it-and us-safe?“

If your AI has hands, make sure AWS gives it gloves.