Table of Contents

- The Rise of LLMs: From Science Fiction to Reality

- Understanding Large Language Models: The Building Blocks

- The Problem: Why Building LLM Apps is Challenging

- Enter LangChain: The Framework That Changes Everything

- The Evolution of LangChain: From Chains to LCEL

- The LangChain Ecosystem: A Complete Platform

- Why LangChain Dominates Other Frameworks

- A World Without LangChain: The Standardization Problem

- Understanding LLM Limitations and How LangChain Solves Them

- Getting Started: Setting Up with Three Major Providers

- Your Learning Path: What Comes Next

- Conclusion: Your Journey into AI Development Begins

The Rise of LLMs: From Science Fiction to Reality {#rise-of-llms}

The other day, I was explaining to my nephew how artificial intelligence works, and I realized something profound: what seemed like science fiction just five years ago is now powering the apps we use daily. From ChatGPT helping students with homework to GitHub Copilot writing code alongside developers, Large Language Models have quietly revolutionized how we interact with technology.

But here’s what most people don’t realize: the real revolution isn’t just in chatbots. It’s in the shift from traditional software that follows rigid rules to intelligent applications that can understand context, make decisions, and adapt to user needs in real-time.

The Exponential Growth of AI Applications

In 2024, developers leaned into complexity with multi-step agents, sharpened efficiency by doing more with fewer LLM calls, and added quality checks to their apps using methods of feedback and evaluation. This shift represents something unprecedented in software development: we’re moving from applications that simply execute commands to applications that can reason, plan, and solve complex problems independently.

Consider this progression: in 2020, most developers had never heard of GPT. By 2022, they were experimenting with OpenAI’s API. Today, they’re building sophisticated AI agents that can research topics, write reports, manage databases, and even debug their own code. We’re witnessing the emergence of what I call “thinking software.”

Understanding Large Language Models: The Building Blocks {#understanding-llms}

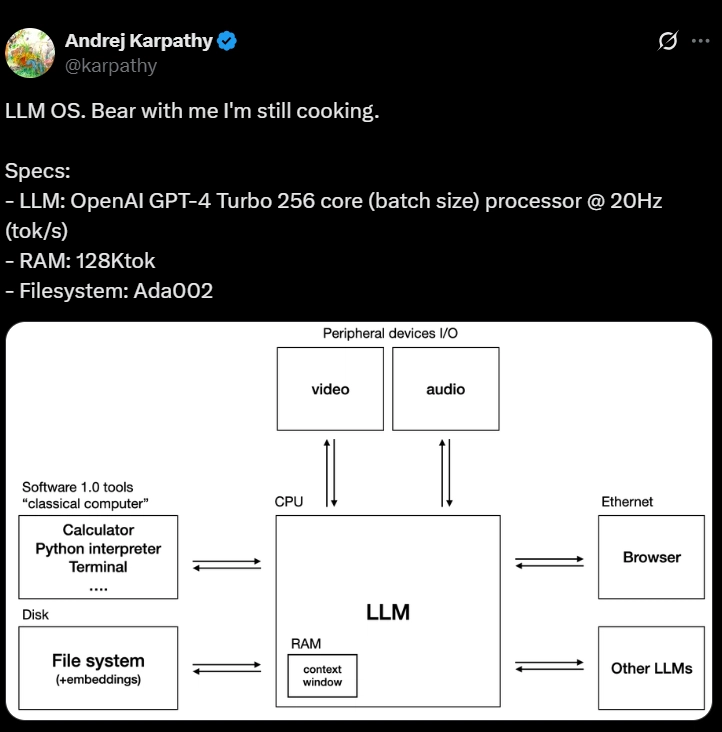

Remember the tweet from Andrej Karpathy (a role model of mine in AI) about LLMs being the new OS? 😭

Before we dive into LangChain, you need to understand what Large Language Models actually are and why they’re revolutionary. Think of an LLM as a sophisticated pattern recognition system trained on vast amounts of text data.

What Makes LLMs Different

Traditional software works like a recipe: if condition A is met, do action B. LLMs work more like an experienced chef who understands ingredients, techniques, and can create new dishes based on understanding patterns in cooking rather than following rigid instructions.

When you ask an LLM “Write a marketing email for a fitness app,” it doesn’t retrieve a template. Instead, it generates text by understanding patterns of what marketing emails look like, what fitness apps typically promote, and how persuasive language works. This pattern-based generation is what makes LLMs so powerful and versatile.

The Three Types of Intelligence LLMs Possess

Linguistic Intelligence: LLMs understand language structure, grammar, context, and can generate human-like text. They can translate between languages, summarize complex documents, and maintain conversation context across multiple exchanges.

Contextual Intelligence: LLMs can understand the broader context of a conversation or task. Within the text they are given, they can refer back to what you discussed earlier and build upon it to provide more relevant responses.

Reasoning Intelligence: Modern LLMs demonstrate emergent reasoning abilities. They can break down complex problems, perform multi-step calculations, and even engage in logical deduction—though with important limitations we’ll discuss later.

The Problem: Why Building LLM Apps is Challenging {#the-problem}

Now here’s where most developers hit their first wall: while LLMs are incredibly powerful, building applications with them is surprisingly complex. Let me explain why through a real scenario.

The Hidden Complexity

Imagine you want to build a simple research assistant that can answer questions about your company’s documents. Sounds straightforward, right? Here’s what you actually need to handle:

Memory Management: LLMs don’t remember previous conversations by default. You need to manually manage conversation history, decide what to keep and what to discard, and handle the token limits that constrain how much context you can provide.

Integration Complexity: Your research assistant needs to work with different document formats (PDFs, Word docs, web pages), connect to various LLM providers (OpenAI, Google, Anthropic), and potentially integrate with databases, search engines, and other APIs.

Prompt Engineering: Crafting effective prompts is both art and science. You need different prompting strategies for different tasks, error handling when LLMs produce unexpected outputs, and ways to ensure consistent behavior across different interactions.

Data Processing Pipeline: Documents need to be loaded, split into manageable chunks, converted into searchable formats, and retrieved efficiently when users ask questions. This involves understanding vector embeddings, similarity search, and information retrieval concepts.
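
To make the memory-management point concrete, here is a minimal plain-Python sketch of trimming conversation history to fit a token budget. It does not use LangChain; `estimate_tokens` and `trim_history` are hypothetical helpers, and the four-characters-per-token estimate is a rough assumption rather than a real tokenizer.

```python
# Hypothetical helpers for illustration; not part of LangChain.

def estimate_tokens(text: str) -> int:
    """Rough token estimate, assuming about 4 characters per token."""
    return max(1, len(text) // 4)


def trim_history(messages: list[dict], max_tokens: int) -> list[dict]:
    """Keep the most recent messages whose combined estimate fits the budget."""
    kept: list[dict] = []
    used = 0
    # Walk backwards so the newest messages are considered first.
    for message in reversed(messages):
        cost = estimate_tokens(message["content"])
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    {"role": "user", "content": "a" * 40},       # about 10 tokens
    {"role": "assistant", "content": "b" * 40},  # about 10 tokens
    {"role": "user", "content": "c" * 40},       # about 10 tokens
]
recent = trim_history(history, max_tokens=20)
print([m["content"][0] for m in recent])  # the oldest message is dropped
```

Dropping the oldest messages is only one possible policy; summarizing them instead is another, at the cost of an extra model call.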

The Development Bottleneck

Before LangChain, developers often spent most of their time on infrastructure and plumbing (in my experience, roughly 80%), leaving only about 20% for the actual AI logic. This created what I call the “AI development paradox”: the more powerful the underlying models became, the more complex it became to harness their power in real applications.

Most developers found themselves reinventing the same patterns over and over: conversation management, prompt templates, model switching, error handling, and result parsing. Every project started with weeks of boilerplate code before any real AI functionality could be built.

Enter LangChain: The Framework That Changes Everything {#enter-langchain}

LangChain is the platform developers and enterprises choose to build gen AI apps from prototype through production. But what does this actually mean for you as a developer?

The Philosophy Behind LangChain

LangChain was built around a simple but powerful philosophy: AI application development should focus on the unique value you’re creating, not on the repetitive infrastructure work. The framework provides pre-built, tested components for all the common patterns in AI development, allowing you to compose them like LEGO blocks to create sophisticated applications.

Think of LangChain as the jQuery of AI development. Just as jQuery simplified JavaScript DOM manipulation and AJAX calls, LangChain simplifies LLM integration, conversation management, and AI workflow orchestration.

Core Design Principles

Modularity: Every component in LangChain is designed to work independently or as part of larger systems. You can use LangChain’s conversation memory without using its document loaders, or vice versa.

Composability: Components are designed to work together seamlessly. This means you can combine a document loader, an embedding model, a vector store, and a language model with just a few lines of code.

Standardization: LangChain provides consistent interfaces across different LLM providers, vector databases, and document formats. This means you can switch from OpenAI to Google Gemini with minimal code changes.
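
The standardization idea can be sketched in plain Python: application code depends on one shared method, and each provider sits behind it. The classes below are hypothetical stand-ins, not LangChain classes; the only detail borrowed from LangChain is the method name `invoke`, which the setup test later in this post calls on all three providers.

```python
from typing import Protocol


class ChatModel(Protocol):
    """The one method every provider wrapper in this sketch must offer."""

    def invoke(self, prompt: str) -> str: ...


class FakeProviderA:
    """Hypothetical provider; a real one would call a remote API here."""

    def invoke(self, prompt: str) -> str:
        return f"[provider-a] {prompt}"


class FakeProviderB:
    """A second hypothetical provider with the same interface."""

    def invoke(self, prompt: str) -> str:
        return f"[provider-b] {prompt}"


def summarize(model: ChatModel, text: str) -> str:
    """Application code depends only on the shared interface."""
    return model.invoke(f"Summarize: {text}")


# Swapping providers changes one argument, not the application logic.
print(summarize(FakeProviderA(), "quarterly report"))
print(summarize(FakeProviderB(), "quarterly report"))
```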

The Five Core Abstractions

LangChain organizes AI development around five fundamental abstractions that every AI application needs:

Models: The interface to different LLM providers (OpenAI, Google, Anthropic, local models). LangChain wraps each provider’s client behind a consistent interface, so details such as authentication, retries, and error handling are configured the same way across providers.

Prompts: Templates and strategies for crafting effective prompts. Instead of hardcoding prompts, you create reusable templates that can adapt to different contexts and inputs.

Chains: Sequences of operations that can be composed together. A chain might load a document, extract key information, summarize it, and then answer questions based on that summary.

Memory: Systems for maintaining conversation context and application state. LangChain provides different memory strategies optimized for different use cases and token budgets.

Agents: AI systems that can make decisions about what actions to take. Agents can use tools, access external APIs, and create dynamic workflows based on user inputs and context.
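
Of the five abstractions, prompts are the easiest to sketch without any dependencies. The example below uses the standard library’s `string.Template` rather than LangChain’s own prompt classes, and `EMAIL_PROMPT` and `render_prompt` are hypothetical names chosen for illustration.

```python
from string import Template

# A reusable prompt with named placeholders (hypothetical wording).
EMAIL_PROMPT = Template(
    "Write a $tone marketing email for $product aimed at $audience."
)


def render_prompt(template: Template, **values: str) -> str:
    """Fill the template; raises KeyError if a placeholder is missing."""
    return template.substitute(**values)


prompt = render_prompt(
    EMAIL_PROMPT,
    tone="friendly",
    product="a fitness app",
    audience="busy parents",
)
print(prompt)
```

Because the template is separate from the values, the same prompt can be reused for a different product or audience without editing any strings by hand.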


The Evolution of LangChain: From Chains to LCEL {#langchain-evolution}

Understanding LangChain’s evolution helps you appreciate why it’s become the dominant framework for AI development. Let me walk you through this journey and explain how it affects your learning path.

The Early Days: Chain-Based Architecture

LangChain initially focused on the concept of “chains” – sequential operations that could be connected together. If you wanted to translate text and then summarize it, you would create a translation chain and connect it to a summarization chain. This was powerful but had limitations in terms of flexibility and performance optimization.

The LCEL Revolution

The LangChain Expression Language (LCEL) takes a declarative approach to building new Runnables from existing Runnables. This means that you describe what should happen, rather than how it should happen, allowing LangChain to optimize the run-time execution of the chains.

In the third quarter of 2023, the LangChain Expression Language (LCEL) was introduced, which provides a declarative way to define chains of actions. This represents a fundamental shift in how AI applications are built.

Why LCEL Matters for Beginners

LCEL introduces a pipe operator (|) that allows you to chain operations in a natural, readable way. More importantly, LCEL automatically handles many complex aspects of AI application development:

Automatic Optimization: LCEL can run operations in parallel when possible, reducing latency and improving user experience.

Built-in Error Handling: Chains built with LCEL can be given retry and fallback behavior without custom wrapper code, making your applications more reliable.

Streaming Support: LCEL supports streaming responses by default, allowing users to see results as they’re generated rather than waiting for complete responses.

Observability: LCEL integrates seamlessly with LangSmith for monitoring and debugging, giving you insights into how your applications perform in production.
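
To see why a pipe operator reads so naturally, here is a toy sketch of how `|` composition can work in Python. `Step` is a hypothetical class written for this post, not LangChain’s Runnable, and the “model” is a lambda that just upper-cases its input.

```python
from typing import Any, Callable


class Step:
    """Toy stand-in for a composable unit; not LangChain's Runnable."""

    def __init__(self, func: Callable[[Any], Any]):
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def __or__(self, other: "Step") -> "Step":
        # a | b builds a new Step that runs a, then feeds its result to b.
        return Step(lambda value: other.invoke(self.invoke(value)))


# Hypothetical stages: build a prompt, call a fake model, parse the reply.
make_prompt = Step(lambda topic: f"Tell me a fact about {topic}.")
fake_model = Step(lambda prompt: {"content": prompt.upper()})
parse_output = Step(lambda message: message["content"])

chain = make_prompt | fake_model | parse_output
print(chain.invoke("otters"))
```

The composed `chain` is itself a `Step`, which is what lets a framework wrap the whole pipeline with extras such as streaming or tracing in one place.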

This evolution means that as a beginner, you’re learning a mature, production-ready framework rather than an experimental tool. The patterns you learn today will remain relevant as the framework continues to evolve.

The LangChain Ecosystem: A Complete Platform {#langchain-ecosystem}

LangChain’s suite of products supports developers along each step of their development journey. Understanding this ecosystem is crucial because it shows you’re not just learning a library—you’re entering a complete platform for AI development.

LangChain Core: The Foundation

LangChain Core provides the essential abstractions and interfaces for AI application development. This is where you’ll spend most of your time as a beginner, learning to work with models, prompts, and chains.

LangSmith: Development and Production Monitoring

LangSmith is a unified observability & evals platform where teams can debug, test, and monitor AI app performance — whether building with LangChain or not. Think of LangSmith as the Chrome DevTools for AI applications.

LangSmith is framework agnostic — you can use it with or without LangChain’s open source frameworks langchain and langgraph. This means the debugging and monitoring skills you learn with LangSmith will be valuable regardless of what framework you use in the future.

LangServe: From Development to Deployment

LangServe helps developers deploy LangChain runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. This solves one of the biggest challenges in AI development: going from a working prototype to a deployed application that users can actually access.

The Integration Advantage

What makes this ecosystem powerful is how these components work together. You can develop an application using LangChain Core, debug and optimize it using LangSmith, and deploy it using LangServe—all with consistent patterns and minimal configuration.

LangSmith and LangServe, in essence, function as the monitoring and deployment arms of LangChain. They work in tandem to ensure not only the smooth creation of your app but also its efficient deployment and continuous improvement.

Why LangChain Dominates Other Frameworks {#why-langchain-dominates}

Having worked with various AI frameworks, I can tell you that LangChain’s dominance isn’t accidental. Let me explain why it consistently outperforms alternatives and why learning it is a strategic career decision.

Ecosystem Maturity and Stability

While newer frameworks often promise simplicity, they lack the battle-tested reliability that comes from widespread adoption. LangChain has been stress-tested by thousands of developers building production applications. The patterns and best practices embedded in the framework represent collective wisdom from real-world AI development.

Provider Agnosticism

One of LangChain’s greatest strengths is its provider-agnostic design. You can start developing with one LLM provider and switch to another with minimal code changes. This is crucial because the AI landscape is rapidly evolving, and the best provider today might not be the best provider tomorrow.
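
One practical payoff of a provider-agnostic design is falling back to a second provider when the first one fails. The sketch below shows the idea in plain Python with two hypothetical provider functions. LangChain runnables offer a `with_fallbacks` method for this purpose; this example does not use it and only illustrates the control flow.

```python
from typing import Callable, Sequence


def invoke_with_fallbacks(
    providers: Sequence[tuple[str, Callable[[str], str]]],
    prompt: str,
) -> tuple[str, str]:
    """Try each provider in order; return (name, reply) from the first success."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise RuntimeError("All providers failed: " + "; ".join(errors))


def flaky_provider(prompt: str) -> str:
    """Hypothetical provider that is always down."""
    raise TimeoutError("simulated outage")


def backup_provider(prompt: str) -> str:
    """Hypothetical provider that echoes the prompt."""
    return f"echo: {prompt}"


name, reply = invoke_with_fallbacks(
    [("primary", flaky_provider), ("backup", backup_provider)],
    "hello",
)
print(name, reply)
```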

Community and Learning Resources

LangChain benefits from the largest community in AI development, which means better documentation, more tutorials, active forums for getting help, and a larger pool of developers to learn from and hire. When you encounter a problem building with LangChain, chances are someone else has solved it before.

Enterprise Adoption

Major companies choose LangChain for their AI initiatives, which creates more job opportunities and ensures long-term support for the framework. Learning LangChain isn’t just about personal projects—it’s about building skills that are directly applicable in professional environments.

Future-Proofing

LangChain’s architecture is designed to adapt to new AI capabilities as they emerge. The framework has evolved from simple sequential chains to complex agentic workflows while largely maintaining backward compatibility. This means the time you invest learning LangChain should remain valuable as AI technology continues to advance.

A World Without LangChain: The Standardization Problem {#world-without-langchain}

To truly understand LangChain’s value, let me paint a picture of AI development without standardized frameworks. This will help you appreciate why LangChain has become essential for professional AI development.

The Tower of Babel Problem

Without LangChain, every AI project becomes a custom implementation. Developers create their own conventions for prompt management, their own patterns for conversation memory, and their own integrations for different LLM providers. This leads to what I call the “Tower of Babel” problem—teams can’t easily collaborate, share code, or learn from each other because everyone speaks a different “language.”

The Maintenance Nightmare

Custom AI implementations require ongoing maintenance as LLM providers update their APIs, new models are released, and requirements change. Without a framework like LangChain, developers spend significant time maintaining infrastructure code rather than building features that add value to their applications.

The Knowledge Transfer Challenge

When developers leave teams or projects, they take their custom AI implementation knowledge with them. New team members must learn project-specific patterns rather than leveraging standardized approaches. This creates significant onboarding friction and knowledge silos within organizations.

The Standardization Benefits

LangChain solves these problems by providing standard patterns that work across teams, projects, and organizations. When you join a new project that uses LangChain, you immediately understand the architecture because it follows established conventions. When you want to integrate a new LLM provider, you know it will work with your existing code because LangChain provides consistent interfaces.

This standardization is similar to how web frameworks like React or Django transformed their respective domains. They didn’t just make individual projects easier to build—they created shared languages that enabled entire ecosystems to flourish.

Understanding LLM Limitations and How LangChain Solves Them {#llm-limitations}

Every AI developer needs to understand the fundamental limitations of Large Language Models and how frameworks like LangChain help address these challenges. This understanding is crucial for building reliable AI applications.

Limitation 1: Context Window Constraints

LLMs have limited context windows: they can only consider a certain amount of text when generating responses. Depending on the model, this ranges from about 4,000 to 200,000 tokens (roughly 3,000 to 150,000 words, at about 0.75 words per token), and a few models such as Gemini 1.5 Pro accept up to 1 million tokens. Even so, large documents and long conversations can exceed the limit.

How LangChain Solves It: LangChain provides memory management systems that intelligently summarize older parts of conversations, document splitting strategies that break large texts into manageable chunks, and retrieval systems that find the most relevant information to include in the context window.
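
Document splitting is simple enough to sketch by hand. The function below is a hypothetical character-based splitter with overlap, not LangChain’s implementation; real splitters usually try to break on paragraph or sentence boundaries rather than at fixed character offsets.

```python
def split_text(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into fixed-size character chunks that share `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


chunks = split_text("abcdefghij", chunk_size=4, overlap=1)
print(chunks)
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, which helps retrieval later.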

Limitation 2: Knowledge Cutoffs and Hallucinations

LLMs are trained on data up to a specific cutoff date and sometimes generate plausible-sounding but incorrect information (hallucinations). They also can’t access real-time information or update their knowledge based on new events.

How LangChain Solves It: LangChain enables Retrieval Augmented Generation (RAG), which allows LLMs to access external knowledge sources. It provides tools for web search, database queries, and document retrieval, enabling LLMs to work with current and accurate information rather than relying solely on training data.
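
The retrieval step of RAG can be illustrated with nothing but the standard library. This sketch swaps learned embeddings for a crude bag-of-words vector, so it only matches on shared words; the documents, function names, and prompt wording are all hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    query = bag_of_words(question)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query, bag_of_words(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Place retrieved text ahead of the question so the model can ground its answer."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


# Hypothetical company documents.
docs = [
    "Refunds are processed within 14 days of a return request.",
    "Our office is closed on public holidays.",
    "Support is available by email around the clock.",
]
question = "How long do refunds take?"
top = retrieve(question, docs, k=1)
print(build_prompt(question, top))
```

In a real application the vectors would come from an embedding model and live in a vector store, but the shape of the pipeline (embed, rank, stuff into the prompt) stays the same.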

Limitation 3: Lack of Tool Use and Action Capabilities

On their own, LLMs only generate text: they can’t reliably perform exact calculations, and they can’t access databases, send emails, or interact with external systems. This severely limits their practical applications.

How LangChain Solves It: LangChain’s agent framework allows LLMs to use tools and perform actions. Agents can decide when to use calculators for math problems, when to search the web for current information, when to query databases, and how to chain multiple tools together to solve complex problems.
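
The agent idea boils down to a loop: the model decides, a tool runs, and the result is fed back. Here is a toy version in which the “model” is a scripted function rather than an LLM. Every name in it (`scripted_model`, `run_agent`, `TOOLS`) is hypothetical, and it is not how LangChain’s agents are implemented.

```python
import operator
import re
from typing import Callable, Optional

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def calculator(expression: str) -> str:
    """Tool: evaluate 'a op b' (for example '12 * 7') without using eval."""
    left, symbol, right = expression.split()
    return str(OPS[symbol](float(left), float(right)))


# Registry the agent loop consults by tool name.
TOOLS: dict[str, Callable[[str], str]] = {"calculator": calculator}


def scripted_model(question: str, observation: Optional[str]) -> dict:
    """Stand-in for an LLM: picks a tool first, then answers from the observation."""
    if observation is not None:
        return {"answer": f"The result is {observation}."}
    match = re.search(r"(\d+(?:\.\d+)?)\s*([+\-*/])\s*(\d+(?:\.\d+)?)", question)
    if match:
        return {"tool": "calculator", "input": " ".join(match.groups())}
    return {"answer": "I can answer that without a tool."}


def run_agent(question: str, max_steps: int = 3) -> str:
    """Loop: ask the model, run the chosen tool, feed the result back."""
    observation = None
    for _ in range(max_steps):
        decision = scripted_model(question, observation)
        if "answer" in decision:
            return decision["answer"]
        observation = TOOLS[decision["tool"]](decision["input"])
    return "Stopped: step limit reached."


print(run_agent("What is 12 * 7?"))
```

The step limit matters in practice: without it, a model that keeps requesting tools would loop forever.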

Limitation 4: Inconsistent Output Formats

LLMs often produce responses in varying formats, making it difficult to integrate their outputs into applications that expect structured data.

How LangChain Solves It: LangChain provides output parsers that can structure LLM responses into consistent formats, prompt templates that encourage consistent output formats, and validation systems that ensure responses meet application requirements.
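
An output parser can be as small as “find the JSON and check its keys.” The function below is a hypothetical stand-in for that idea, using only the standard library; LangChain’s own parsers do more, and the sample reply is invented for the example.

```python
import json
import re


def parse_json_reply(reply: str, required_keys: set[str]) -> dict:
    """Extract the JSON object from a model reply and check its keys."""
    # Greedy match from the first "{" to the last "}" in the reply.
    match = re.search(r"\{.*\}", reply, flags=re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in reply")
    data = json.loads(match.group(0))
    missing = required_keys - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# A reply with chatty text around the JSON, as models often produce.
raw_reply = (
    "Sure! Here is the result:\n"
    '{"sentiment": "positive", "score": 0.9}\n'
    "Hope that helps."
)
parsed = parse_json_reply(raw_reply, {"sentiment", "score"})
print(parsed["sentiment"], parsed["score"])
```

Raising an error on a malformed reply gives the caller a clear place to retry the request or fall back to a default.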

The Emergent Capabilities

When these solutions work together, something powerful emerges: LLMs become the “reasoning engine” in applications that can access external knowledge, use tools, maintain context, and produce structured outputs. This transforms LLMs from impressive text generators into practical AI assistants capable of solving real-world problems.

Getting Started: Setting Up with Three Major Providers {#getting-started}

Now that you understand the why behind LangChain, let’s get you set up with the three most important LLM providers in today’s ecosystem. Each provider has distinct advantages, and understanding when to use each one will make you a more effective AI developer.

Understanding the Provider Landscape

The choice of LLM provider significantly impacts your application’s performance, cost, and capabilities. Let me explain the strengths and ideal use cases for each major provider.

Provider 1: OpenAI – The Pioneer

Strengths: OpenAI offers the most mature and widely-tested models with consistent performance across diverse tasks. GPT-4 remains the gold standard for complex reasoning tasks, while GPT-3.5 Turbo provides excellent performance at lower costs. OpenAI’s API is highly reliable with extensive documentation.

Ideal For: Production applications requiring consistent high-quality outputs, complex reasoning tasks, applications where cost is less critical than performance, and scenarios where you need proven reliability.

Considerations: Higher costs compared to alternatives, API dependency creates vendor lock-in, and rate limits can be restrictive for high-volume applications.

Provider 2: Google Gemini – The Multimodal Leader

Strengths: Google’s Gemini models excel at multimodal tasks (combining text, images, and code), offer generous free tiers perfect for learning and experimentation, and provide competitive performance at lower costs. Gemini 1.5 Pro offers one of the largest context windows available (up to 1 million tokens).

Ideal For: Applications involving images and text together, projects with budget constraints, applications requiring large context windows, and developers learning AI development who want extensive free usage.

Considerations: Newer ecosystem with fewer third-party integrations, performance can be inconsistent across different task types, and availability varies by geographic region.

Provider 3: Groq – The Speed Champion

Strengths: Groq provides incredibly fast inference speeds (often 10x faster than traditional providers), excellent performance for real-time applications, and competitive pricing for high-volume usage. Their custom hardware acceleration makes them ideal for latency-sensitive applications.

Ideal For: Real-time chat applications, high-volume API usage scenarios, applications where response speed is critical, and cost-sensitive production deployments.

Considerations: Limited model selection compared to OpenAI or Google, newer provider with smaller ecosystem, and less extensive documentation and community resources.

Setting Up Your Development Environment

Let’s get you configured with all three providers so you can experiment and find what works best for your projects.

Step 1: Install Required Packages

    # Create a new Python environment
    python -m venv langchain_env
    source langchain_env/bin/activate  # On Windows: langchain_env\Scripts\activate

    # Install LangChain with support for all three providers
    pip install langchain-openai langchain-google-genai langchain-groq python-dotenv

Step 2: Obtain API Keys

For OpenAI: Visit platform.openai.com, create an account, and generate an API key. New accounts have sometimes received around $5 in free credits, but offers vary, so check the current billing page.

For Google Gemini: Visit Google AI Studio, sign in with your Google account, and create an API key. When this was written, the free tier allowed 1,500 requests per day; limits vary by model and change over time.

For Groq: Visit console.groq.com, create an account, and generate an API key. They offer generous free tier limits for testing.

Step 3: Configure Environment Variables

    # Create .env file for secure API key storage
    echo "OPENAI_API_KEY=your_openai_api_key_here" > .env
    echo "GOOGLE_API_KEY=your_google_gemini_api_key_here" >> .env
    echo "GROQ_API_KEY=your_groq_api_key_here" >> .env
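
Before making any API calls, it can save time to confirm the three keys are actually set. Here is a small sketch using only the standard library. `missing_keys` is a hypothetical helper; the variable names are the ones written to `.env` above.

```python
import os
from typing import Mapping

REQUIRED_KEYS = ("OPENAI_API_KEY", "GOOGLE_API_KEY", "GROQ_API_KEY")


def missing_keys(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the names of required keys that are unset or blank."""
    return [name for name in REQUIRED_KEYS if not env.get(name, "").strip()]


# Example with an explicit mapping instead of the real environment.
example_env = {"OPENAI_API_KEY": "sk-example", "GROQ_API_KEY": "  "}
print(missing_keys(example_env))
```

In a real script you would call `missing_keys()` with no arguments after `load_dotenv()` has run, and stop early if the list is not empty.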

Step 4: Test Your Setup

    # test_providers.py
    from dotenv import load_dotenv

    # Load API keys from the .env file into the environment
    load_dotenv()

    # The model IDs below are the ones used when this post was written.
    # Providers retire models over time, so swap in a current ID if a call
    # fails with a "model not found" error.

    # Test OpenAI
    try:
        from langchain_openai import ChatOpenAI

        openai_llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0.1)
        openai_response = openai_llm.invoke("Say 'OpenAI connection successful!'")
        print("OpenAI:", openai_response.content)
    except Exception as e:
        print("OpenAI setup issue:", e)

    # Test Google Gemini
    try:
        from langchain_google_genai import ChatGoogleGenerativeAI

        gemini_llm = ChatGoogleGenerativeAI(model="gemini-1.5-flash", temperature=0.1)
        gemini_response = gemini_llm.invoke("Say 'Gemini connection successful!'")
        print("Gemini:", gemini_response.content)
    except Exception as e:
        print("Gemini setup issue:", e)

    # Test Groq
    try:
        from langchain_groq import ChatGroq

        groq_llm = ChatGroq(model="mixtral-8x7b-32768", temperature=0.1)
        groq_response = groq_llm.invoke("Say 'Groq connection successful!'")
        print("Groq:", groq_response.content)
    except Exception as e:
        print("Groq setup issue:", e)

Run the test:

    python test_providers.py

If you see success messages from all three providers, you’re ready to start building AI applications! If any provider fails, double-check your API keys and internet connection.

Choosing the Right Provider for Your Projects

As you progress through your learning journey, you’ll develop intuition for when to use each provider. Start with Google Gemini for learning (generous free tier), experiment with Groq for performance-critical applications, and consider OpenAI for production applications requiring consistent, high-quality outputs.

Recommended Learning Resources

Documentation: Start with the official LangChain documentation, which is comprehensive and regularly updated. The conceptual guides provide excellent background on AI development patterns.

Hands-On Practice: Build projects that solve real problems you encounter in your daily life or work. The best learning comes from tackling challenges that matter to you personally.

Community Engagement: Join the LangChain Discord community where you can ask questions, share projects, and learn from other developers’ experiences.

Conclusion: Your Journey into AI Development Begins {#conclusion}

We’ve covered significant ground in this comprehensive introduction to LangChain. You now understand not just what LangChain is, but why it exists, how it solves fundamental problems in AI development, and where it fits in the broader landscape of artificial intelligence.

The Bigger Picture

Learning LangChain isn’t just about mastering a particular framework—it’s about developing the skills and mindset needed to build intelligent applications in an AI-driven world. The concepts you’ll learn with LangChain—prompt engineering, memory management, tool integration, and agentic workflows—are fundamental to AI development regardless of which specific tools you use.

Why This Matters for Your Career

The skills you develop with LangChain are directly applicable to the fastest-growing segment of software development. Companies across every industry are looking for developers who can build AI-powered applications, and LangChain proficiency is increasingly becoming a requirement rather than a nice-to-have skill.

Your Next Steps

With your development environment set up and your learning path mapped out, you’re ready to start building. Remember that becoming proficient with LangChain is a journey, not a destination. The framework continues to evolve, new capabilities are regularly added, and the broader AI landscape is rapidly changing.

Start with simple projects, focus on understanding the underlying concepts rather than just copying code, and don’t hesitate to experiment and make mistakes—that’s where the real learning happens.

Looking Forward

In our next module, we’ll dive deep into models and prompt engineering. You’ll learn how to craft prompts that consistently produce the outputs you need, understand the nuances of different model types, and build your first complete LangChain application.

The journey into AI development is one of the most exciting paths you can take in technology today. LangChain gives you the tools to turn your ideas into reality, and now you have the foundation to start building.

Ready to start building? Drop a comment below about what type of AI application you’re most excited to create, and don’t forget to follow me for Module 2 where we’ll build your first real LangChain project!


This is Module 1 of our comprehensive LangChain series. Each module builds on the previous one, taking you from complete beginner to production-ready AI developer. Subscribe to get notified when new modules are released!